Up your application development game in JavaScript (JS) or extended TypeScript (eTS) with the enhanced SDK of API version 8. Thanks to the optimized ArkUI and ArkCompiler, you can spruce up the UI of your JS/eTS applications with sophisticated UI drawing features, and speed up the launch of your JS applications by 50%.

Introduction

The upgrade to API version 8 adds around 2800 APIs and brings across-the-board optimizations covering distributed capabilities, phone, network, media, and security. The ample JavaScript APIs make it much easier to develop a well-rounded application. In addition, with the W3C canvas standard interfaces and the eTS/H5/C++ hybrid development capability, ArkUI is now better suited to developing complex applications, such as gaming, media, and browser applications.

Milestone Planning

Version Types

Getting Started

1. Obtaining the 3.0 SDK

Download or update the HarmonyOS SDK through DevEco Studio’s SDK Manager, or manage the HarmonyOS SDK through Command Line Tools.

2. Obtaining the 3.0 Emulator

Make full use of the simulation capability for numerous single device categories and the Super Device. Bid farewell to the hassle caused by a lack of devices and complex network configuration.

Overview

Last updated: 2021-11-04 12:52

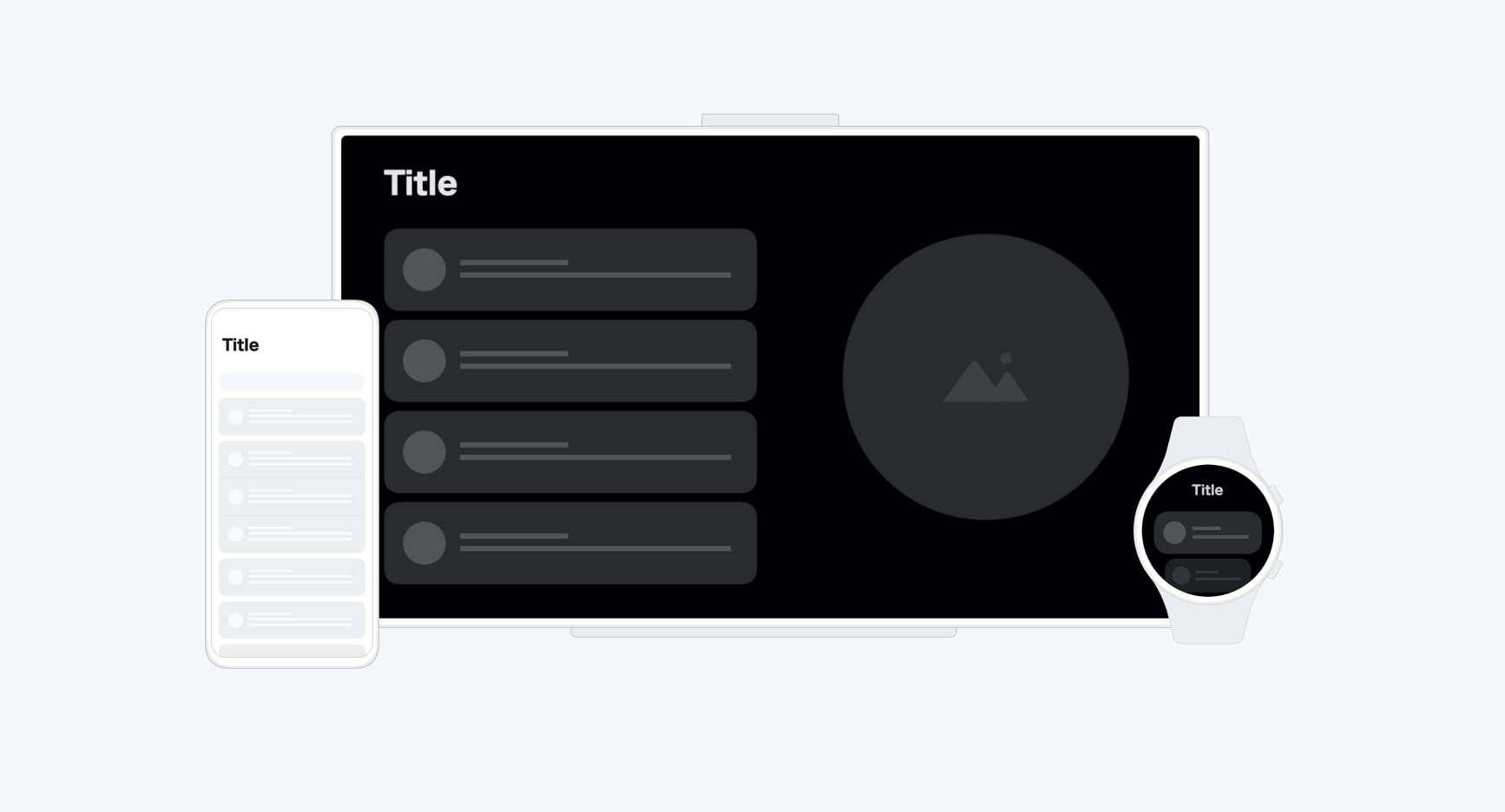

HarmonyOS is a distributed operating system oriented to smart lifestyles in all scenarios. Based on traditional single-device system capabilities, HarmonyOS proposes the concept of a distributed system adapting to multiple devices based on the same set of system capabilities. Such devices include mobile phones, tablets, PCs, smart TVs, wearables, smart speakers, head units, earphones, and AR/VR glasses.

For consumers, HarmonyOS integrates their various smart devices into One Super Device that delivers the best possible interaction experience through ultra-fast connection, multi-device collaboration, and resource sharing between different devices.

During development of applications for multiple types of devices, the following considerations apply to UX design:

Difference

You must fully understand the devices to be supported, including the screen size, interaction mode, user scenarios, and user groups, and carry out design based on device characteristics. For details about the design methods, see the device design guide, such as Vision Design Guide and Wearables Design Guide.

Consistency

Beyond the characteristics of each device, consider the features that different devices have in common, and use general design methods that account for device differences while supporting cross-device consistency. This reduces the learning difficulty for users and lowers the development cost of applications. For details, see the subsequent chapters in this design guide.

Collaboration

When considering the collaboration between multiple devices, you need to understand multiple possible collaboration modes to maximize the unique multi-device seamless switching experience on HarmonyOS. For details about the design methods, see Distributed System Design Guide.

When implementing general design and development for multiple devices that can run on HarmonyOS, consider the following aspects:

- Design philosophy: Understand the core principle of HarmonyOS to ensure a consistent experience on multiple devices.

- Human factors and ergonomics: Understand how human factors research provides systematic scientific guidance for UX design.

- Application architecture: Understand the common application architectures and put them into practice in application design.

- Human-computer interaction: Understand various human-computer interaction modes and precautions for the design of each interaction mode.

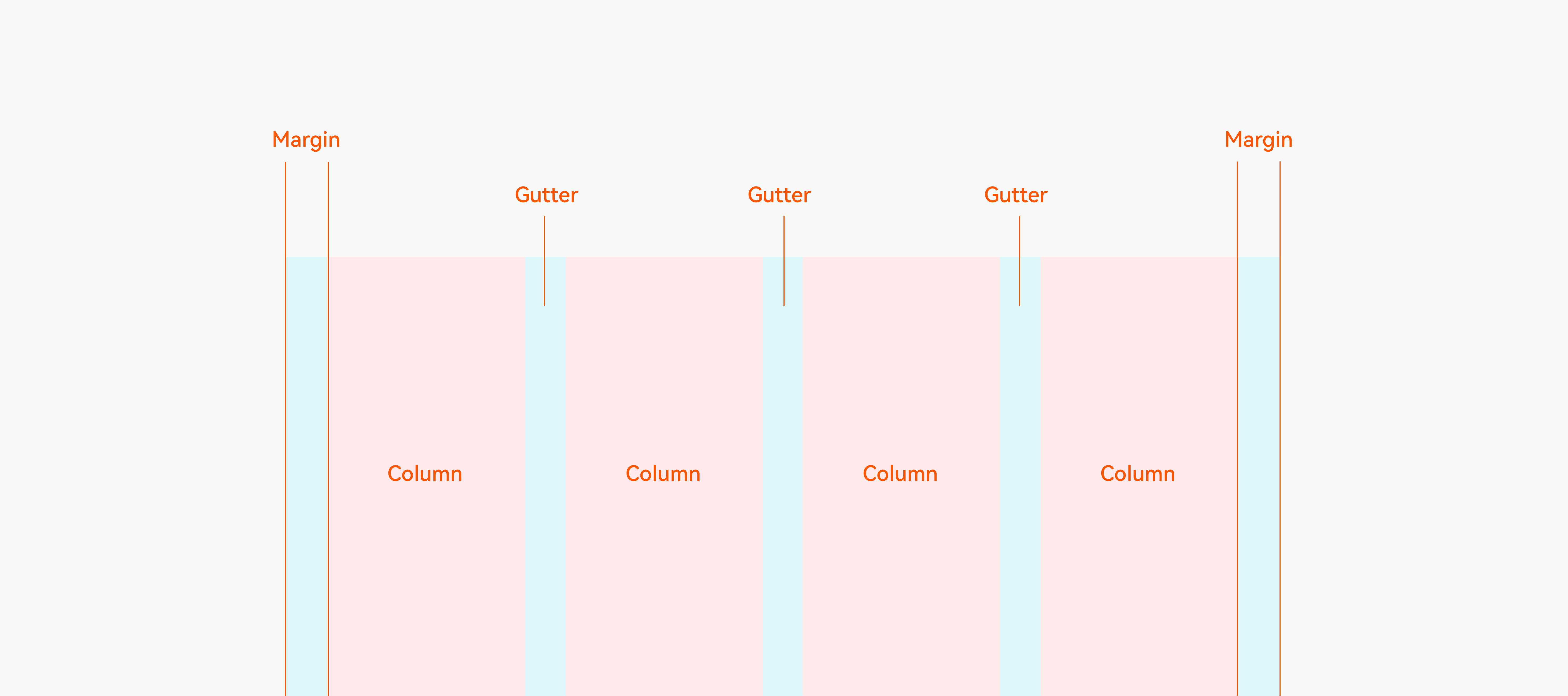

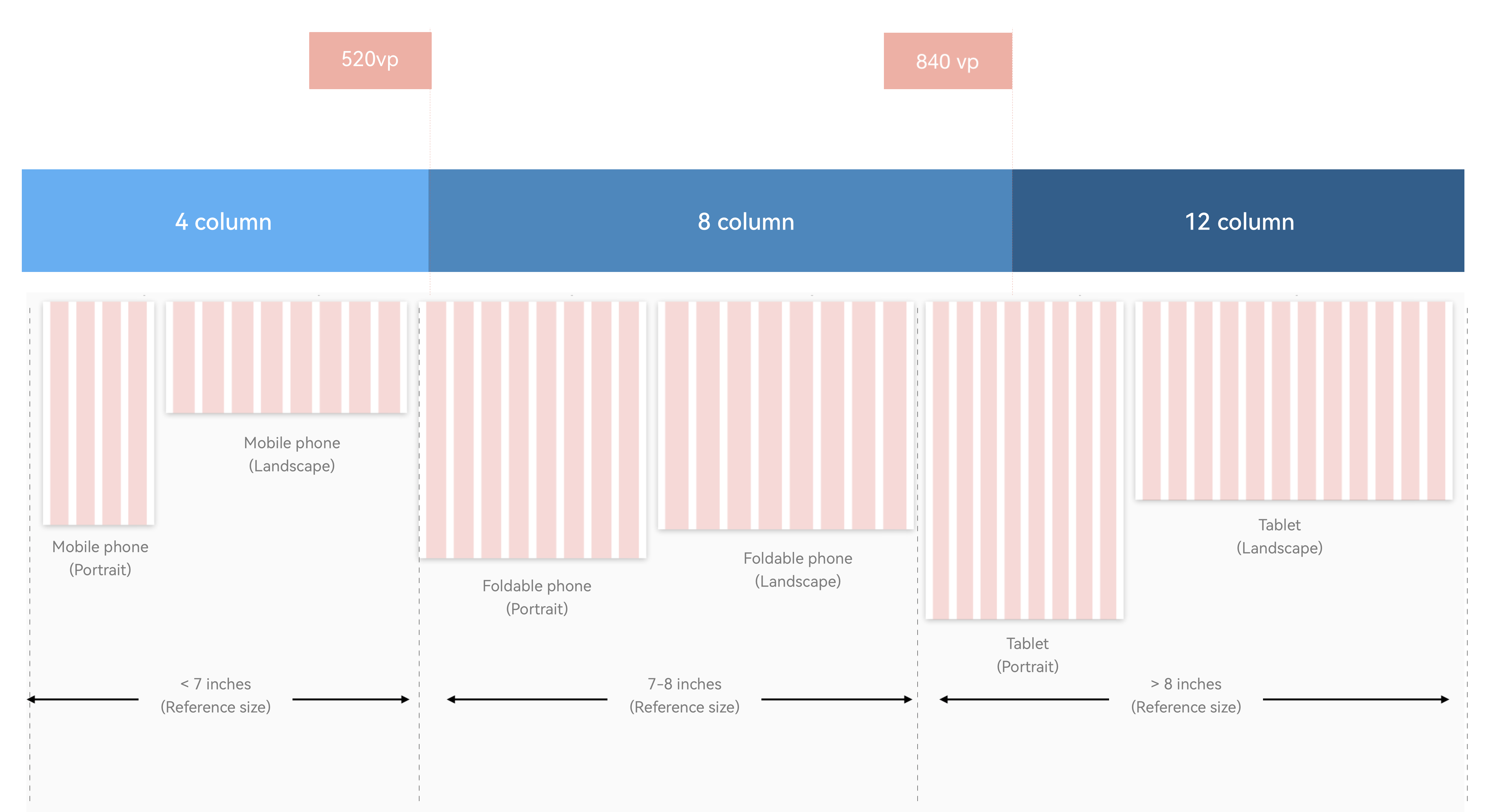

- Visual style: Understand the concepts and design rules for key visual elements, such as the basic concept, color, font, icon, illustration, and layout.

- Motions: Understand the basic motion elements (such as duration, curve, and frame rate), as well as the design methods for brand motion, transition motion, gesture motion, micro motion, and illustration motion.

- Sound: Understand the design attributes of sound and the design methods of key sound elements, such as timbre, acoustics, frequency band, and dynamic response, on different devices.

- Polymorphic controls: Understand the characteristics and display status of polymorphic controls on different devices to ensure the correct use of these controls.

- UI language: Understand how to ensure that the language style is consistent, clear, smooth, and natural to bring a good reading experience to users.

- Globalization: Understand how to provide general solutions to meet the common requirements of different countries and how to provide personalized solutions to meet the special requirements of certain countries.

- Accessibility: Understand how to enable visually impaired users to use smart devices and obtain a good information accessibility experience in color, contrast, screen reader, and other aspects.

- Privacy design: Understand how to protect user privacy and allow users to manage and control their own information.


Design Philosophy


In the era of IoE, we are exposed to different types of devices every day. Each type of device can solve specific problems in specific scenarios. While it seems that we can do a lot more things, each type of device is actually isolated when being used and the services provided are limited to the specific devices. This does not make our lives more convenient. Rather, it makes our lives more complex. HarmonyOS was introduced to solve these problems, allowing a return to simple living in a complex world and establishing a balance with everything connected.

As the Tao Te Ching says, Tao begat one; one begat two; two begat three; three begat all things. HarmonyOS is developed to build a harmonious digital world, One Harmonious Universe, for all users.


One

All things come back to their roots. With a focus on people-first design, we explore the human factors behind experience through rigorous experiments and incorporate the conclusions into the design.

HarmonyOS should behave in a way that meets the essential needs of human beings. Based on sufficient human factors research and in order to ensure a comfortable experience on multiple devices in all scenarios, the entire system offers clear and legible texts of various sizes, precise and clear icons, comfortable and coordinated colors, and smooth and vivid motion effects. In addition, the UI elements are hierarchically clear and interactive, and the important contents of the interface can be highlighted in a smart way. The system should be intuitive so that users can use it easily. Therefore, system operations must comply with human instinct and use intelligent technical capabilities to actively adapt to user habits.

Harmonious

The blending of the two results in harmony. All things have two sides: virtual and real, yin and yang, positive and negative, and so on. Two forces drive each other and balance each other to reach a state of peace.

In HarmonyOS, we want to bring a harmonious visual experience to users. We look in the physical world for counterparts to the digital world and translate them into interface design through light, shadow, and material design, in order to bring a high-quality visual experience to users. In addition, experience memory from the physical world is transferred to the virtual world: familiar impressions help users quickly understand UI elements and perform the corresponding operations.

Universe

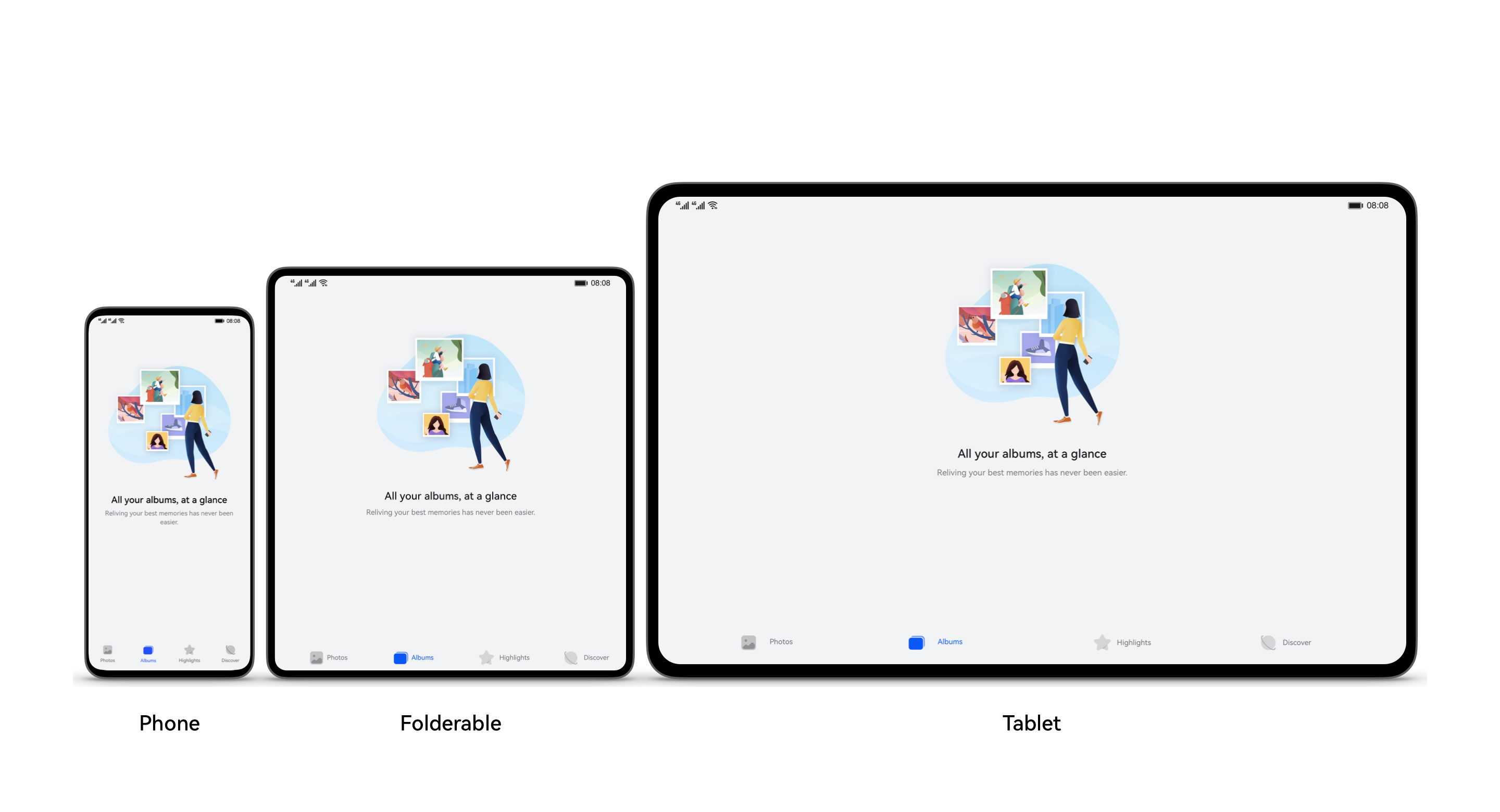

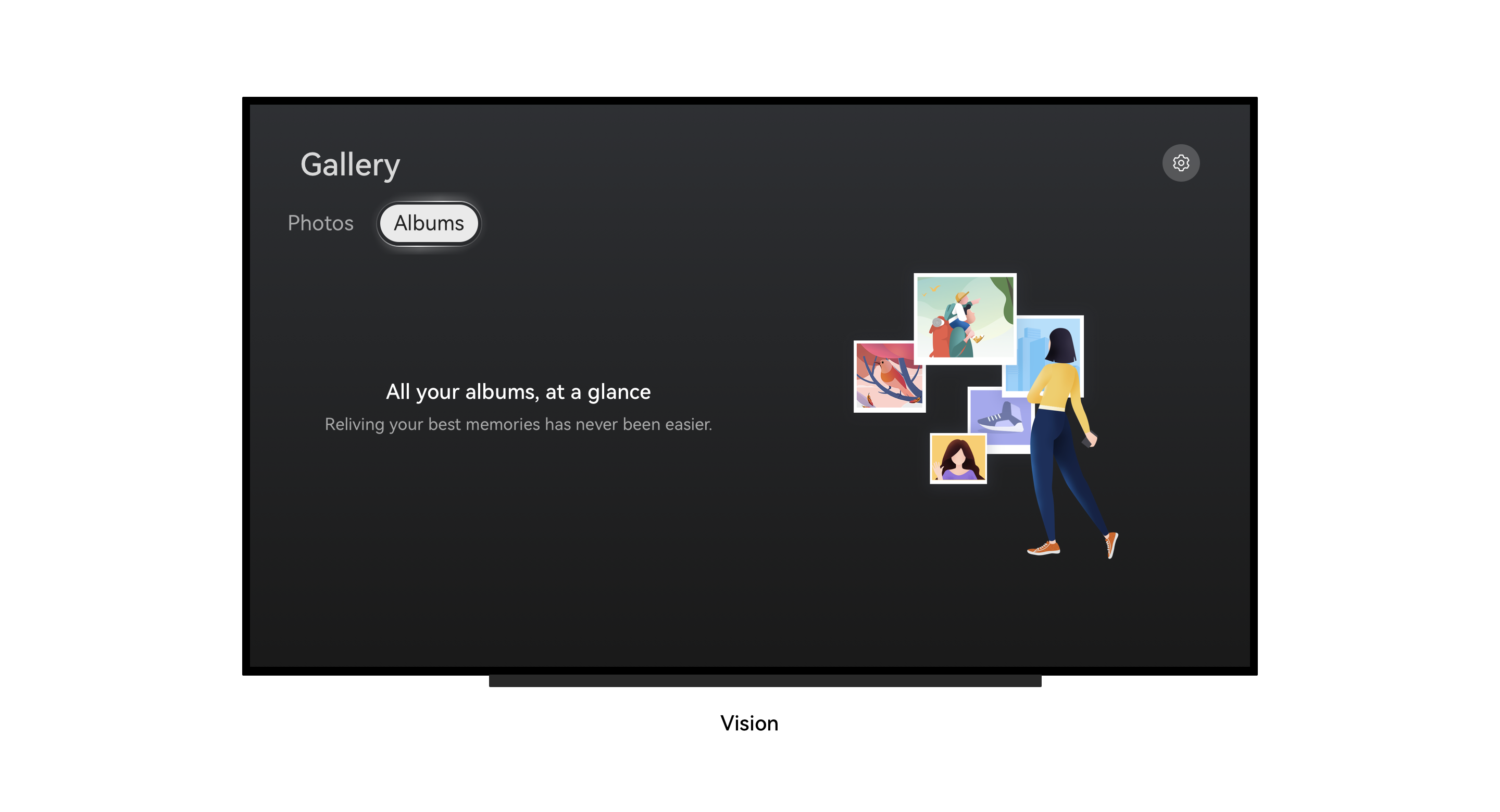

Three begat all things which would transform accordingly. HarmonyOS is an operating system oriented to a multi-device experience. Therefore, providing a comfortable and convenient multi-device operation experience for users is a core point that distinguishes HarmonyOS from other operating systems.

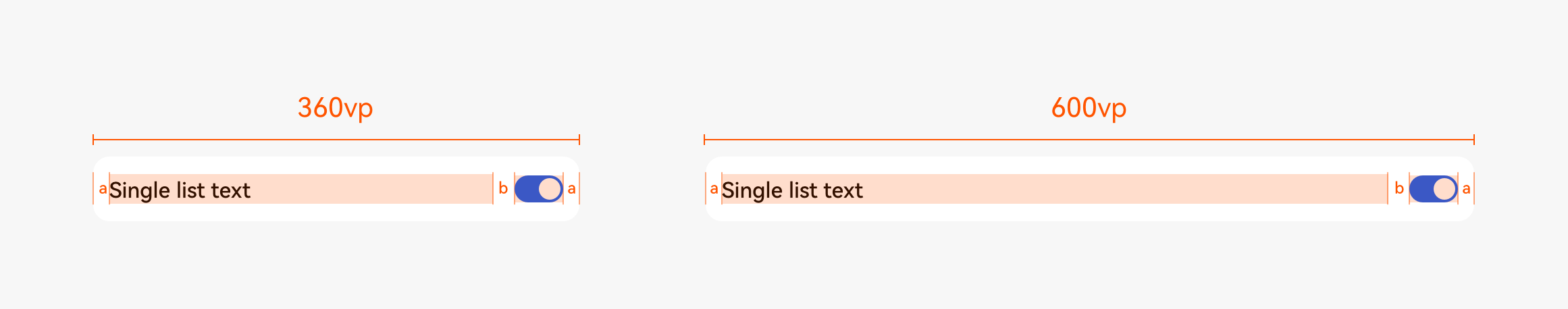

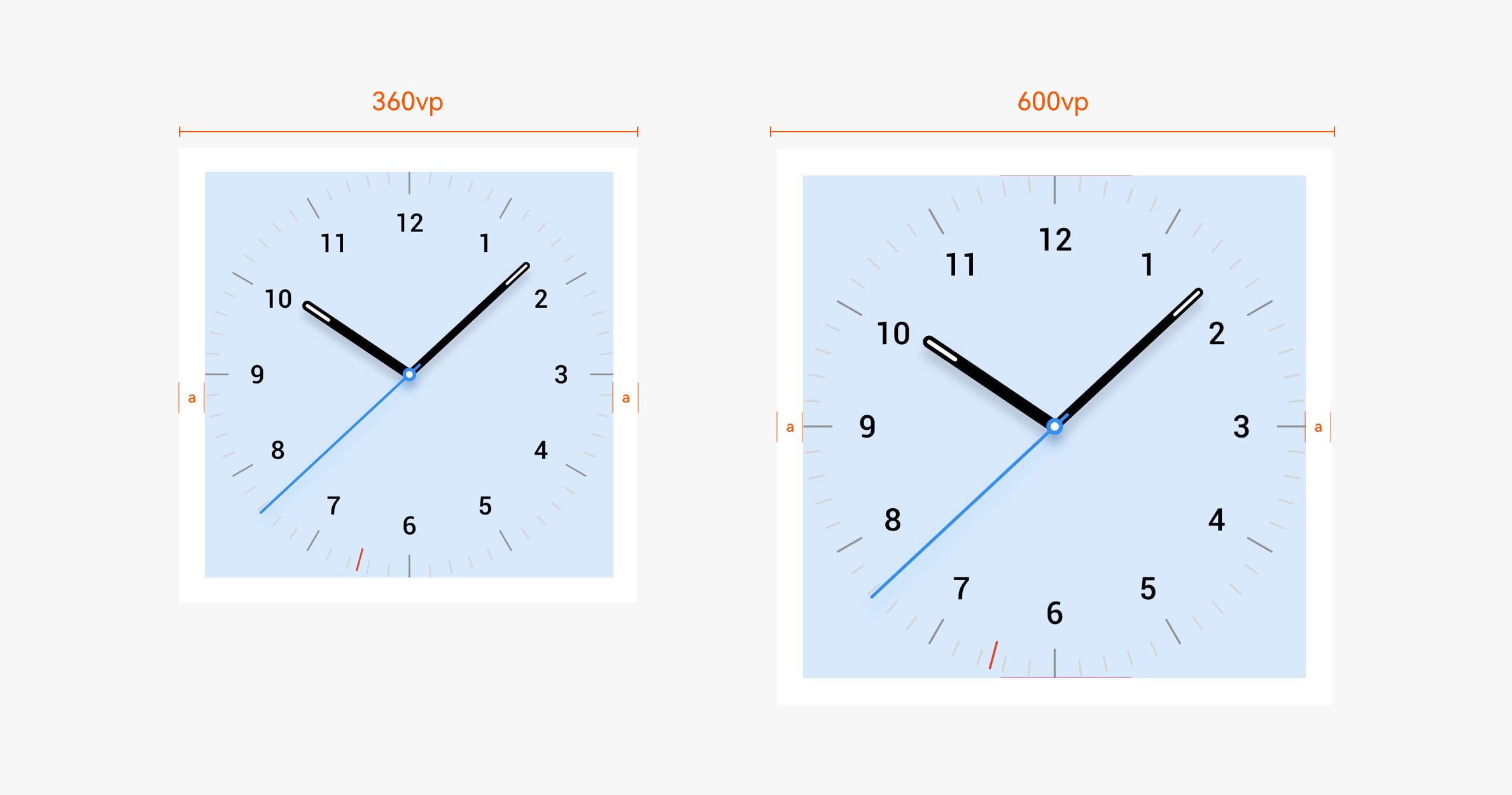

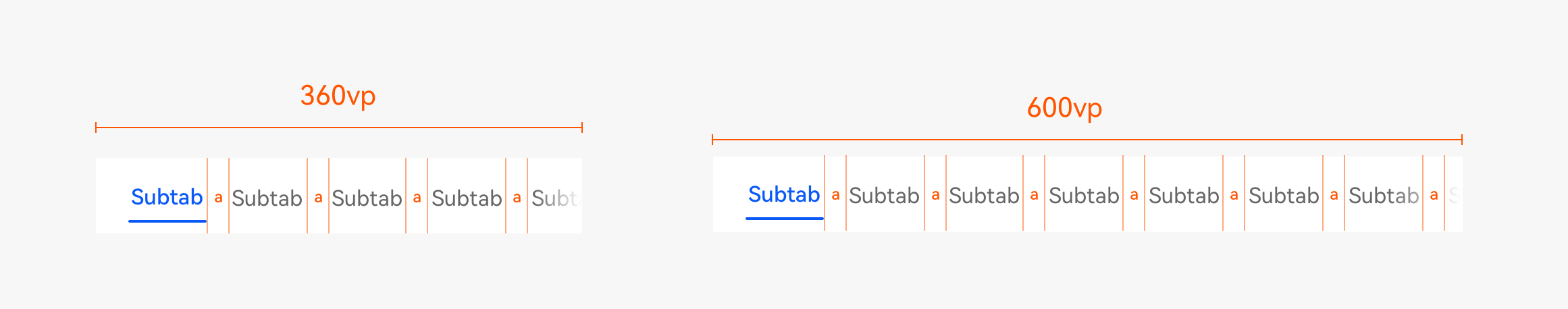

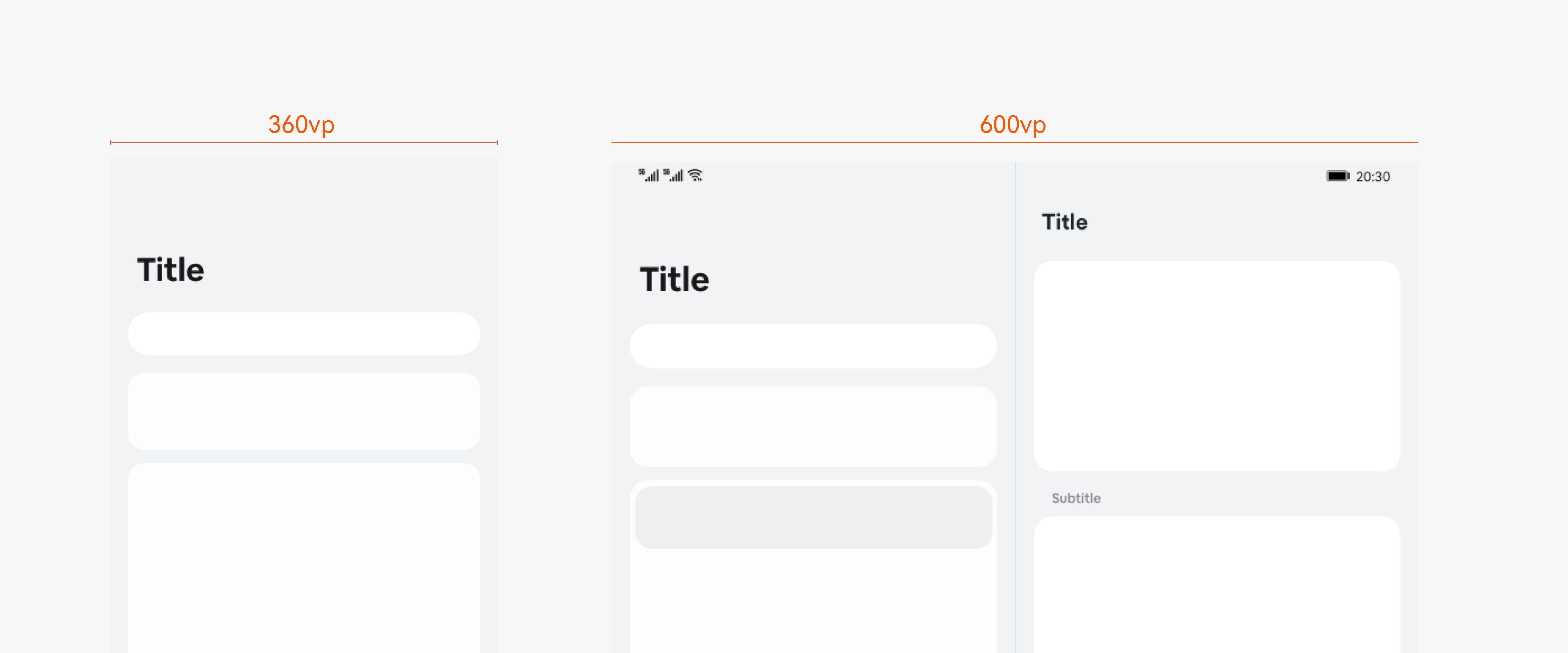

On the one hand, the interface and component design needs to adapt well, so that interfaces for screens of different sizes can be developed quickly.

On the other hand, we hope that the experience of multiple devices can achieve a good balance between consistency and difference.

- Consistency: The UI element design and interaction mode should be as consistent as possible to reduce the learning cost for users.

- Difference: Different types of devices vary in form, screen size, external hardware, and operating environment. To provide users with a proper operation experience, a differentiated design is needed for different types of devices.

Additionally, HarmonyOS is an operating system oriented to global users. To enable more users to enjoy convenient technology and a pleasant experience, active exploration and reflection will be carried out in various aspects including digital health, globalization, and accessibility.


Human Factors and Ergonomics


Human factors and ergonomics is a subject that studies the interaction between human beings and other elements of the system. This subject applies the related theories, principles, data, and methods to fulfill the goals of human health and optimal system efficiency (International Ergonomics Association, IEA 2009).

In HarmonyOS, a large amount of human factors research is carried out to provide systematic scientific guidance for UX design.

Common keywords:

- Human factors, ergonomics, and engineering psychology

Human Factors and Ergonomics Fields

There are three main research fields:

Physical Ergonomics

This field investigates the characteristics related to the physical movement of the human body, such as anatomy, anthropometry, physiology, and biomechanics. It is mainly used in domains such as operation posture, material handling, repetitive action, operational injuries, workspace design, safety, and health.

Cognitive Ergonomics

This field investigates the psychological processing in the interaction between human beings and other factors in the system, such as perception, memory, learning, and reasoning. It is mainly used in domains such as cognitive load, decision-making, experience formation, human-machine interaction, human reliability, work pressure, and skill transfer.

Organizational Ergonomics

This field examines the optimization of sociotechnical systems, including organizational structure, policies and regulations, and management processes. It is mainly used in areas such as communications, staff management, work design, work teams, community work efficiency, team collaboration, and virtual teamwork.

Development Trend of Human Factors and Ergonomics

Human factors and ergonomics began at the end of the 19th century. At first, human beings learned from practical experience how to adapt to machines. The discipline has since developed to its current stage, in which advanced and mature technologies are used to scientifically measure problems in the human-machine-environment system, with the goal of a harmonious state where human beings and machines adapt to each other.

Source: www.flaticon.com

UX Human Factors Research Framework

Human factors and ergonomics usually analyzes design problems from three aspects, namely, human, machine, and environment, and carries out the corresponding human factors experiments to promote design iteration and achieve the corresponding user experience objectives.

- Human: Consider factors, such as the basic demographic characteristics (such as age and occupation) of users, usage postures and perspectives of different devices, and occupation of cognitive resources when using devices.

- Machine: Consider the device size, device display capability (such as resolution and color gamut), interaction mode (such as touch and voice), interaction duration, and other items.

- Environment: Consider factors, such as the physical environment (such as ambient light, ambient sound, and distance) and social environment (private or public equipment).

Achievements of UX Human Factors Research

The existing UX human factors research is mainly carried out in two fields: basic UX and HarmonyOS UX. The following takes a typical case as an example.

Human Factors Research on Basic UX

Research has been carried out in fields such as GUI experience, fluency experience, interaction experience, and multi-sensory experience. Taking GUI experience as an example, the research aims to fulfill the experience goals in legibility, readability, and simplicity on multiple devices.

Human Factors Research on HarmonyOS UX

Research has been carried out mainly in fields such as all-scenario UX human factors and multi-device UX human factors. The multi-device UX human factors research covers devices such as mobile phones, foldable screens, tablets, PCs, smart TVs, wearables, headsets, and AR/VR glasses.

Taking “GUI experience research” as an example, the following expounds the human factors research methods and some research results.

Research Method

- In the research of the GUI field, the first consideration is on the use distance and field of view of users on different devices, as shown in the following figure.

- Human factors experiments are then carried out to obtain related results through comprehensive analysis of subjective experience, behavioral performance, and physiological data.

Examples of Research Achievements

Example 1: In order to meet the legibility requirements of page visual design in different ambient light environments, human factors experiments are conducted to implement contrast ratio modeling, as shown in the following figure. The result is applied to the “dark mode” design. For details about color design, see Color.
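To illustrate what a contrast ratio measures, the following TypeScript sketch computes the ratio between two colors using the publicly documented WCAG 2.x relative-luminance formula. This is an assumption made for illustration only: the contrast model derived from the HarmonyOS human factors experiments is not described here and may differ. The names `Rgb`, `relativeLuminance`, and `contrastRatio` are hypothetical.

```typescript
// Sketch only: WCAG 2.x contrast ratio, used as a stand-in because the
// source does not describe its own contrast ratio model.
type Rgb = [number, number, number]; // 8-bit sRGB channels, 0 to 255

// Convert one 8-bit sRGB channel to its linear-light value.
function channelToLinear(value: number): number {
  const s = value / 255;
  return s <= 0.03928 ? s / 12.92 : Math.pow((s + 0.055) / 1.055, 2.4);
}

// Relative luminance: 0 for black, 1 for white.
function relativeLuminance([r, g, b]: Rgb): number {
  return (
    0.2126 * channelToLinear(r) +
    0.7152 * channelToLinear(g) +
    0.0722 * channelToLinear(b)
  );
}

// Contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(a: Rgb, b: Rgb): number {
  const la = relativeLuminance(a);
  const lb = relativeLuminance(b);
  const lighter = Math.max(la, lb);
  const darker = Math.min(la, lb);
  return (lighter + 0.05) / (darker + 0.05);
}
```

A dark mode palette could be checked by calling `contrastRatio` on each text and background pair and comparing the result against whatever threshold the design specifies.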

Example 2: To ensure that the page text on the smart TV is clear and legible in ambient light and dark light environments, the following font size suggestions are provided through human factors experiments. For details about the multi-device font size design, see Font.

Knowledge Indexes

[1] Wang Chongming. Psychological Research Methods [M]. People’s Education Press, 2000.

[2] Zhu Zuxiang, Ge Lizhong, and Zhang Zhijun. Engineering Psychology [M]. People’s Education Press, 2000.

[3] Mou Shu. Engineering Psychology Notes [M]. Commercial Press, 2013.

[4] Wickens C D, Gordon S E, and Liu Y D. An Introduction to Human Factors Engineering [M]. Longman, 2004.


Navigation


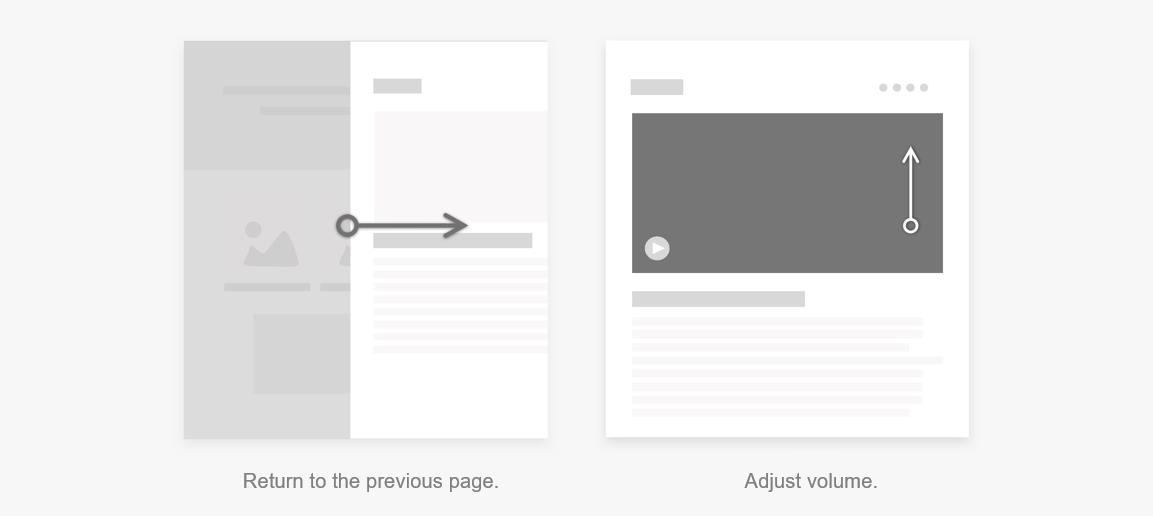

Navigation enables users to move between pages of an application to browse content. Good navigation lets users know where they are, where they came from, and where they are going.

Navigation Design Rules

Navigation design must comply with the following rules:

- Consistent: The navigation operation results should meet user expectations. The interface layout and controls that users are familiar with are used to ensure consistent navigation behavior in the same or similar scenarios. This allows users to know how to navigate on any page. For example, on a level-2 page, you can click the back button in the upper left corner to return to the upper-level page.

- Clear: Navigation should provide clear paths with simple logical relationships so that users can easily know where they are on the current interface and where they will go after an operation. For example, users can use the bottom tabs to switch between pages of the same level.

Avoid the following aspects in navigation design:

- Too many levels: A maximum of three navigation levels is recommended. Too many levels may lead to low operational efficiency. If a deeper hierarchy is required, you are advised to use breadcrumb design or add a function for returning to the home page with one click.

- Complex navigation: Combining bottom tabs with side navigation makes operations complex. If side navigation is used, you are advised to use it alone.
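The recommendation on navigation levels can be checked mechanically. The following TypeScript sketch, which assumes a hypothetical `PageNode` tree as the model of an application's pages, measures the number of navigation levels and builds a breadcrumb trail for a page. All names are illustrative and do not belong to any HarmonyOS API.

```typescript
// Hypothetical page tree used only for this sketch.
interface PageNode {
  title: string;
  children?: PageNode[];
}

// Recommended maximum number of navigation levels from the rule above.
const MAX_RECOMMENDED_LEVELS = 3;

// Number of levels in the tree rooted at `page` (a page without children counts as 1).
function navigationDepth(page: PageNode): number {
  let deepest = 0;
  for (const child of page.children || []) {
    deepest = Math.max(deepest, navigationDepth(child));
  }
  return 1 + deepest;
}

// Titles from the root down to the page titled `target`, or null if it is not found.
function breadcrumb(root: PageNode, target: string): string[] | null {
  if (root.title === target) return [root.title];
  for (const child of root.children || []) {
    const trail = breadcrumb(child, target);
    if (trail) return [root.title].concat(trail);
  }
  return null;
}

const sampleApp: PageNode = {
  title: "Home",
  children: [
    {
      title: "Settings",
      children: [{ title: "Display", children: [{ title: "Font size" }] }],
    },
    { title: "Profile" },
  ],
};

// The sample has four levels, so it exceeds the recommendation and would
// benefit from a breadcrumb or a one-click return to the home page.
const exceedsRecommendation = navigationDepth(sampleApp) > MAX_RECOMMENDED_LEVELS;
```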

Classification of Navigations

Common types of application navigations are as follows:

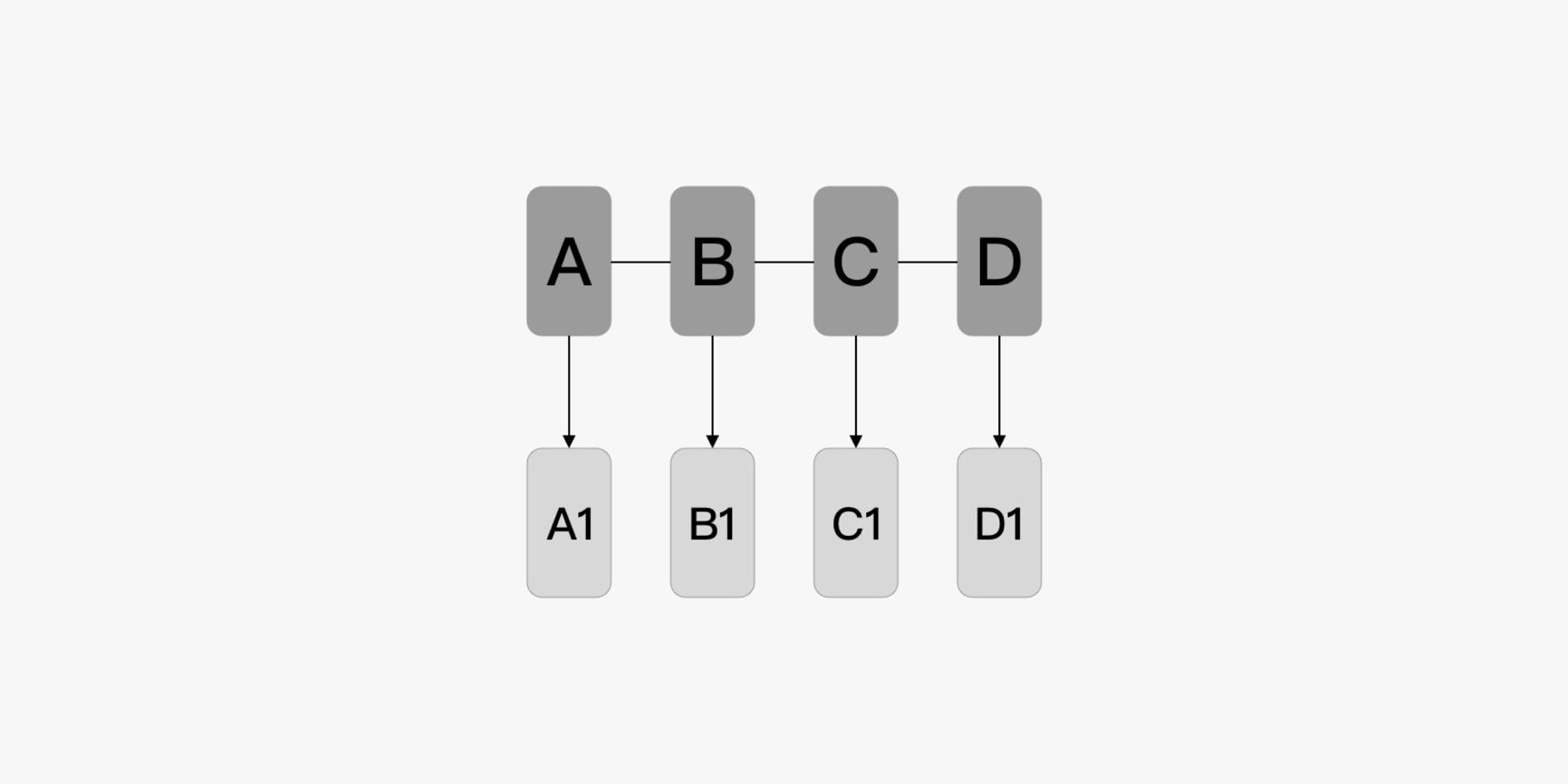

- Flat navigation

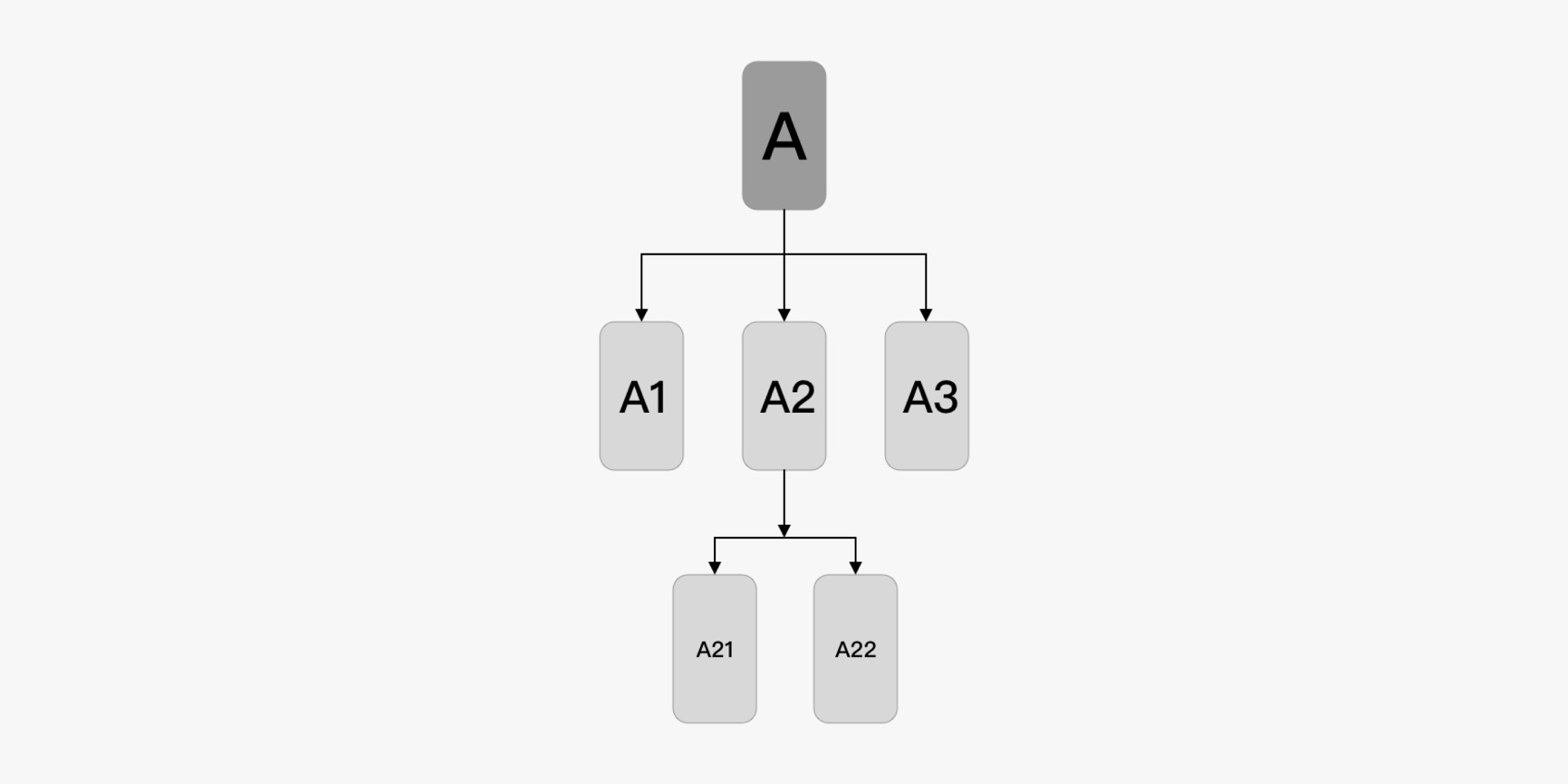

- Hierarchical navigation

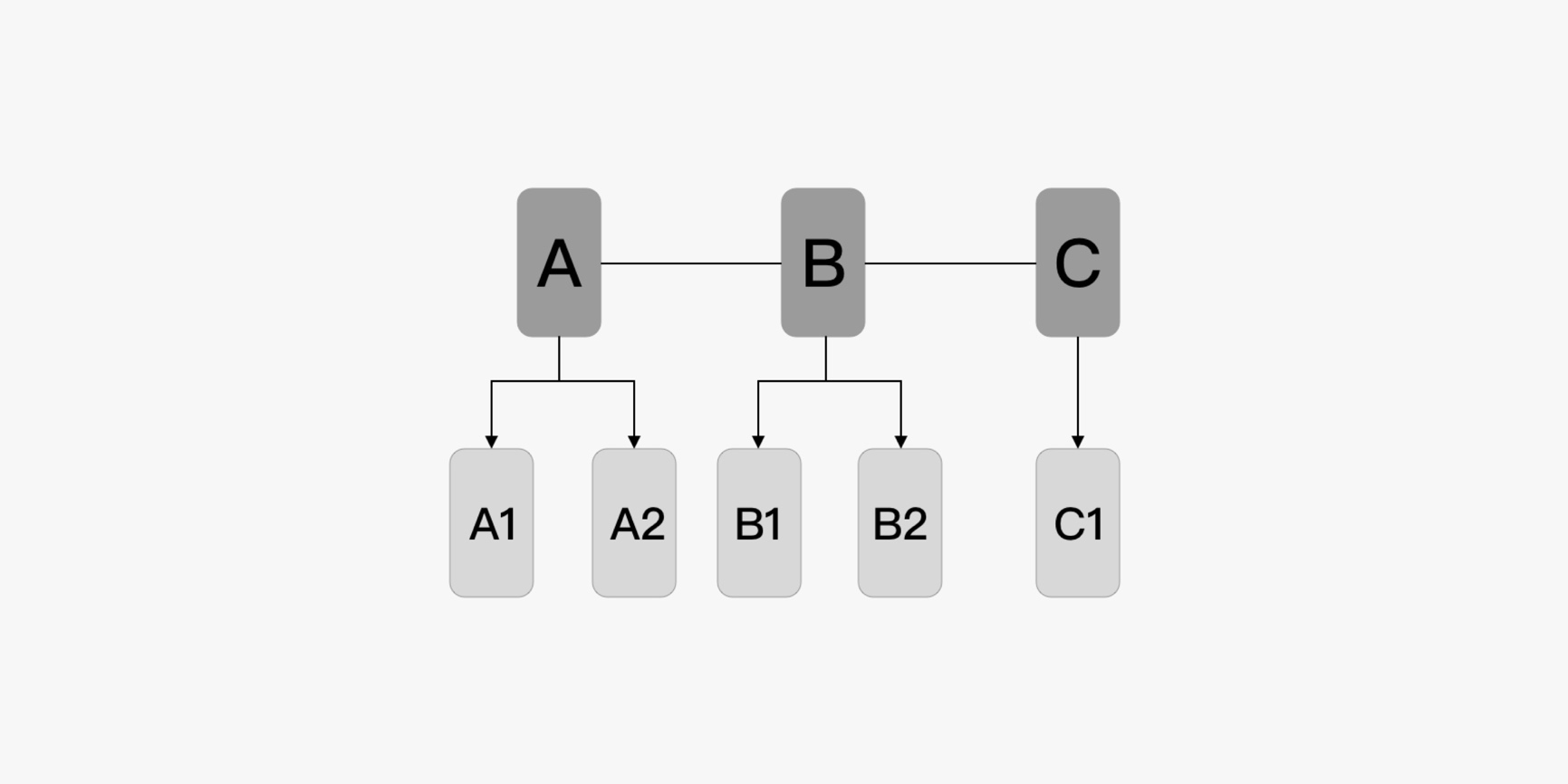

- Hybrid navigation

Flat Navigation

In flat navigation, pages are placed at the same level of hierarchy.

This type of navigation is used to display the user interfaces with pages at the same level. For example, a page consisting of tabs is in flat navigation.

Hierarchical Navigation

Hierarchical navigation consists of parent pages and child pages. A parent page can have one or more child pages. Each child page has a parent page.

Hierarchical navigation applies to complex multi-level structures. However, this navigation creates a long path for users to access deep-structured content in the application’s hierarchy, which decreases users’ access efficiency. To resolve this issue, you can employ cross-hierarchy design, such as shortcuts.

This type of navigation can be used by applications with hierarchical pages.

Hybrid Navigation

When designing an application, you can use the flat navigation for pages at the same level and the hierarchical navigation for pages with complex multi-level structures. This composite use of multiple navigation systems is known as hybrid navigation.

This navigation applies to an application that consists of several modules at the same level, with each module having a hierarchy of pages.


Common Application Page Structures


Common application page structures are as follows:

- Launch page

- Details page

- List view

- Grid view

Launch Page

For a content-oriented application, it takes a period of time to obtain the content of the application homepage. In this case, the launch page is a good choice when users are waiting for the page to load its content. The launch page can display the brand image or advertisement of the application.

- The launch page is used only for content-based applications. When a content-based application is launched, it takes some time to obtain the content. Therefore, a launch page is needed to reduce the sense of waiting for users. The launch page is not needed for applications that do not load network content.

- Do not keep users waiting for a long time. Users always expect to see the application content first. Therefore, the content needs to be displayed promptly once page loading is complete.

- Do not display the launch page when an application is loaded from the background. After the application is switched to the background and then brought back, the launch page should not be displayed again. The application needs to keep its status in the background so that users can continue to browse when necessary.
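The three rules above can be read as a single decision. The following TypeScript sketch expresses them as a predicate. The `LaunchContext` fields and the function name are hypothetical and are not taken from a HarmonyOS API.

```typescript
// Hypothetical description of the state at the moment the app comes to the foreground.
interface LaunchContext {
  loadsNetworkContent: boolean;   // content-based app that fetches its homepage content
  resumedFromBackground: boolean; // app was already running and is being brought back
  contentReady: boolean;          // homepage content has finished loading
}

// Returns true only when the launch page is appropriate under the rules above.
function shouldShowLaunchPage(ctx: LaunchContext): boolean {
  if (!ctx.loadsNetworkContent) return false;  // no network content, so nothing to wait for
  if (ctx.resumedFromBackground) return false; // never show the launch page again on resume
  return !ctx.contentReady;                    // hide it as soon as the content is ready
}
```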

Details Page

A details page displays the detailed description and operations of an application.

List View

A list view is typically used for displaying text and data.

- Data in a list should be sorted based on certain logic to facilitate user browsing and operations. For example, data can be sorted in alphabetical or time order.

- A list should be a collection of items of the same type and the data in the list should be presented in a consistent layout style. Common lists are the single-line list, double-line list, and three-line list.

- The content to be displayed in a list must be hierarchically clear so that users can focus on important information and operations at a glance.
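As a small illustration of sorting list data by a clear logic, the TypeScript sketch below orders the same items alphabetically and by time. The `ListItem` shape and its field names are assumptions made for this example.

```typescript
// Hypothetical list item: a title plus a last-updated timestamp in milliseconds.
interface ListItem {
  title: string;
  updatedAt: number;
}

// Alphabetical order by title. A copy is sorted so the input stays unchanged.
function sortAlphabetically(items: ListItem[]): ListItem[] {
  return items.slice().sort((a, b) => a.title.localeCompare(b.title));
}

// Time order, newest first.
function sortNewestFirst(items: ListItem[]): ListItem[] {
  return items.slice().sort((a, b) => b.updatedAt - a.updatedAt);
}
```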

Grid View

A grid view is typically used for displaying images and videos.

- A grid view displays items of the same importance level with a unified layout.

- A grid view primarily organizes content by image. For example, a gallery displays images in a grid view.

- Grid views can be supplemented with texts and operations. For example, in the app store, a grid is used to display application icons, brief description, and download buttons.

- Grid views should consider responsive layouts. When switching between landscape and portrait views, a grid view should be able to scale to fit the width of the page.
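One way to make a grid scale with the page width is to derive the column count from the available width, as in the following TypeScript sketch. The function name, the minimum item width, and the gap value are assumptions made for illustration. ArkUI provides its own grid and layout components, which are not shown here.

```typescript
// Compute how many columns fit in `pageWidth` and how wide each item becomes,
// given a minimum item width and a fixed gap between items (all in the same unit).
function gridLayout(
  pageWidth: number,
  minItemWidth: number,
  gap: number
): { columns: number; itemWidth: number } {
  // n items need n * minItemWidth + (n - 1) * gap, so n <= (pageWidth + gap) / (minItemWidth + gap).
  const columns = Math.max(1, Math.floor((pageWidth + gap) / (minItemWidth + gap)));
  // Share the remaining width equally so the row fills the page.
  const itemWidth = (pageWidth - gap * (columns - 1)) / columns;
  return { columns, itemWidth };
}

// Portrait and landscape widths of the same hypothetical screen.
const portrait = gridLayout(360, 100, 8);
const landscape = gridLayout(720, 100, 8);
```

With these assumed values, the portrait layout yields three columns and the landscape layout yields six, and in both cases the items and gaps together span the full page width.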


Overview


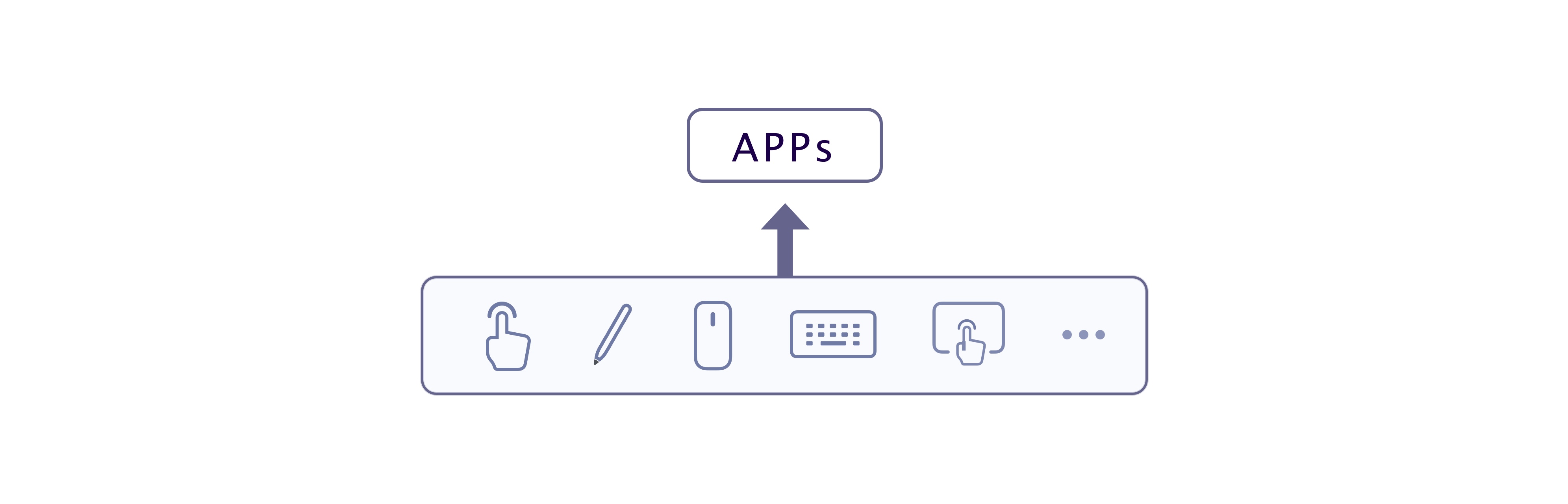

Smart devices of many types are widely available in every digital experience scenario. An interactive user interface is an essential part of devices such as smartphones, tablets, PCs, smart wearables, televisions, vehicle-mounted devices, and virtual reality (VR) and augmented reality (AR) devices. An app can run on multiple types of devices, and users may use diverse input modes to interact with the app on a device. Therefore, the user interface must be able to automatically identify and support different input modes, and allow users to interact with the app with ease.

The core principle for human-computer interaction (HCI) in all HarmonyOS scenarios is to provide the interaction modes that are supported by the input devices, to ensure a consistent user interaction experience. For example, when an app runs on a touchscreen device, a user can touch and hold with a finger to open a context menu. When an app runs on a PC, a user can right-click with a mouse to open the context menu.

There are three common interaction modes: direct interaction by tapping touchscreens with fingers or styluses; indirect interaction by using mice, touchpads, keyboards, watch crowns, remote controls, vehicle-mounted device joysticks, knobs, handles, or through air gestures; and voice interaction.

When designing and developing apps, designers and developers should consider that users may interact with apps in more than one way, and must ensure that the functions of apps are not affected in different input modes (devices). Apps must be able to respond correctly and interact with users in accordance with user habits.
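The idea that one function should be reachable through each supported input mode can be sketched as a mapping from device-specific events to device-independent actions. The TypeScript below is a minimal illustration. The `AppInput` and `UiAction` types are hypothetical, and the keyboard key names follow web `KeyboardEvent.key` conventions as an assumption rather than a HarmonyOS definition.

```typescript
// Hypothetical, simplified input events from three kinds of devices.
type AppInput =
  | { device: "touch"; gesture: "tap" | "touchAndHold" }
  | { device: "mouse"; button: "left" | "right" }
  | { device: "keyboard"; key: string };

// Device-independent actions that the app logic responds to.
type UiAction = "activate" | "openContextMenu" | "none";

function resolveAction(input: AppInput): UiAction {
  if (input.device === "touch") {
    // Touch and hold opens the context menu; a tap activates the element.
    return input.gesture === "touchAndHold" ? "openContextMenu" : "activate";
  }
  if (input.device === "mouse") {
    // Right-click is the mouse equivalent of touch and hold.
    return input.button === "right" ? "openContextMenu" : "activate";
  }
  // Keyboard: the menu key opens the context menu; Enter or Space activates.
  if (input.key === "ContextMenu") return "openContextMenu";
  if (input.key === "Enter" || input.key === " ") return "activate";
  return "none";
}
```

Because the app logic only sees `UiAction` values, the same function stays available whichever input device the user picks up.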


Touch-based Interaction


Many devices have screens that support multi-touch and allow users to interact using fingers or styluses. The touch status, number of touch points, and motion on the screen are recognized as touch gestures and operations, supporting multiple interaction functions and experiences (such as tap, swipe, zoom, and rotate). In most cases, touch-based interaction is considered the primary HCI mode. The following describes the touch gestures supported by HarmonyOS:

Basic Gestures

| Gesture | Function |
|---|---|
| Tap | Users can touch an element to trigger a function or open a page. |
| Touch and hold | Users can press and hold an element to trigger a menu or a specific mode, or enter a page. The touch-and-hold gesture is not easy to recognize, so do not use it to trigger common functions. Scenarios: (1) Display a pop-up menu, for example, touch and hold a contact in the contact list. (2) Display the shortcut menu, for example, touch and hold an icon on the screen. (3) Select multiple items, usually on a list or grid interface, for example, touch and hold on the message list interface or grid interface. (4) Enter editing or sort mode with vibration, for example, touch and hold the alarm list interface or a blank area on the screen. (5) Select text, for example, touch and hold text you have entered in a text box. (6) Enter the function details page of an icon, for example, touch and hold a function icon on the notification panel. |
| Swipe | Users can scroll a list or move the content on a screen by swiping. Scenarios: (1) Scroll a list. (2) Switch between screens in the content area by swiping horizontally. (3) Move around a map. |
| Drag | Users can move an element from one location to another. |
| Double click | Users can quickly click the mouse twice to zoom in or out on the content, select text, or trigger a specific function. |
| Pinch | Users can touch the screen with two fingers and move them apart to zoom in, or bring them together to zoom out. Scenarios: (1) Zoom in or out on a picture, for example, on the page for viewing pictures. (2) Zoom in or out on content, for example, on a browsing page or the camera viewfinder interface. |

Knock

This gesture is applicable to devices that support knuckle gestures.

- Knock firmly with your knuckle and draw a closed pattern to take a screenshot of the screen area inside the pattern.

- Knock twice firmly with your knuckle to take a screenshot.

- Knock twice on the screen with two knuckles to start or stop screen recording.


Cursor-based Interaction


When a user indirectly interacts with an app using a pointing device (such as a mouse, a touchpad, an AR/VR handle, or an air gesture), both the cursor and the object it points to must be presented in a visually appropriate way, so that users can tell that the object is interactive and can reach it accurately. In addition, the cursor must support refined operations and a hovering state (unlike finger touch) to improve app productivity, simplify interactive tasks, and optimize information display.

This section introduces specifications that are applicable to scenarios where the mouse and touchpad are used to operate the cursor. The basic design principles can be also applied to AR/VR handles and air gestures.

Cursor Form

The design of the cursor form complies with the following principles:

Function expression

When the cursor hovers over a specific interface object or area, the standard cursor form set defined by the system can be used to express the interaction status.

Apps can also customize the form of the cursor based on their specific scenarios (such as drawing and dragging).

Simplicity

To ensure the consistency of global interaction experience, the cursor form set defined by the system is preferentially used. Do not add new cursor forms if not necessary.

The custom cursor form must be as concise and direct as possible to show the operations that can be performed by users and deliver the required precision in the current interface status, and should not be too distracting.

Automatic hiding and showing

For a terminal device mainly featuring touch-based interaction, if the cursor does not move within a period of time, or the user suddenly switches from the mouse-based interaction to the touch-based interaction, the cursor is automatically hidden to ensure cleanness and neatness of an interface.
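The hiding rule can be modeled as a small piece of state, as in the TypeScript sketch below. The class name and the idle timeout are hypothetical. The source only says "a period of time", so the timeout is left as a constructor parameter.

```typescript
// Tracks whether the cursor should be drawn on a touch-first device.
class CursorVisibility {
  private visible = false;
  private lastPointerMoveMs = 0;

  // idleTimeoutMs is an assumed parameter; the source does not give a value.
  constructor(private readonly idleTimeoutMs: number) {}

  // Mouse or touchpad movement shows the cursor and restarts the idle timer.
  onPointerMove(nowMs: number): void {
    this.visible = true;
    this.lastPointerMoveMs = nowMs;
  }

  // Switching to touch input hides the cursor immediately.
  onTouch(): void {
    this.visible = false;
  }

  // The cursor is visible only if it was shown and has not been idle for too long.
  isVisible(nowMs: number): boolean {
    return this.visible && nowMs - this.lastPointerMoveMs < this.idleTimeoutMs;
  }
}
```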

Form of a Hovered Object

When the cursor hovers over an interface element, the element must be able to respond with appropriate visual display to guide users to move the focus, thereby improving the pointing accuracy.

The interface element needs to respond to the cursor hovering action to clearly express the interactivity of the element.

Users perform operations by moving the cursor to the target interface elements. Users must be able to tell whether an interface element is interactive as soon as the cursor hovers over it, to avoid the frustration of clicking an element that does not respond.

When expressing the interactivity of interface elements, consider the following design principles:

- Visibility: The interface elements in the hovering state must be more attractive than those in the common state and other interface elements.

- Subtlety: The interface elements must not be visually over-emphasized or greatly different from those in the normal state to avoid distracting users’ attention.

- Consistency: The interactive expressions (visual and animation effects) of controls of the same type must be consistent. When the application scenario is extended from tablets/PCs to TVs and AR/VR, the hovering state of controls on different devices, to some extent, must be consistent.

In the hovering state, the interface elements must express their interactive hotspots.

There may be many elements in a graphical interface, and a visible part of the graphical interface is quite different from a hotspot that can be actually used (touched) for interaction. In such a scenario, when the cursor hovers over an element, accurate expressing of an interactive hotspot helps the user locate an effective trigger region of the element, thereby improving interaction efficiency.

Hovering effect types of a UI element

HarmonyOS provides two types of hovering effects for UI elements: floating and highlight.

- Floating: When the cursor enters an object, the object is zoomed in. When the cursor leaves the object, the object size is restored. An animation can be used to present the change in the object size to give users a smooth experience. The floating effect is applicable to components that have a background, whose visible parts are similar in size to the actual hotspot, and that are not adjacent to other elements in the layout. Typical components include buttons, navigation dots, and checkboxes.

- Highlight: When the cursor enters an object, a color block appears at the bottom layer of the object. There are two types of highlight effects based on the component type:

  - For components whose visible parts differ greatly from the actual interactive hotspot: When the cursor enters a component, a light-colored rounded rectangle is added to the bottom layer of the component to better show the hotspot. This effect applies to components that have no background, such as text buttons, toolbars, and tabs.

  - For container components with obvious boundaries, such as lists, cards, and menus: When the cursor enters a component, the color of the component backplane changes.
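The selection between the two hovering effects can be summarized as a decision function. The following TypeScript sketch is one possible reading of the criteria above. The `ComponentTraits` fields and the returned labels are hypothetical names introduced for this example.

```typescript
// Hypothetical description of a component, using the criteria listed above.
interface ComponentTraits {
  hasBackground: boolean;             // the component draws its own background
  visibleAreaMatchesHotspot: boolean; // visible part is similar in size to the hotspot
  adjacentToOtherElements: boolean;   // the component touches neighbors in the layout
  isContainer: boolean;               // list, card, menu, or similar container with clear boundaries
}

type HoverEffect = "floating" | "highlight-rounded-rectangle" | "highlight-backplane";

function chooseHoverEffect(c: ComponentTraits): HoverEffect {
  // Containers with obvious boundaries change their backplane color.
  if (c.isContainer) return "highlight-backplane";
  // Floating suits stand-alone components whose visible area matches the hotspot.
  if (c.hasBackground && c.visibleAreaMatchesHotspot && !c.adjacentToOtherElements) {
    return "floating";
  }
  // Otherwise, a light rounded rectangle is drawn to reveal the hotspot.
  return "highlight-rounded-rectangle";
}
```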

Displaying Additional Information

When you move the cursor to an interface element, additional information can be displayed in the following scenarios:

- Restricted content preview: For example, when the cursor is hovered over the content list or grid in apps such as email, calendar, and memo, more content details can be displayed in a floating window for users to quickly preview and select.

- Precise location display: For example, in screenshot, design, and office apps, when the cursor hovers over some objects, values such as the location and size are displayed, facilitating refined control.

- Control function prompt: If the function of an interface element is not clear, a text prompt can be used to display detailed explanation.

Exercise caution when displaying additional information. The additional information must be necessary or must significantly improve user experience. Before displaying additional information, give priority to the clarity, conciseness, and expressiveness of the interface, so that users can easily and comfortably use the app whether they use cursor input or touch the screen directly with their fingers.

Refined Operations

An important feature of cursor-based interaction that is different from touch-based interaction is that the cursor supports refined operations. Designers can consider how to use this feature to simplify interaction tasks and improve productivity with specific cursor forms.

Cursor Movement

The display-to-control ratio of cursor movement should be optimized to improve the speed and accuracy of cursor pointing. When a user points to an object by using a cursor, there are two stages: ballistic acceleration and deceleration correction. Use the mouse as an example. In the ballistic acceleration stage, when the cursor is far away from the target, the user quickly moves the mouse to shorten the distance between the cursor and the object. In this case, speed is more important than precision control. In the deceleration correction stage, when the cursor is close to the object, the user reduces the moving speed and targets the object more carefully. In this case, precision control is more important than acceleration.
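A common way to serve both stages is a speed-dependent gain: slow device movement is mapped to small cursor movement for precision, and fast movement is amplified for reach. The TypeScript sketch below shows a simple linear ramp between two gains. The function names, gain values, and speed thresholds are assumptions chosen for illustration and are not HarmonyOS parameters.

```typescript
// Gain applied to device movement as a function of device speed (units per second).
// Below lowSpeed the gain is lowGain (precision); above highSpeed it is highGain (reach);
// in between it rises linearly.
function pointerGain(
  speed: number,
  lowGain: number = 1,
  highGain: number = 3,
  lowSpeed: number = 50,
  highSpeed: number = 500
): number {
  if (speed <= lowSpeed) return lowGain;
  if (speed >= highSpeed) return highGain;
  const t = (speed - lowSpeed) / (highSpeed - lowSpeed);
  return lowGain + t * (highGain - lowGain);
}

// Cursor displacement for a device displacement measured over dtSeconds.
function cursorDelta(deviceDelta: number, dtSeconds: number): number {
  const speed = Math.abs(deviceDelta) / dtSeconds;
  return deviceDelta * pointerGain(speed);
}
```

With these assumed defaults, a slow correction movement passes through unchanged, while a fast ballistic movement is tripled.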

Focus-based Interaction

Last updated: 2021-11-04 12:52

When a user indirectly interacts with an app by using input modes such as a keyboard, a television remote control, or a vehicle-mounted joystick/knob, focus-based interaction is an important input means.

This section describes the general design principles of focus-based interaction. For details about the focus-based interaction means for each input mode, see the specific sections of each input mode.

| Function | Operation | Tablet & PC: Standard Keyboard | TV: Remote Control | Head Unit: Knob | Head Unit: Touchpad/Arrow Keys | Head Unit: Joystick |
|---|---|---|---|---|---|---|
| Navigation Focus | Move to the next focus. | Tab | N/A | Rotate clockwise. | N/A | N/A |
| | Move to the previous focus. | Shift+Tab | N/A | Rotate counterclockwise. | N/A | N/A |
| | Move to the next focus by direction. | Left/Right/Up/Down | Arrow keys/Touchpad sliding | N/A | Arrow keys/Touchpad | Move by direction. |
| | Move to the first focus in the area. | Home | N/A | N/A | N/A | N/A |
| | Move to the last focus in the area. | End | N/A | N/A | N/A | N/A |
| | Previous/Next | Page up/Page down | N/A | N/A | N/A | N/A |
| Focus Interaction | Activate the current focus. | Space | OK button | OK button | OK button | OK button |
| | Enter the current focus. | Enter | OK button | OK button | OK button | OK button |
| | Cancel the current operation or return to the previous menu. | Esc | Back button | Back button | Back button | Back button |
| | Open the context menu of the current item. | Menu key/shortcut key combination | Menu | N/A | N/A | N/A |

Basic Principles

Built-in support

Focus movement is supported by the native attributes of controls. Designers and developers can determine whether to obtain the focus and adjust the focus sequence based on the application scenario.

Initial position

The initial focus position needs to be clear and highlighted so that users can effectively identify the position and start focus movement.

The default position of the focus is related to the interface level and content. The rules for determining the default position are as follows:

- The interface at the top level is preferred.

- Core operations are preferred. The default operation is determined based on the services provided by the app.

- Content is preferred. The content is arranged from top to bottom and from left to right.

- The focus is not displayed by default in the area where the loading is not complete.

Traversable

To complete all interaction tasks, the focus must be able to traverse all interface elements that can obtain the focus to ensure the integrity of the function.

Obtaining the focus by area to improve the focus efficiency

On the interface that consists of multiple components and can be obviously divided into several areas, the number of tab stops can be reduced to improve the focus efficiency.

Similarities and differences between tab stops and common focus (use keyboard as an example):

- Tab stop: You can press Tab to obtain the focused element. Generally, the first item of an area is the tab stop of the area.

- Common focus: The focus is not in the focus movement sequence of the tab but can be obtained by moving the arrow keys in the area.

In another typical scenario, the automobile head unit uses the knob and joystick/arrow keys to quickly move the focus. For example, you can use the joystick or arrow keys to switch between pages, levels, and blocks, and use the knob to switch between clickable elements.

Whether to enable area-based focus movement should be determined by the input modes. If the tab key or its equivalent key is not supported, the area-based focus movement cannot be enabled. In this case, only a single focus can traverse interface elements (the number of tab stops is the same as the number of focusable elements).

Focus Movement Sequence

Based on the forms of the UIs and the input modes, there are three types of focus movement scenarios: move focus in an absolute sequence; move focus in a relative direction; and move a caret.

Moving focus in an absolute sequence

The focus movement sequence is determined in advance. All the focusable elements are arranged in the sequence displayed on the screen or in the specified sequence. For example, when you use the keyboard, press Tab to select the next focus, and press Shift+Tab to select the previous focus.

When designing an app, you can reorganize the focus sequence based on rules such as function partitioning and visual presentation.

Basic principles:

- Users can move the focus only in a specified sequence.

- Two operation sequences are provided: forward and reverse (for example, Tab and Shift+Tab on the keyboard, and right and left rotation of the knob).

- Generally, the focus list is connected from the beginning to the end.
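The principles above can be sketched as a single index calculation. This is an illustrative sketch only; `nextFocusIndex` is a hypothetical helper, not a framework API. It assumes that the focusable elements are already stored in their focus sequence.

```typescript
/**
 * Returns the index of the next focusable element in a fixed sequence.
 * reverse = false corresponds to Tab (or clockwise knob rotation),
 * reverse = true corresponds to Shift+Tab (or counterclockwise rotation).
 * The list wraps around, so the end connects back to the beginning.
 */
function nextFocusIndex(current: number, count: number, reverse = false): number {
  if (count <= 0) return -1; // nothing can take the focus
  const step = reverse ? -1 : 1;
  return (current + step + count) % count;
}
```

For example, with three focusable elements, moving forward from the last element returns to the first one, and moving backward from the first element goes to the last one.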

Moving focus in a relative direction

Move focus according to the distribution of focusable elements. There are different kinds of focus movement sequences.

After a user enters the direction information, the system calculates the elements that should be focused in the specified visual direction by using an algorithm. Typical scenarios include using the arrow keys on the remote control to interact with a TV.

Shared bottom-layer navigation mechanism:

- The basic operation logic is the same regardless of whether the input mode is a keyboard, remote control, touchpad, or joystick.

- Different navigation strategies will affect a user’s choice of the next focus when pressing the down arrow key.

Direction-based and distance-based identification methods:

- Receive the input by keys from users.

- Extend the current focus edge in the specified direction.

- The first one that can be projected to the extended region is the target focus position.

- If there are multiple candidate focuses, select the one with the shortest projection distance.

- If there are still multiple candidate focuses, filter them from top to bottom and left to right.
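The identification steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not the system's actual algorithm: the `FocusRect` type and the `findNextFocus` function are hypothetical, elements are modeled as axis-aligned rectangles, and the projection distance is taken as the gap between the facing edges.

```typescript
type Direction = "left" | "right" | "up" | "down";

interface FocusRect {
  id: string;
  x: number; // left edge
  y: number; // top edge
  width: number;
  height: number;
}

/** Gap between the current element's edge and the candidate, measured along dir.
 *  Negative when the candidate does not lie fully beyond that edge. */
function distanceAlong(cur: FocusRect, c: FocusRect, dir: Direction): number {
  if (dir === "right") return c.x - (cur.x + cur.width);
  if (dir === "left") return cur.x - (c.x + c.width);
  if (dir === "down") return c.y - (cur.y + cur.height);
  return cur.y - (c.y + c.height); // "up"
}

/** True when the candidate overlaps the band obtained by extending the current element along dir. */
function inExtendedRegion(cur: FocusRect, c: FocusRect, dir: Direction): boolean {
  const horizontal = dir === "left" || dir === "right";
  return horizontal
    ? c.y < cur.y + cur.height && c.y + c.height > cur.y
    : c.x < cur.x + cur.width && c.x + c.width > cur.x;
}

/** Picks the next focus: nearest candidate in the extended region,
 *  with ties resolved from top to bottom and then from left to right. */
function findNextFocus(
  cur: FocusRect,
  candidates: FocusRect[],
  dir: Direction
): FocusRect | undefined {
  const scored = candidates
    .filter((c) => c.id !== cur.id && inExtendedRegion(cur, c, dir))
    .map((c) => ({ rect: c, distance: distanceAlong(cur, c, dir) }))
    .filter((s) => s.distance >= 0);
  scored.sort(
    (a, b) => a.distance - b.distance || a.rect.y - b.rect.y || a.rect.x - b.rect.x
  );
  return scored[0]?.rect;
}
```

When no candidate lies in the extended region, the function returns `undefined`, which corresponds to the focus staying where it is.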

Combining the relative sequential focus movement with the absolute sequential focus movement

This type of navigation design has the following two purposes:

- Improve the operation efficiency. Absolute sequential focus movement can traverse all interactive controls. The efficiency is low when there are a large number of elements.

- Make the focus movement sequence more logical. Partitions are organized based on functions, and the logical sequence is matched with the visual partition, making it easier for users to learn.

Optimization strategy 1:

Navigation using arrow keys is supported in the relative direction area. As shown in the preceding figure, press the right arrow key to move the focus from B to C, and press the left arrow key to move it from C to B. The arrow keys can be used only within the current area and cannot be used to exit the area. For example, when the focus is on C, the system does not respond if you press the right arrow key.

Optimization strategy 2:

A default tab sequence is specified for each area. You can press Tab to quickly switch between areas. For example, if TabIndex of area A is set to 1, TabIndex of area B to 2, and TabIndex of area D to 3, the focus movement sequence is A > B > D. Instead of traversing all elements with Tab, you can use the arrow keys to traverse the elements within each area, which is a more efficient combined focus movement mode.
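The two optimization strategies can be combined in a small model, sketched below. All names (`FocusArea`, `FocusState`, `sortByTabIndex`, `onTab`, `onArrow`) are hypothetical and only illustrate the idea: Tab jumps between areas in TabIndex order and lands on the first item of an area, while arrow keys move inside the current area and never leave it.

```typescript
interface FocusArea {
  name: string;
  tabIndex: number;
  items: string[]; // focusable items in the area, in arrow-key order
}

interface FocusState {
  area: number; // index into the areas sorted by tabIndex
  item: number; // index into the current area's items
}

/** Orders areas by their tab index, for example A (1) > B (2) > D (3). */
function sortByTabIndex(areas: FocusArea[]): FocusArea[] {
  return [...areas].sort((x, y) => x.tabIndex - y.tabIndex);
}

/** Tab / Shift+Tab: jump to the tab stop (first item) of the next or previous area. */
function onTab(state: FocusState, areaCount: number, reverse = false): FocusState {
  const step = reverse ? -1 : 1;
  return { area: (state.area + step + areaCount) % areaCount, item: 0 };
}

/** Arrow key: move within the current area; do nothing at the area boundary. */
function onArrow(state: FocusState, area: FocusArea, step: -1 | 1): FocusState {
  const target = state.item + step;
  if (target < 0 || target >= area.items.length) return state;
  return { area: state.area, item: target };
}
```

In this model, the number of Tab presses needed to reach an area depends on the number of areas rather than on the total number of focusable elements.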

Moving a caret

When processing text, carets are often used to guide operations, and arrow keys are used to move and select content in text processing apps, table apps, or controls. Carets are usually used together with common text operation shortcut keys.

Other precautions

For a system that supports touch-based interaction or cursor-based interaction, the focus is displayed only when the system detects that the user is using a specific input mode to move the focus, to avoid visual interference.

In the focus movement operation state, if it is detected that the user switches the input mode to the touch-based interaction or the cursor-based interaction, the visual display of the focus on the interface should be automatically hidden.
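These two precautions amount to tracking the most recent input mode. The sketch below is illustrative only: the `InputMode` names and the `FocusIndicator` class are assumptions, and a real app would feed it from its own input event handlers.

```typescript
type InputMode = "touch" | "cursor" | "keyboard" | "remote" | "knob" | "joystick";

// Input modes that move the focus and therefore need a visible focus indicator.
const FOCUS_MODES: ReadonlySet<InputMode> = new Set<InputMode>([
  "keyboard",
  "remote",
  "knob",
  "joystick",
]);

class FocusIndicator {
  private visible = false; // hidden until a focus-moving input mode is detected

  /** Call on every input event with the mode that produced it. */
  onInput(mode: InputMode): void {
    this.visible = FOCUS_MODES.has(mode);
  }

  isVisible(): boolean {
    return this.visible;
  }
}
```

The focus position itself does not need to be discarded when the indicator is hidden, so the indicator can reappear at the same element when the user returns to a focus-moving input mode.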

Mouse

Last updated: 2021-11-04 12:52

A mouse is a typical cursor-based pointing input mode with pixel-level precision. It is most suitable for productivity apps and high-density UI scenarios that require high precision for user interaction.

Generally, a mouse is composed of a left button, a right button, and a scroll wheel. These buttons should provide standard industry functions and comply with user habits. The mouse can also be used together with keyboard keys to provide users with additional shortcut operations. System-level mouse interactions are described in the following table:

| Operation | Expected Function |

|---|---|

| Move | The cursor can be moved, and displayed and expressed in the hovering state on different interface elements. For details, see Cursor-based Interaction. |

| Left click | The main function of an item can be selected or started (for example, click a button to start an app or run a command). |

| Right click | The context menu can be displayed (corresponding to touch-and-hold on the touchscreen). |

| Slide the scroll wheel | The page content can be moved by sliding up, down, left, and right. |

| Left click and move | Objects in a certain area can be dragged and selected. |

| Hold down Ctrl and slide the scroll wheel | Objects can be zoomed in or out. |

Similarities and Differences Between the Mouse Interaction and Touch Interaction

In many scenarios, an app needs to support both touch interaction and mouse interaction.

Finger touch interaction simulates the capability of directly operating interface objects through physical gestures (such as slide and rotate). Due to the nature of indirect interaction and the feature of only one cursor response location, the mouse interaction is not completely equal to the touch interaction in some tasks.

- When selecting or launching an object, a left-click with a mouse is considered equal to a finger-touch on a touchscreen.

- In other scenarios, apps need to be optimized to adapt to mouse interaction to improve productivity and ensure user experience consistency. For example, the context menu should be quickly triggered by right-clicking rather than by a long press of the left button or a long finger press on the touchscreen. When you drag an object, you can click the object to drag it without pressing and holding it. Use the scroll wheel to slide or switch the pages, instead of moving the mouse in a certain direction after pressing the left button (this is an inefficient and uncontrollable operation for some users).

For details about the mapping and switching between touch interaction and mouse interaction in specific scenarios, see Interaction Events Unification.

For details about the hovering status of the cursor and interface objects, see Cursor-based Interaction.

Touchpad

Last updated: 2021-11-04 12:52

A touchpad has both a multi-finger touch gesture input (touchscreen) feature and a refined pointing input (mouse) feature. Therefore, the touchpad is not only applicable to user interfaces that are optimized based on touch interaction, but also applicable to productivity apps that have relatively high requirements on pointing precision.

When designing or adapting the touchpad interaction for your apps, comply with user habits (when using a touchpad, users look at the screen instead of the touchpad) and follow these principles:

- The touchpad can replace the mouse.

- Operations can be performed by corresponding gestures on the touchscreen.

| Operation | Expected Function |

|---|---|

| Tap with a finger and move | The cursor can be moved, and displayed and expressed in the hovering state on different interface elements. For details, see Cursor-based Interaction. |

| Tap with one finger | The main function of an item can be selected or started (for example, click a button to start an app or run a command). |

| Touch with two fingers | The context menu can be displayed (corresponding to touch-and-hold on the touchscreen and right-click with a mouse). |

| Tap with two fingers and move | The page content can be moved in a specified direction (corresponding to the single-finger-slide on the touchscreen). |

| Press with one finger and move | Objects in a certain area can be dragged and selected. |

| Move two fingers apart or pinch | Objects can be zoomed in or out. |

| Rotate with two fingers | Objects can be rotated. |

| Operate with multiple fingers | System navigation |

For details about the mapping and switching between touch interaction and touchpad interaction in specific scenarios, see Interaction Events Unification.

For details about the hovering status of the cursor and interface objects, see Cursor-based Interaction.

Keyboard

Last updated: 2021-11-04 12:52

A keyboard is an important productivity input mode. An excellent keyboard experience allows users to quickly and accurately enter text, navigate within the system and apps without leaving the keyboard, access all functions, and benefit from accessibility support.

Focus Navigation

For details about the design principles of the focus navigation and interaction mode, focus division, and focus moving sequence, see Focus-based Interaction.

Basic and Standard Shortcut Keys

Necessary shortcut keys should be provided based on application scenarios (for example, shortcut keys for navigation as well as cutting, copying, and pasting). The basic design principles are as follows:

- Traditional PCs are used as the compatible objects, which can be tailored based on device conditions and application scenarios. Traditional PCs have the largest user base, and the user habits are hard to change. However, the shortcut key combinations that are too complex, seldom used, or not supported by the devices should be removed.

- New shortcut keys are supported. A direct entry is added for Huawei-specific apps or functions (such as split-screen and multi-terminal).

- Shortcut keys are added for touchscreen gestures on the integrated PC to improve productivity (for example, opening the notification bar, control center, and displaying/hiding the dock).

| Type | Category | Operation Description | Shortcut Key |
|---|---|---|---|
| Global shortcut keys | System functions | Home/Display desktop. | LOGO+D / LOGO+H |
| | | Back/Go to the upper level. | Esc / LOGO+Backspace |
| | | Recent/Switch the task window. | LOGO+Tab / Alt+Tab |
| | | Open the notification center. | LOGO+N |
| | | Lock screen. | LOGO+L |
| | | Search. | LOGO+S / Ctrl+F |
| | | Switch between languages/input methods. | LOGO+Space |
| | | Take a screenshot. | LOGO+Shift+S |
| | | Switch to multi-window. | LOGO+X |
| | | Switch between full-screen and multi-window modes. | LOGO+W |
| | Keypad focus moving | Move the focus to the next or previous focus control. | Tab / Shift+Tab |
| | | Move the focus or cursor in a specified direction. | Arrow keys |
| | | Switch the status of the selected check box, radio button, or switch control. | Space |
| | | Activate the focused button or menu item. | Enter |
| | Common operations | Cut the selected content to the clipboard. | Ctrl+X |
| | | Copy the selected content to the clipboard. | Ctrl+C |
| | | Paste the content on the clipboard to the current position. | Ctrl+V |
| | | Undo the last operation. | Ctrl+Z |
| | | Select all items in the current focus control or window. | Ctrl+A |
| | | Open the context menu of the selected item/Activate multiple selections. | LOGO+Shift+C |
| Advanced operations | Format | Bold | Ctrl+B |
| | | Underline | Ctrl+U |
| | | Italics | Ctrl+I |
| | System functions | Close the current focus window. | Alt+F4 / Ctrl+W |
| | | Search. | Ctrl+F |
| | | Display the context menu of the selected objects. | Shift+F10 / Menu |
| | | Print. | Ctrl+P |
| | Web browser | Select and focus on the address bar. | Ctrl+L / Alt+D |
| | | Refresh. | Fn+F5 / Ctrl+R |
| | Tab-related operations | Create a tab. | Ctrl+T |
| | | Close the current tab. | Ctrl+W |
| | Common operations | Redo the last operation. | Ctrl+Y |
| | | Perform special pasting. | Ctrl+Shift+V |
| High-level operations | System functions | Create a folder. | Ctrl+Shift+N |
| | | Open the app menu. | LOGO |
| | | Log out of the current user. | Ctrl+Shift+Q |
| | | Open the task manager. | LOGO+Esc |
| | | Rename the selected object. | F2 / Enter |
| | Text editing | Delete the phrase to the left of the cursor. | Ctrl+Backspace |
| | | Go to the head of the line. | Home / Fn+← |
| | | Go to the end of the line. | End / Fn+→ |
| | | Go to the beginning of the document. | Ctrl+Home / Ctrl+↑ |
| | | Go to the end of the document. | Ctrl+End / Ctrl+↓ |
| | | Go to the previous word. | Ctrl+← |
| | | Go to the next word. | Ctrl+→ |
| | | Go to the previous line. | ↑ |
| | | Go to the next line. | ↓ |
| | | Go to the next result. | F3 / Ctrl+G |
| | | Go to the previous result. | Shift+F3 / Shift+Ctrl+G |
| | Resource manager | Go to the address bar. | Ctrl+L / Alt+D |
| | | Go to the previous position in history. | Alt+← |
| | | Go to the next position in history. | Alt+→ |
| | | Go to the start page (defined by the user or app). | Alt+Home |
| | Web browser | Add “www.” and “.com” to the URL. | Ctrl+Enter |
| | | Add the current page as a bookmark. | Ctrl+D |
| | | Select and focus on the search bar. | Ctrl+E / Ctrl+K |
| | | Refresh (ignore cache). | Ctrl+F5 / Ctrl+Shift+R |
| | Tab-related operations | Go to the next tab. | Ctrl+Tab |
| | | Go to the previous tab. | Ctrl+Shift+Tab |
| | | Go to the nth tab. | Ctrl+n (n indicates the tab position. The maximum value is 9.) |
| | | Reopen the closed tab page. | Ctrl+Shift+T |
| | Window operations | Close all windows of the current app. | Alt+Shift+F4 or Ctrl+Shift+W |
| | | Exit the app in the current window. | Ctrl+Q |
| | | Open an app on the dock. | LOGO+# (# indicates the position of the program in the Dock. The maximum value is 9.) |
| | Controls and widgets | Display the tool prompt of the current control. | Shift+F1 |
| | | Display help information about the current control or window. | Shift+F1 |
| | | Move the focus to the next or previous pane. | Ctrl+F6 / Alt+F6 |
| | | Move the focus to the menu bar of the window. | F10 / Alt |
| | | Select/Move to the first/last item in the selected widget. | Home/End |
| | Accessibility | Allow users to enter one key at a time when using shortcut keys. | Press Shift for five consecutive times. |
| | | Stop or slow down the speed of repeated characters when a key is pressed. | Press and hold the Shift key on the right for 8 seconds. |

Customizing Shortcut Keys

In some scenarios, users may be more accustomed to using the keyboard to access the system and app functions. For example, in productivity software, keyboard shortcuts are used to improve efficiency, and some people with disabilities prefer to use the keyboard for interaction. Therefore, ensure that the core functions of the app can be accessed through the keyboard.

- Users can use keyboard shortcuts to access functions in menus: Keyboard shortcuts are often associated with functions in app menus. Users can use keyboard shortcuts to access these functions. For example, you can use shortcut keys to select all, delete, copy, and share photos in Gallery.

- Keyboard shortcuts for other core functions in the app are provided: Some common functions in the app do not have corresponding menu items and require corresponding shortcut keys. These functions usually correspond to direct operations on controls. For example, on a chat page, the Enter key should be associated with the clicking of the send button. In this way, a user can press Enter to send a message after entering the message content in the text box.

- Compatible with standard system shortcut keys: In all apps, the design of shortcut keys for standard functions must be consistent with the definitions of standard system shortcut keys. On the other hand, avoid redefining the functions of standard shortcut keys in apps. Otherwise, users may be confused.
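The compatibility rule in the last item can be enforced when custom shortcuts are registered. The following is a minimal sketch, not a platform API: `normalizeCombo`, `ShortcutRegistry`, and the action names are hypothetical, and only a handful of the standard shortcuts listed in the table above are included.

```typescript
/** Normalizes "Shift + ctrl+E" to "ctrl+shift+e" so that equivalent combinations compare equal.
 *  A literal "+" key is not handled in this sketch. */
function normalizeCombo(combo: string): string {
  const parts = combo
    .split("+")
    .map((p) => p.trim().toLowerCase())
    .filter((p) => p.length > 0);
  const key = parts.pop() ?? "";
  return [...parts.sort(), key].join("+");
}

// A few standard shortcuts from the table above; action names are illustrative.
const standardEntries: Array<[string, string]> = [
  ["Ctrl+X", "cut"],
  ["Ctrl+C", "copy"],
  ["Ctrl+V", "paste"],
  ["Ctrl+Z", "undo"],
  ["Ctrl+A", "selectAll"],
];
const STANDARD_SHORTCUTS = new Map<string, string>();
for (const [combo, action] of standardEntries) {
  STANDARD_SHORTCUTS.set(normalizeCombo(combo), action);
}

class ShortcutRegistry {
  private readonly custom = new Map<string, string>();

  /** Registers a shortcut; refuses to redefine a standard shortcut with a different action. */
  register(combo: string, action: string): boolean {
    const key = normalizeCombo(combo);
    const standard = STANDARD_SHORTCUTS.get(key);
    if (standard !== undefined && standard !== action) return false;
    this.custom.set(key, action);
    return true;
  }

  /** Returns the action bound to a combination, falling back to the standard definition. */
  resolve(combo: string): string | undefined {
    const key = normalizeCombo(combo);
    return this.custom.get(key) ?? STANDARD_SHORTCUTS.get(key);
  }
}
```

For example, a chat page could register `Enter` for a hypothetical `sendMessage` action, while an attempt to bind `Ctrl+C` to anything other than copying is rejected.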

Stylus

Last updated: 2021-11-04 12:52

On the touchscreen, the stylus is an extension of fine finger operations and is a pointing device with pixel-level precision. The stylus provides a direct, natural way to write, draw, and annotate digital content. Currently, there are two types of styluses: with physical buttons and without physical buttons. Consistent system-level interaction is supported for both types.

With Physical Buttons

| Operation | Expected Function |

|---|---|

| Press and hold the function key to draw an “S” shape | Takes long screenshots. |

| Press and hold the function key to draw a closed figure | Takes a partial screenshot. |

| Press and hold the function key while clicking the screen | Opens the Memo when tapping the stylus against the screen while your device is asleep. |

| Press and hold the function button and the screen | Intelligently identifies the content on the screen. |

| Press and hold the function key to draw a straight line (in the middle of the screen) | Enables the split-screen function. |

Without Physical Buttons

| Operation | Expected Function |

|---|---|

| Tap the screen with the stylus | Quickly opens a note when the screen is asleep. (This function can be used only when the double-tap to turn on the screen function is enabled.) |

Opening Hand-Drawing Suite

Pencil Engine integrates the handwriting effect capability as a plug-in to create more handwriting application scenarios for app developers. Multiple types of brushes (ball pen, pen, pencil, and marker) and a multi-tone color palette are supported. You can set the brush color and thickness, and use undo, redo, erase, lasso, and other handwriting editing capabilities. With multiple brushes, the app can meet users’ daily requirements for notes, drawings, and graffiti, achieving a good writing experience.

Application scenario: Pencil Engine can be quickly integrated with apps. You can insert a handwriting entry (for example, adding a drawing board or annotation) into an app, access Pencil Engine to edit a handwriting painting, and save as well as re-edit the drawing.

Air Gestures

Last updated: 2021-11-04 12:52

Air gestures (non-touch gestures) are a new manner of interaction between a person and a device. They allow a user to conveniently interact with devices without holding or touching them. Air gestures apply to devices such as mobile phones, tablets, automobile head units, smart TVs, speakers, and AR/VR devices. An air gesture is usually a simple action that conforms to the intuition and cultural habits of a user.

Design Principles

Using metaphors to establish relationships

Establish a relationship between a function and a gesture that convey a similar meaning so that users can better accept and remember the gesture.

Extending existing interaction habits

Extend existing interaction habits of users to generate new interaction methods that are easy to learn and remember.

Respecting the cultures of different countries/regions and avoiding taboos

Avoid gesture taboos in any of your target markets. Gesture functions should be consistent with users’ expectations so that the gestures are easy for users to accept.

Avoiding exhausting gestures

Avoid time-consuming and refined gestures. A prolonged hover makes a user feel tired. The fatigue caused by a gesture increases in the following order: palm gesture, forearm gesture, and upper-arm gesture.

Common Air Gestures

Air gestures are generally used to access frequently used functions. The following table lists the most frequently used HarmonyOS functions and their air gestures.

| Operation | Description | Legend |

|---|---|---|

| Swiping left or right | Function: Swipe left or right to switch between pages, music, or pictures. Gesture: Swipe left or right over the screen with your palm or back of the hand. |  |

| Swiping up or down | Function: Swipe up or down to switch content. Gesture: Swipe up or down over the screen with your palm or back of the hand. |  |

| Taking a screenshot | Function: Take a screenshot of the entire screen. Gesture: Make a fist straight in front of the screen. |  |

| Mute | Function: Mute or unmute the system volume. Gesture: Face the screen and place the forefinger near the middle of the lip. |  |

| Performing an operation | Function: Perform an operation in a certain scenario, such as playing/pausing a video and answering a call. Gesture: Press forward with your palm. |  |

| Dragging up or down | Function: Adjust the volume. Gesture: Pinch the thumb with forefinger and move up or down. |  |

| Dragging left or right | Function: Fast forward or rewind media content. Gesture: Pinch the thumb with forefinger and move left or right. |  |

Interaction Events Unification

Last updated: 2021-11-04 12:52

This section describes the correct interaction rules for apps on touchscreens and other common input modes (mice, touchpads, and keyboards) in multiple interaction tasks or scenarios. Designers and developers should ensure that the app responds correctly according to the interaction rules and user habits in the current input mode.

Opening/Switching Objects

Application scenarios

A user can click an element to trigger a function, access a new page, or change the status.

| User Input | Interactive Behavior | Legend |

|---|---|---|

| Touchscreen | Tap with one finger |  |

| Mouse | Click the left button/Double-click the left button. |  |

| Touchpad | Tap/Double tap with a finger |  |

| Keyboard | Move the cursor to an object and press Enter. |  |

Generally, a press/release operation using a finger on a touchscreen corresponds to that using a mouse.

In some special scenarios, an interaction may require the user to double-click an object by using a mouse or touchpad, for example, to open a desktop app or a file in computer mode. In this case, the app needs to perform special processing. In addition, the same function cannot support both the click and double-click interaction modes.

Displaying the Menu

Application scenarios

A pop-up menu or shortcut menu is displayed on an element.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Single-finger press and hold. |

| Mouse | Right-click (the same as that on the PC) or press and hold the left mouse button (the touch habit unchanged). |

| Touchpad | Light or firm tap with two fingers (the same as that on the PC) or firm press and hold with one finger (the same as that on the touchscreen). |

| Keyboard | N/A |

The menu here refers to a temporary pop-up window used to display operations that can be performed by a user.

All menus displayed by pressing and holding on the touchscreen need to support the triggering mode of right-clicking with the mouse and clicking with two fingers on the touchpad.

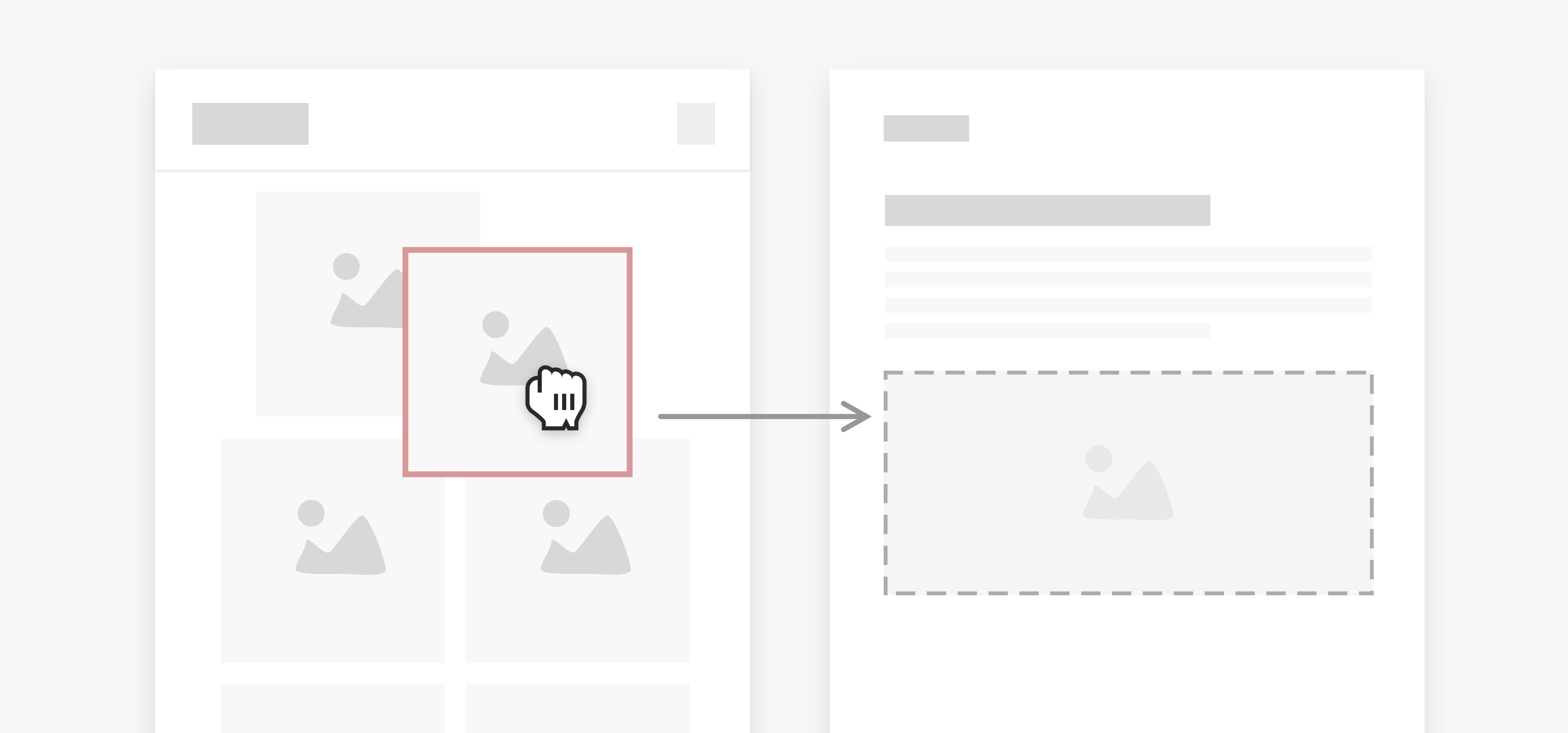
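The trigger rules in this section can be captured in a lookup table so that every input mode reaches the same menu handler. This is only a sketch: the `InputDevice` type, the gesture names, and `opensMenu` are hypothetical labels for the behaviors listed in the table above, not framework identifiers.

```typescript
type InputDevice = "touchscreen" | "mouse" | "touchpad" | "keyboard";

// Gestures that should open the menu on each input device, following the table above.
const MENU_TRIGGERS: Record<InputDevice, readonly string[]> = {
  touchscreen: ["oneFingerLongPress"],
  mouse: ["rightClick", "leftButtonLongPress"],
  touchpad: ["twoFingerTap", "oneFingerLongPress"],
  keyboard: [], // listed as N/A in the table above
};

/** Returns true when the gesture on the given device should display the menu. */
function opensMenu(device: InputDevice, gesture: string): boolean {
  return MENU_TRIGGERS[device].includes(gesture);
}
```

Keeping the mapping in one place makes it easier to check that every menu reachable by touch-and-hold is also reachable by right-clicking and by a two-finger tap.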

Selecting Objects

Application scenarios

A user can touch and hold on the touchscreen to select multiple items. This function is generally used on the list or grid screen.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Single-finger press and hold. |

| Mouse | Right click or press and hold the left mouse button (the touch habit unchanged). |

| Touchpad | Light or firm tap with two fingers or firm press and hold with one finger (the same as that on the touchscreen). |

| Keyboard | Press Ctrl and click the cursor. |

When the mouse or touchpad is used to select an item, the context menu can optionally be displayed.

Sliding Objects

Scenario 1: continuous scrolling of lists and pages

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Swipe after touching the screen. |

| Mouse | When the scroll wheel scrolls upwards, the page scrolls upwards. When the scroll wheel scrolls downwards, the page scrolls downwards. Each time the scroll wheel scrolls a notch, the page scrolls for a certain distance. The default value is 64vp. The value can also be set by the app. |

| Touchpad | The two-finger sliding behavior on the touchpad is the same as the single-finger sliding behavior on the touchscreen. When you swipe up with two fingers, the page scrolls down. When you swipe down with two fingers, the page scrolls up. While the two fingers slide, the page scrolls in a fine and continuous manner. When the two fingers leave the touchpad, the page keeps sliding and decelerates from the speed at which the fingers left the touchpad until it stops. If the list is horizontal, swiping left with two fingers scrolls the page to the right, and swiping right with two fingers scrolls the page to the left. |

| Keyboard | When an item in the list is focused, press the down arrow key to move the focus to the next item in the list. If the list items in the lower part are not completely displayed or are not displayed on the current page, the list is scrolled down when the focus is obtained so that the focused item can be completely displayed. When the focus is moved upwards, the interaction logic is the same except that the direction is reversed. |
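The mouse and keyboard rows of the table above can be sketched as two small functions. This is an illustration under stated assumptions: the function names are hypothetical, scroll positions are expressed as an offset in vp from the top of the content, and a positive notch count is assumed to mean that the wheel was scrolled downwards.

```typescript
const DEFAULT_NOTCH_DISTANCE_VP = 64; // default distance per wheel notch, overridable by the app

/** Returns the new scroll offset after the wheel moves by the given number of notches. */
function scrollByWheel(
  offset: number,
  notches: number,
  maxOffset: number,
  notchDistance: number = DEFAULT_NOTCH_DISTANCE_VP
): number {
  const target = offset + notches * notchDistance;
  return Math.min(Math.max(target, 0), maxOffset); // clamp to the scrollable range
}

/** Returns the scroll offset needed to fully display a newly focused list item. */
function scrollIntoView(
  offset: number,
  viewportHeight: number,
  itemTop: number,
  itemHeight: number
): number {
  if (itemTop < offset) return itemTop; // item is cut off at the top
  const itemBottom = itemTop + itemHeight;
  if (itemBottom > offset + viewportHeight) return itemBottom - viewportHeight; // cut off at the bottom
  return offset; // already fully visible
}
```

The second function scrolls only as far as needed, so the list does not jump when the focused item is already completely displayed.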

Scenario 2: horizontal/vertical pages/objects switching

Only one item is scrolled each time.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Swipe left, right, up, or down on the screen once. |

| Mouse | Scroll the scroll wheel upward by one notch to switch to the previous item. Scroll the scroll wheel downward by one notch to proceed to the next item. Typical scenario: When short videos are played, the page must not stop halfway between the previous and next videos. |

| Touchpad | The two-finger sliding behavior on the touchpad is the same as the single-finger sliding behavior on the touchscreen. Vertical distribution: Swipe up once with two fingers to proceed to the next item. (The period from the fingers touching the touchpad to the fingers leaving it is considered one sliding operation.) Swipe down once with two fingers to go to the previous item. Typical scenario: short video playback. Horizontal distribution: Swipe left once with two fingers to switch to the item on the right. Swipe right once with two fingers to switch to the item on the left. Typical scenarios: image switching during image browsing, card switching on the banner, and desktop navigation. |

| Keyboard | N/A |

Scenario 3: adjusting the slider

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Slide your finger left, right, up, or down along the sliding bar. |

| Mouse | After the cursor is moved to the slider, the value of the slider changes as follows: For a discrete slider, each time the scroll wheel scrolls by one notch, the value of the slider changes by one increment. For a continuous slider, each time the scroll wheel scrolls by one notch, the value of the slider changes by a, where a is set by the app according to the specific scenario. The mouse wheel can control both horizontal and vertical sliders. |

| Touchpad | The double-finger sliding behavior on the touchpad is the same as the single-finger sliding behavior on the touchscreen. After the cursor is moved to the slider, do as follows: For vertical slider: Slide up with two fingers to increase the slider value. Swipe down with two fingers to decrease the slider value. For horizontal slider: Slide right with two fingers to increase the slider value. Slide left with two fingers to decrease the slider value. Optimize the ratio of the sliding distance of two fingers on the touchpad to the value change of the sliding bar (display-control ratio) so that users can easily, quickly, and accurately adjust the value to the target value. |

| Keyboard | N/A |

Scenario 4: adjusting the sliding selector

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Swipe up or down after touching the picker |

| Mouse | After the cursor is moved to picker, the picker changes as follows: Each time the scroll wheel scrolls one notch upward, the picker scrolls one item, and the upper item replaces the current item. Each time the scroll wheel scrolls down by one notch, the picker scrolls one item, and the lower item replaces the current item. |

| Touchpad | Two-finger sliding on the touchpad behaves the same as single-finger sliding on the touchscreen. After the cursor is moved to the picker: Swipe up with two fingers to scroll the list so that the lower items replace the upper items. Swipe down with two fingers to scroll the list so that the upper items replace the lower items. Optimize the relationship between the two-finger sliding distance on the touchpad and the scrolling behavior of the picker so that users can adjust to the target value easily, quickly, and accurately. |

| Keyboard | N/A |

When a slider/picker is embedded in a scrollable page or list, both the slider/picker and the page or list can respond to sliding with the mouse or touchpad. When a cursor-based input device is used, ensure that the slider/picker and the page or list do not conflict with each other.

In this case:

- Each time the cursor moves, the control to which the cursor responds is determined based on the cursor position.

- If the cursor is on the slider/picker before the sliding operation, the slider/picker is adjusted and the list is not scrolled.

- If the cursor is on the list before the sliding operation, the list is scrolled, but the slider/picker does not respond even if the cursor enters the valid range of the slider/picker during the list sliding.

- If the cursor position changes on the screen after a slide, the system determines the responding control again.
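The rules above amount to choosing the responding control once per cursor position and keeping that choice until the cursor moves. The following sketch shows one way to model this. The `ScrollTargetResolver` class, the `Rect` type, and the method names are hypothetical and are not taken from any HarmonyOS API.

```typescript
// Hypothetical model of the rules above; names are illustrative only.
type ScrollTarget = "sliderOrPicker" | "list";

interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

function contains(r: Rect, x: number, y: number): boolean {
  return x >= r.x && x < r.x + r.width && y >= r.y && y < r.y + r.height;
}

class ScrollTargetResolver {
  private locked: ScrollTarget | null = null;

  // The cursor moved on the screen: forget the previous decision so that
  // the next sliding operation is evaluated again.
  onCursorMove(): void {
    this.locked = null;
  }

  // Called for every wheel or two-finger slide event. The slider/picker
  // bounds are passed in each time because list scrolling can move the
  // slider/picker under a stationary cursor.
  resolve(cursorX: number, cursorY: number, sliderBounds: Rect): ScrollTarget {
    if (this.locked === null) {
      this.locked = contains(sliderBounds, cursorX, cursorY) ? "sliderOrPicker" : "list";
    }
    return this.locked;
  }
}
```

Because the decision is locked until `onCursorMove` is called, a slider that scrolls under the cursor while the list is sliding does not take over the gesture.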

Scenario 5: custom sliding

Other custom touch and slide behaviors, such as adjusting the volume or brightness and sliding back on the video playback screen, should also have corresponding mouse and touchpad interactions, provided that the operations do not conflict with each other.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Swipe in a certain direction after touching the screen with your fingers. |

| Mouse | The mouse supports the interactions that correspond to vertical finger swipes. After the cursor is moved to the object: Scrolling the mouse wheel up corresponds to swiping up on the touchscreen. Scrolling the mouse wheel down corresponds to swiping down on the touchscreen. Typical scenario: adjusting the volume or brightness during video playback. |

| Touchpad | Two-finger sliding on the touchpad is consistent with single-finger sliding on the touchscreen, and the interactions that correspond to horizontal and vertical finger sliding are supported. After the cursor is moved to the object: Swiping up with two fingers on the touchpad corresponds to swiping up on the touchscreen. Swiping down with two fingers on the touchpad corresponds to swiping down on the touchscreen. Typical scenarios: adjusting the volume or brightness during video playback, and sliding back. |

| Keyboard | N/A |

Optimize the relationship between the object's behavior and the number of mouse wheel notches or the two-finger sliding distance on the touchpad, so that users can control the object easily, quickly, and accurately.

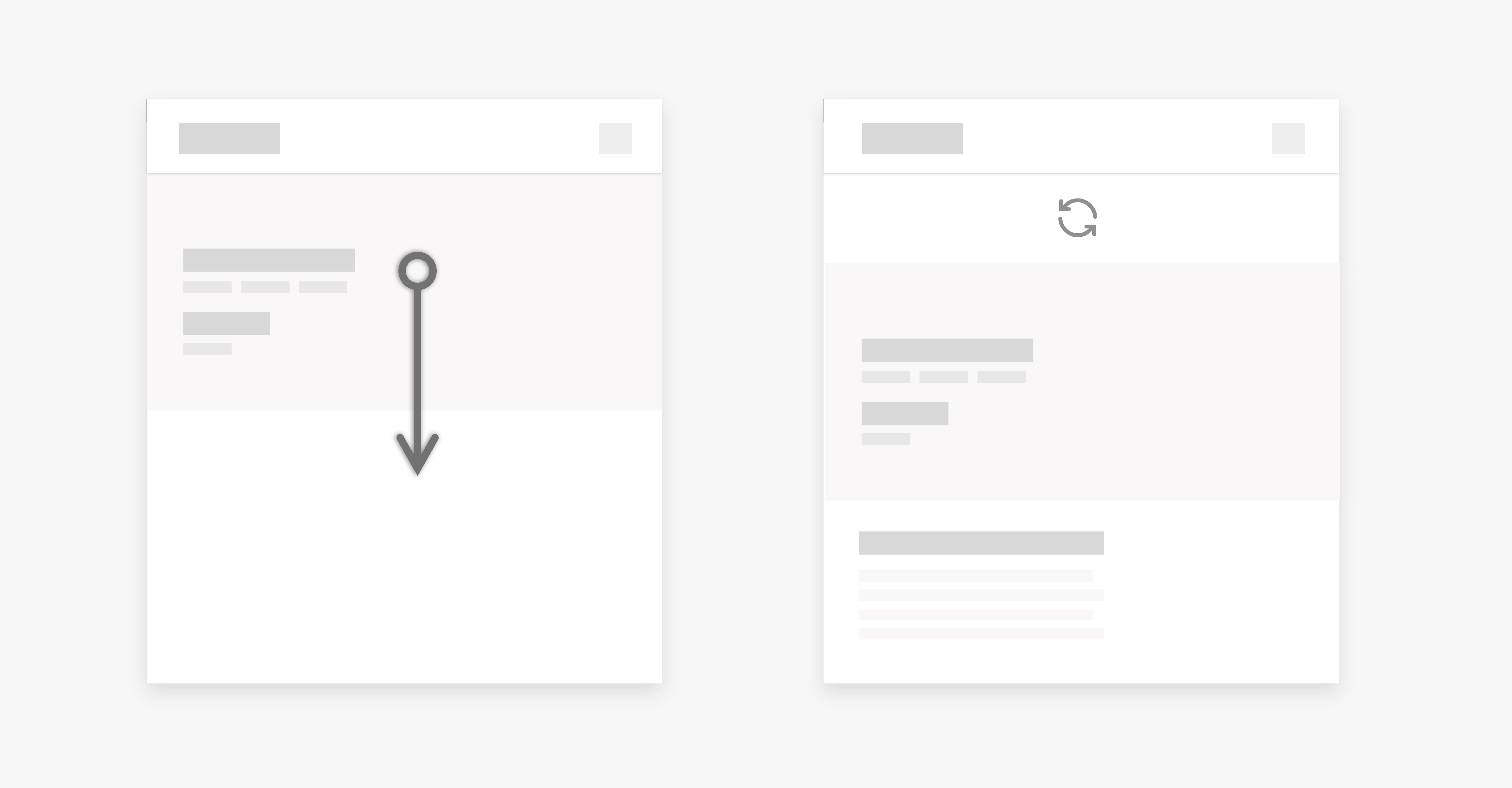

Refresh

Application scenarios

A user flicks down on the list or page to refresh the list or page.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Swipe down on the screen for a certain distance and then release. |

| Mouse | When the list is at the top, continuing to scroll the mouse wheel may trigger a pull-down refresh. The refresh is triggered when the mouse wheel is scrolled at least N consecutive times and the interval between any two adjacent scrolling events is less than T. An instruction is then sent to refresh the list. Until the refresh ends, the list does not respond to scroll wheel events from the (N+1)th onward. After the list returns to its original top position, the next refresh can be triggered in the same way. |

| Touchpad | Two-finger sliding on the touchpad behaves the same as single-finger sliding on the touchscreen. When the list is at the top, sliding down with two fingers continues to drag the list and displays the "loading" animation. When the fingers leave the touchpad, the list rebounds directly if the pull-down distance is less than the specified distance. If the pull-down distance exceeds the specified distance, the refresh animation and the app refresh event are triggered. |

| Keyboard | Ctrl+R |
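The mouse and touchpad refresh conditions in the table above can be sketched as follows. This is an illustration under stated assumptions, not a HarmonyOS API: `WheelRefreshDetector`, `shouldRefreshOnRelease`, and their parameters are hypothetical names, the interval check is applied between adjacent wheel events, and a pull-down distance exactly equal to the threshold is treated as a rebound because the text does not specify that case.

```typescript
// Hypothetical detector for illustration; minScrolls plays the role of N
// and maxIntervalMs the role of T in the table above.
class WheelRefreshDetector {
  private readonly minScrolls: number;
  private readonly maxIntervalMs: number;
  private count = 0;
  private lastTimeMs: number | null = null;
  private refreshing = false;

  constructor(minScrolls: number, maxIntervalMs: number) {
    this.minScrolls = minScrolls;
    this.maxIntervalMs = maxIntervalMs;
  }

  // Call for each wheel event received while the list is already at the top.
  // Returns true when this event should trigger a refresh.
  onWheelAtTop(timeMs: number): boolean {
    if (this.refreshing) {
      return false; // events during a refresh are ignored
    }
    if (this.lastTimeMs !== null && timeMs - this.lastTimeMs >= this.maxIntervalMs) {
      this.count = 0; // the gap was too long, so the streak starts over
    }
    this.count += 1;
    this.lastTimeMs = timeMs;
    if (this.count >= this.minScrolls) {
      this.refreshing = true;
      this.count = 0;
      this.lastTimeMs = null;
      return true;
    }
    return false;
  }

  // Call when the refresh has ended and the list is back at the top.
  onRefreshFinished(): void {
    this.refreshing = false;
  }
}

// Touchpad case: decide on finger release whether to refresh or rebound.
// Assumption: a distance equal to the threshold rebounds.
function shouldRefreshOnRelease(pullDistance: number, threshold: number): boolean {
  return pullDistance > threshold;
}
```

Suitable values for N, T, and the pull-down threshold are left to the app and are not specified here.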

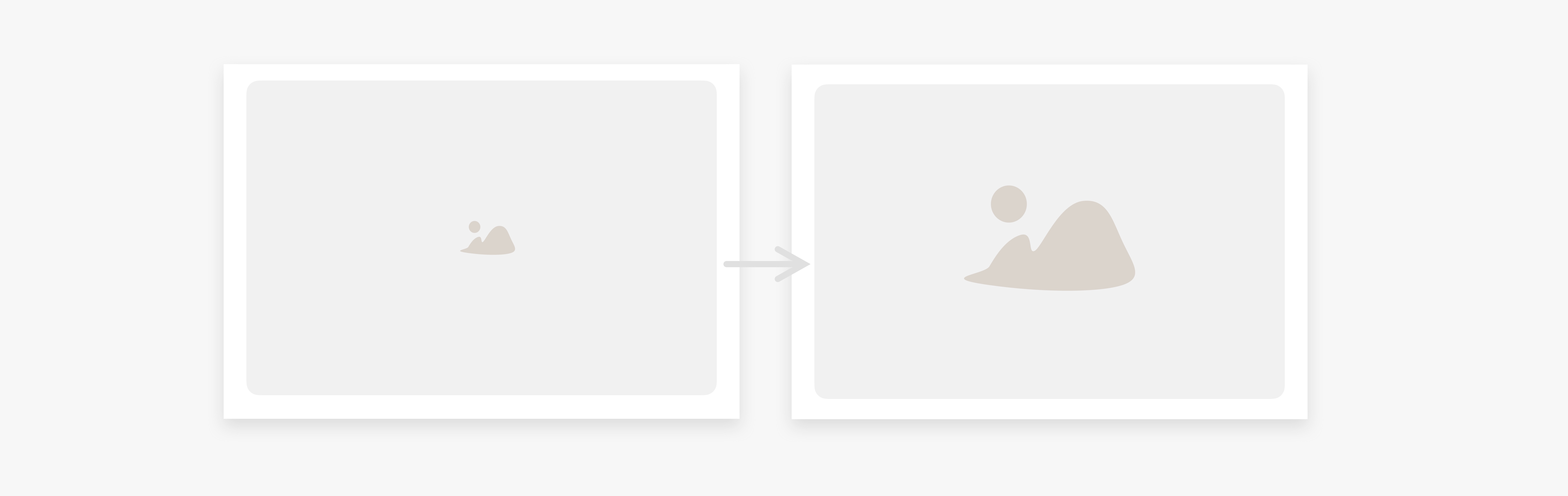

Zooming an Object In or Out

Application scenarios

Adjust the image size when viewing an image.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Spread two fingers apart on the screen to zoom in on the content, and pinch two fingers together to zoom out. |

| Mouse | Press Ctrl and scroll the mouse wheel to zoom the content in or out around the cursor position. Each time the mouse wheel scrolls up by one notch, the object under the cursor is zoomed in by N times, with the cursor position as the center. Each time the mouse wheel scrolls down by one notch, the object is zoomed out to 1/N of its current size. |

| Touchpad | Pinching with two fingers on the touchpad behaves the same as pinching with two fingers on the touchscreen. After the cursor is moved to an object: Touch the touchpad with two fingers and move them apart to zoom in on the object. Touch the touchpad with two fingers and move them toward each other to zoom out. Optimize the display-control ratio so that users can adjust to the target size easily, quickly, and accurately. |

| Keyboard | Ctrl+plus sign (+): Zooms in on the object by N times. Ctrl+minus sign (-): Zooms out the object to 1/N of its original size, using the center point of the object. |
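Zooming by N times per wheel notch while keeping the point under the cursor fixed can be sketched with a simple scale-and-offset transform. The `ViewTransform` type and the `zoomAtPoint` and `zoomByWheel` functions are hypothetical names for this example. The sketch assumes that a content coordinate c is drawn on screen at `offset + c * scale`.

```typescript
// Hypothetical view transform: screen = offset + content * scale.
interface ViewTransform {
  scale: number;
  offsetX: number;
  offsetY: number;
}

// Multiply the scale by `factor` while keeping the screen point (px, py)
// over the same content point.
function zoomAtPoint(t: ViewTransform, factor: number, px: number, py: number): ViewTransform {
  return {
    scale: t.scale * factor,
    offsetX: px - (px - t.offsetX) * factor,
    offsetY: py - (py - t.offsetY) * factor,
  };
}

// Positive notches (wheel up) zoom in by n per notch; negative notches
// (wheel down) zoom out to 1/n per notch.
function zoomByWheel(
  t: ViewTransform,
  notches: number,
  n: number,
  cursorX: number,
  cursorY: number
): ViewTransform {
  return zoomAtPoint(t, Math.pow(n, notches), cursorX, cursorY);
}
```

The keyboard shortcuts can reuse `zoomAtPoint` by passing the object's center point instead of the cursor position.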

Rotating an Object

Application scenarios

Rotate a picture when editing it.

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Touch the screen with two fingers and rotate them clockwise or counterclockwise. The object rotates with the fingers. |

| Mouse | N/A |

| Touchpad | Two-finger rotation on the touchpad behaves the same as on the touchscreen. After the cursor is moved to an object: Touch the touchpad with two fingers and rotate them clockwise to rotate the object clockwise. Touch the touchpad with two fingers and rotate them counterclockwise to rotate the object counterclockwise. |

| Keyboard | N/A |

In some scenarios, such as browsing pictures or maps, users must be able to zoom in, zoom out, and rotate an object at the same time with two fingers on the touchpad.

Dragging an Object

| User Input | Interactive Behavior |

|---|---|

| Touchscreen | Touch and hold an object, and then drag it to change its position. |

| Mouse/Touchpad | Drag an object by pressing the left mouse button, or by pressing with a single finger on the touchpad, and then moving. (Unlike on the touchscreen, there is no need to press and hold and wait before the object can be dragged.) |

| Keyboard | N/A |

Basic Concepts

Last updated: 2021-11-04 12:52

Virtual Pixel Unit: vp

A virtual pixel (vp) is a unit of size that a device presents to an application. It is distinct from the physical pixels of the screen hardware and provides a flexible way to adapt display effects to different screen densities.

Using virtual pixels gives elements a consistent visual size on devices with different screen densities.

Font Pixel Unit: fp

By default, one font pixel is the same size as one virtual pixel, that is, 1 fp = 1 vp. If the user selects a larger font size in the settings, the actual font size is the virtual pixel size multiplied by the scale coefficient, that is, 1 fp = 1 vp * scale.
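As a quick worked example of the relationship above, the conversion from fp to vp can be written as a one-line function. The `fpToVp` name and the `fontScale` parameter are hypothetical, and the scale value 1.5 is only an illustrative setting, not a value defined by HarmonyOS.

```typescript
// Convert a size in fp to vp using 1 fp = 1 vp * scale.
// fontScale defaults to 1, which is the default font size setting.
function fpToVp(fp: number, fontScale: number = 1): number {
  return fp * fontScale;
}

const defaultSize = fpToVp(16);      // 16 vp at the default font size
const enlargedSize = fpToVp(16, 1.5); // 24 vp with an illustrative scale of 1.5
```

Text sized in fp therefore grows with the user's font size setting, while layout sized in vp stays the same.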

Colors

Last updated: 2021-11-04 12:52

HarmonyOS’s inclusive color system is designed for everyone. We put thought and care into delivering a considerate experience to each and every user. Color itself is not good or bad, nor is it right or wrong. The colors in product design depend on how they are used. Just like the colors on a palette, masterpieces are created based on how a painter uses colors.

Brand Color

Colors impart vitality to the application interface and provide users with visual continuity. In addition, proper use of colors can convey key status information, provide instant status feedback to users, and present data visualization solutions.

Blue is the dominant color of HarmonyOS. According to human factors research, blue is the color with the highest acceptance rate among both men and women. Across geographic regions worldwide, blue is also the most popular color. More importantly, blue can still be identified by most people with color impairments. This meets HarmonyOS’s design requirements for those with color impairments.

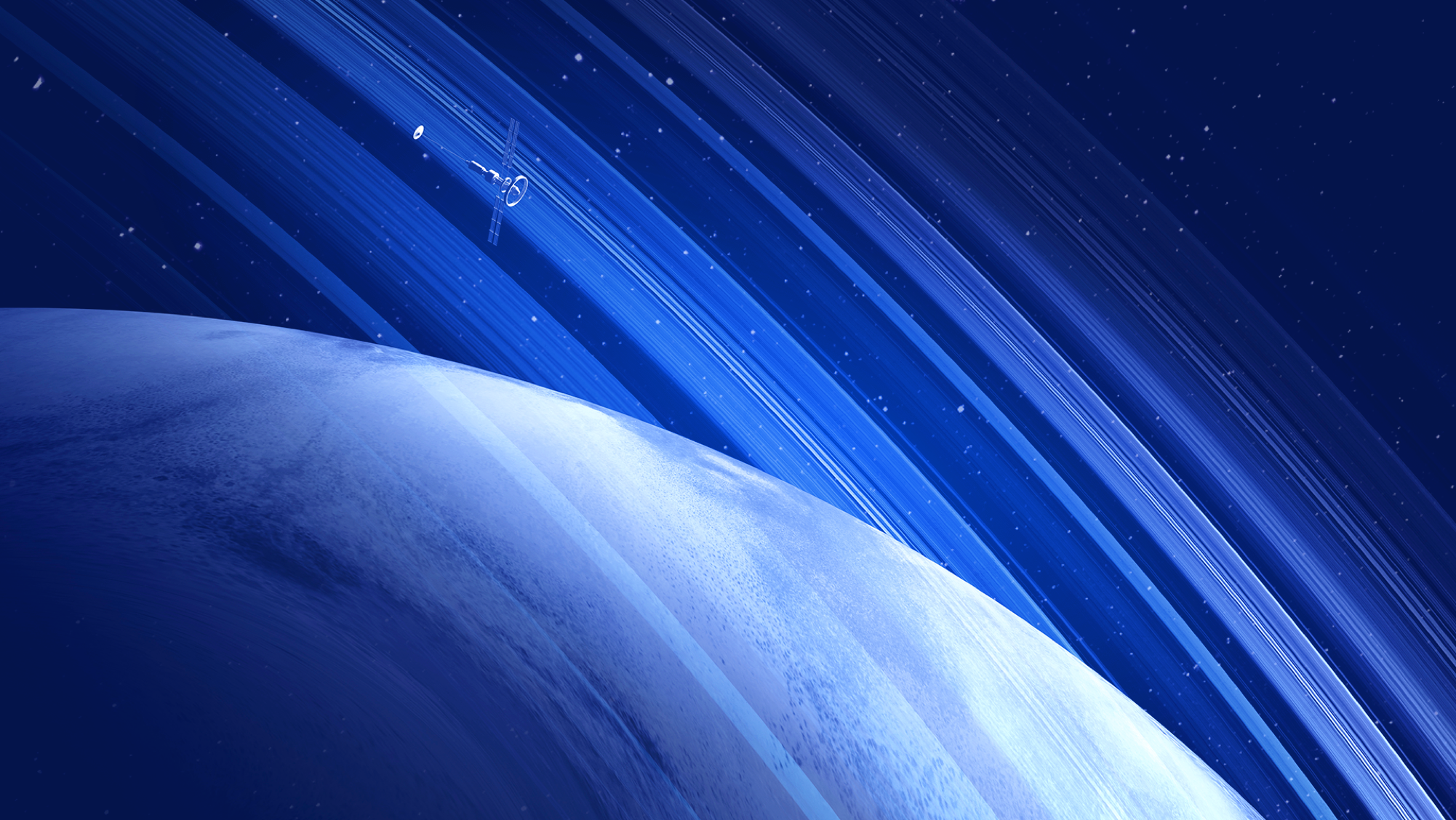

Cosmic Blue

We choose cosmic blue, a deeper and broader blue color, to symbolize the purity and tranquility of the beginning of the universe.

Color Values and Application Scenarios

In terms of color design, a unified color language is retained and adjusted for different application scenarios across multiple terminals, delivering a customized user experience.

- Mobile phone – light theme: Used in the light theme on mobile phones for emphasis against the light background.

- Mobile phone – dark theme: Used in the dark theme on mobile phones for emphasis against the dark background.

- HUAWEI Vision: Used in the dark theme on HUAWEI Vision.

- Smart wearables: Used in the dark theme on wearables.

Snowy Gray

There is no absolute black or white in nature. As such, a snowy gray color with a hint of light blue is used as the background color of the card interface to connote a feeling of purity.

Cosmic blue and snowy gray, like the sky and the earth, can be used together to make the entire interface look cleaner and more harmonious.

Snowy gray can be used as the background color of the card interface to convey a hierarchy of information. It can also be used as the foreground color in the dark theme to reduce the contrast between the foreground and background and thereby reduce visual strain.

Color values of snowy gray

- Snowy gray as the background of the card interface

- Snowy gray as the focal color in the dark theme

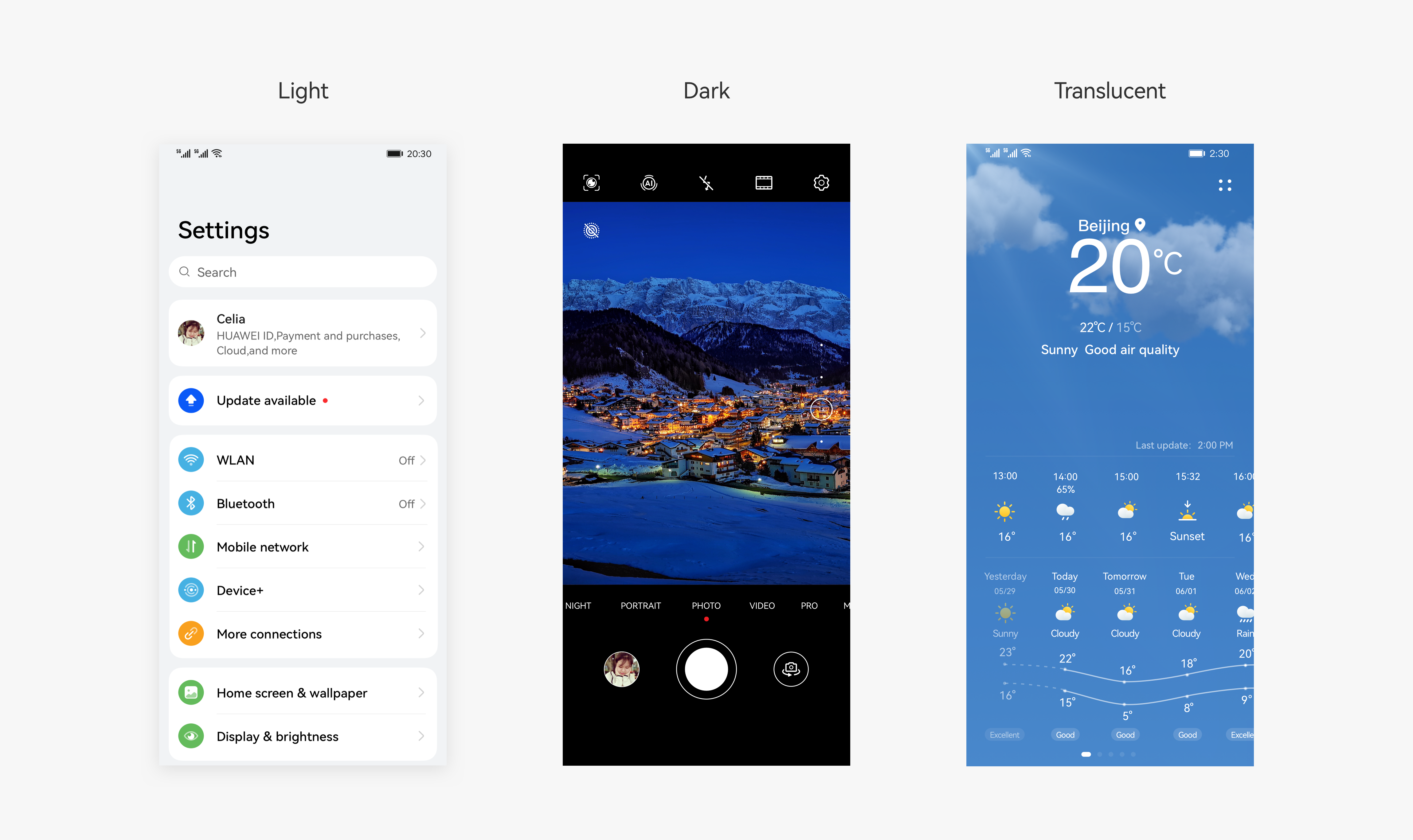

Themes

HarmonyOS offers three themes: light, dark, and translucent. An appropriate theme can be selected based on different devices and applications. For example:

- It is recommended that the light theme be used as the main theme and other themes be used as supplements on mobile phones. If necessary, users can also enable the dark mode to switch from the light theme of an application interface to the dark theme.

- The application scenarios of HUAWEI Vision are relatively fixed, and most of the videos are played indoors. As such, the dark theme is mainly selected as the theme style for large screens.

- Smart wearables mainly use the dark theme to reduce power consumption.
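The theme recommendations above can be expressed as a small lookup. This is only a sketch: the `Theme` and `DeviceType` types, the `DEFAULT_THEME` table, and the `resolveTheme` function are hypothetical names and do not correspond to a HarmonyOS API.

```typescript
// Hypothetical names for illustration; not a HarmonyOS API.
type Theme = "light" | "dark" | "translucent";
type DeviceType = "phone" | "vision" | "wearable";

// Main theme per device type, following the recommendations above.
const DEFAULT_THEME: Record<DeviceType, Theme> = {
  phone: "light",
  vision: "dark",
  wearable: "dark",
};

// On phones, the user can enable dark mode to switch from light to dark.
function resolveTheme(device: DeviceType, darkModeEnabled: boolean): Theme {
  if (device === "phone" && darkModeEnabled) {
    return "dark";
  }
  return DEFAULT_THEME[device];
}
```

The translucent theme is listed in the type for completeness. When to use it is left to the app because the text does not state a rule for it.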

Three theme styles are supported for mobile phones.

- HUAWEI Vision in the dark theme

- Wearables in the dark theme

Dark Mode

The dark theme meets more personalized requirements. Based on human factors research, a range of colors is specially chosen for the dark mode for comfortable viewing. If necessary, users can enable the dark mode to switch from the light theme of an application interface to the dark theme.

Related Links

- Color Guidelines for Mobile Phones/Foldables/Tablets

- Color Guidelines for HUAWEI Vision

- Color Guidelines for Wearables

Fonts

Last updated: 2022-05-27 14:35

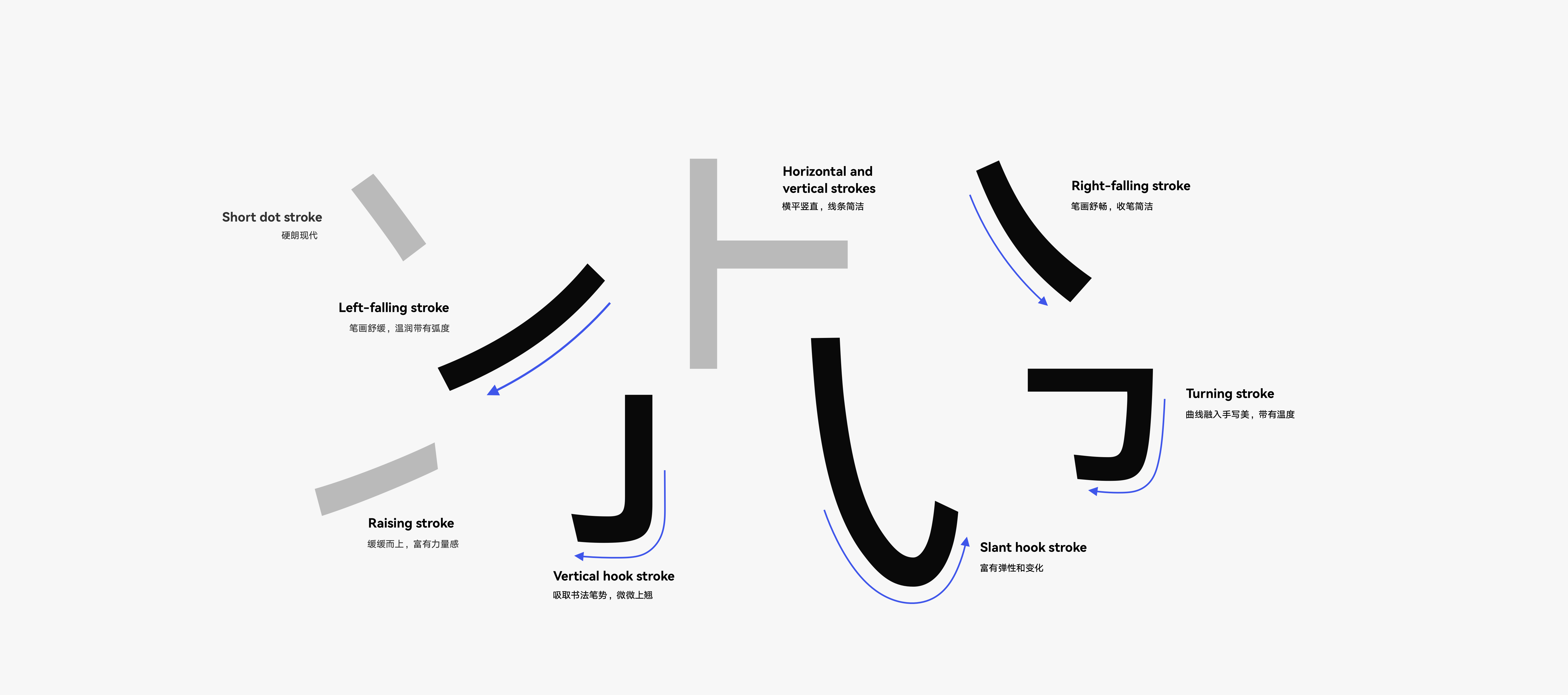

Brand New HarmonyOS Font

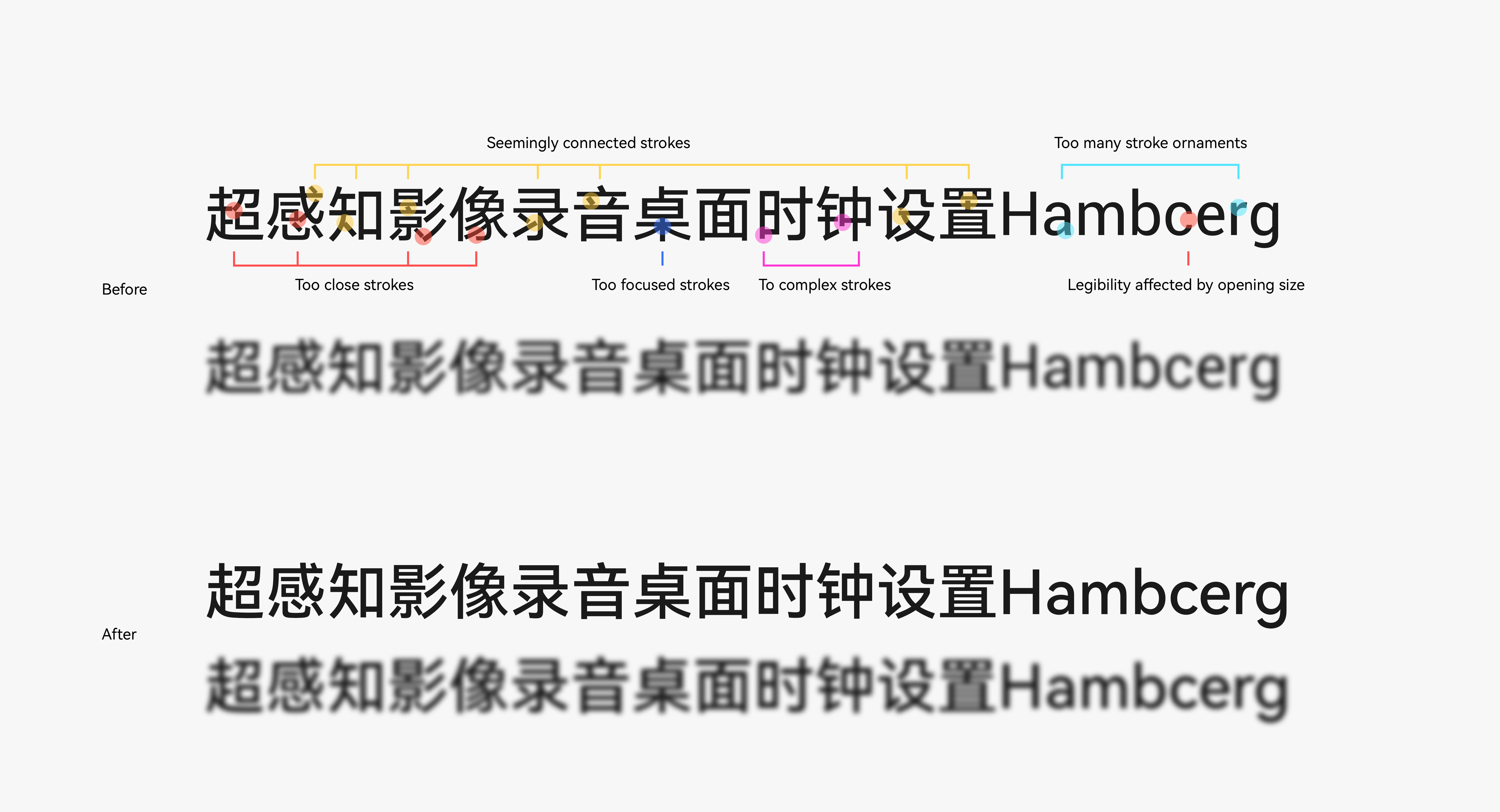

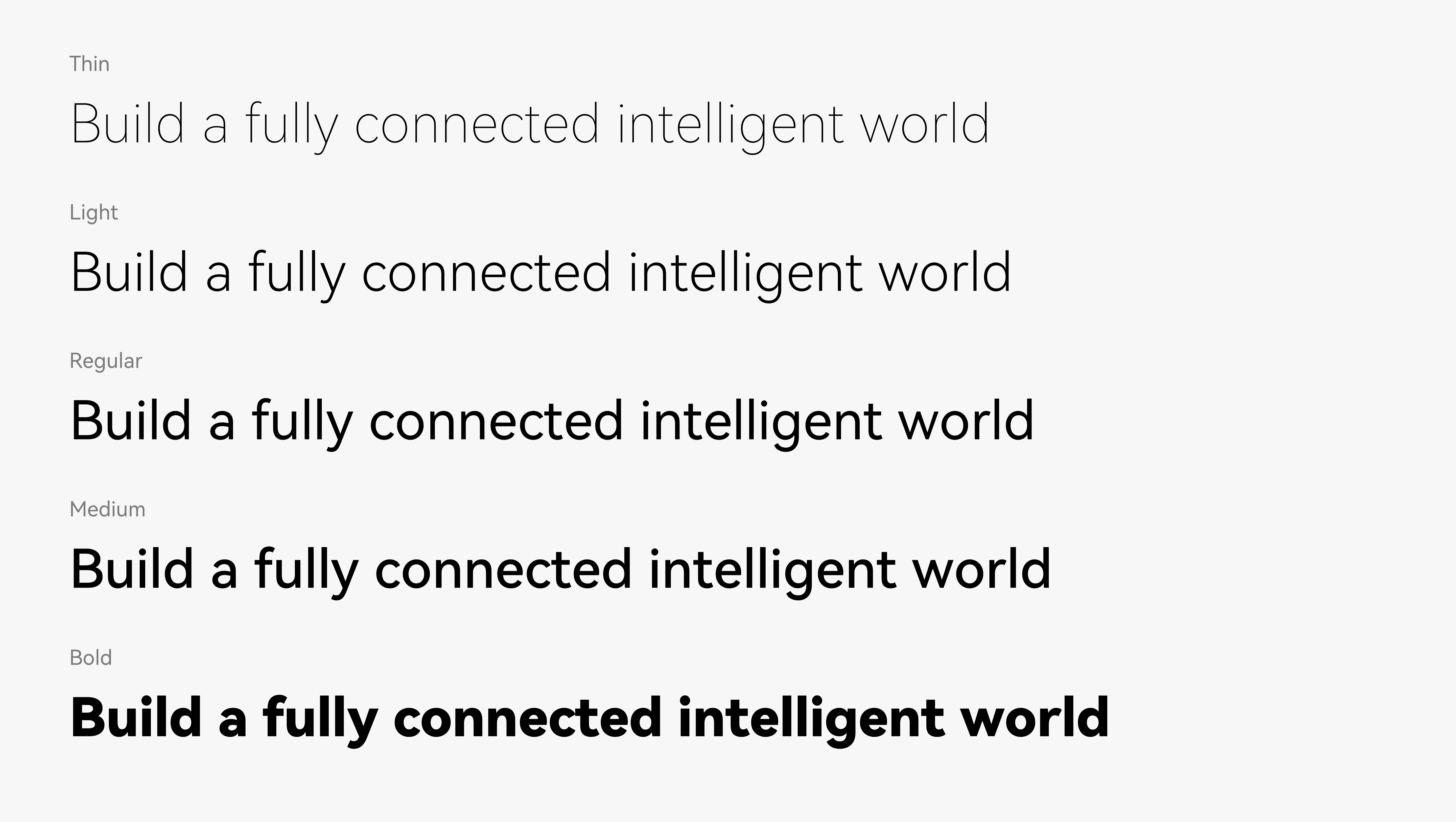

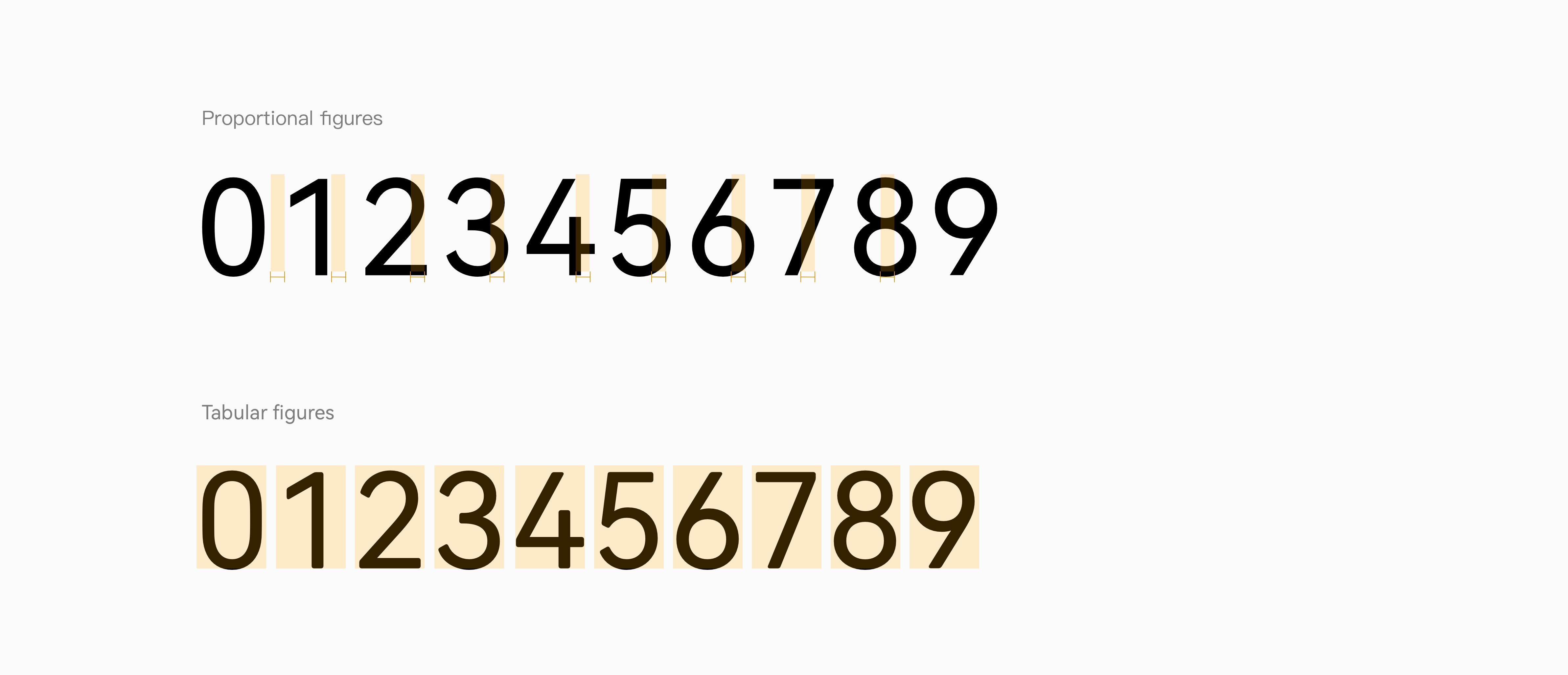

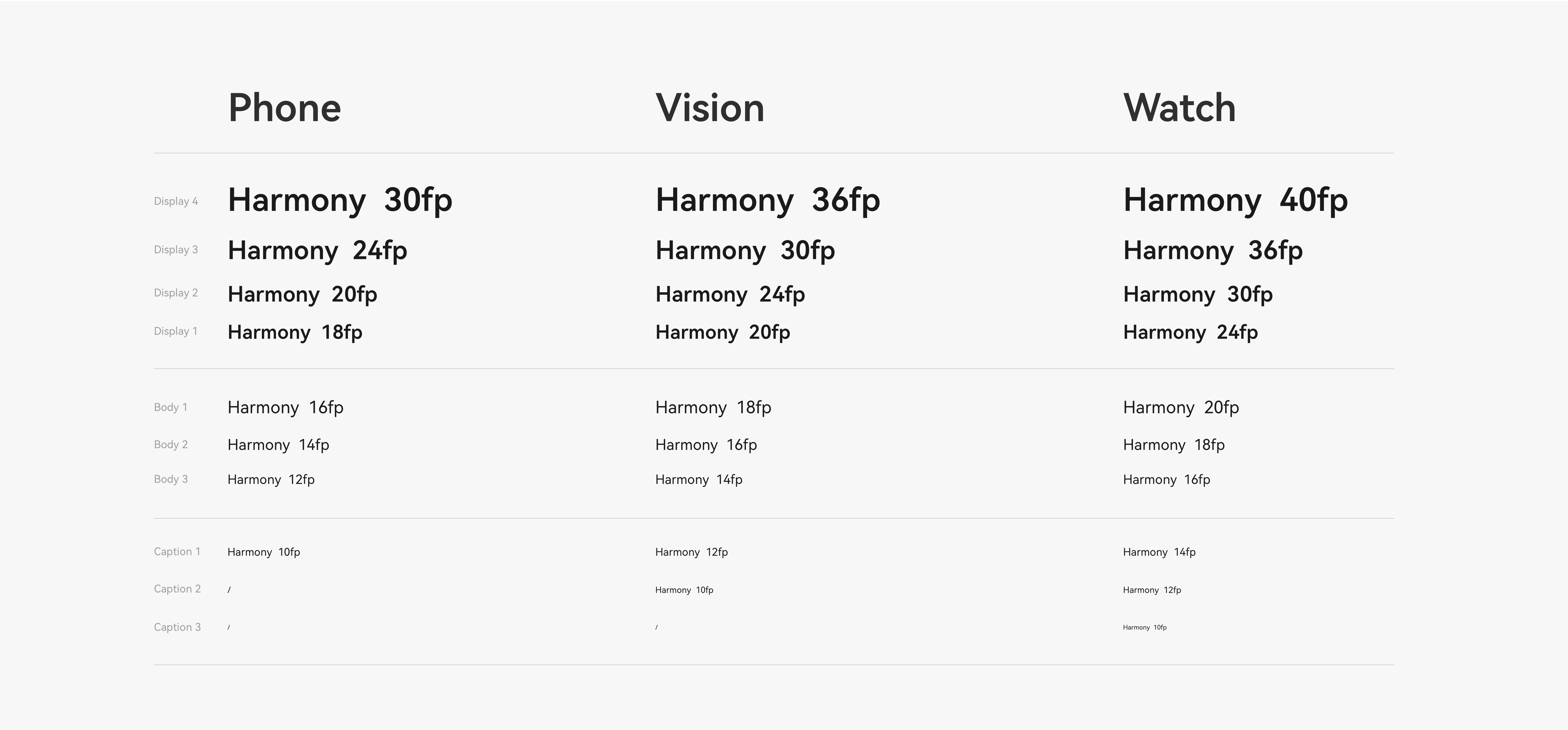

Based on users’ reading feedback on multiple terminals in different scenarios, a brand new default font — HarmonyOS Sans — has been designed for HarmonyOS with a variety of considerations, including the dimensions of different devices, usage scenarios, and different users’ requirements for font size and weight due to differences in line-of-sight and angle-of-view.

Downloading HarmonyOS Sans

Brand New Font Typeface Design