In this article, we will learn about metadata stores, the need for them, their components, and metadata store management.

Photo by Manuel Geissinger

Machine learning is predominantly data-driven, involving large amounts of raw and intermediate data. The goal is usually to create and deploy a model in production to be used by others. To understand the model, it is necessary to retrieve and analyze the output of our ML model at various stages, along with the datasets used for its creation. The data that describes these outputs and datasets is called metadata: simply put, metadata is data about data.

In this article, we will learn about:

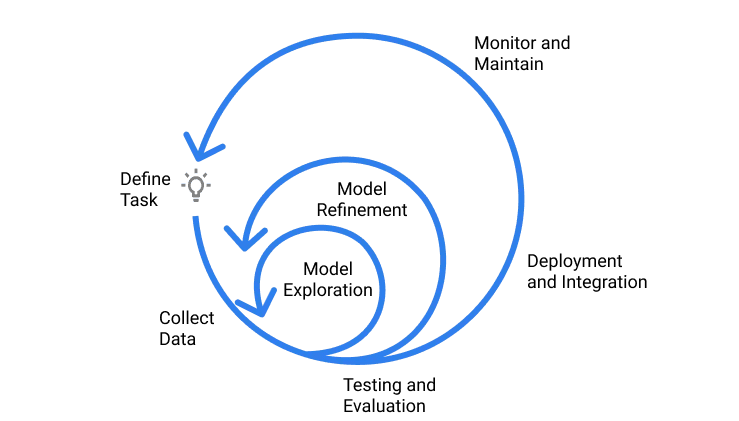

The process of building machine learning models is loosely similar to conducting a science experiment. You start with a hypothesis, design an experiment to test it, refine the design, and then pick the method that best fits your data. With ML, you start with a hypothesis about which input data might produce the desired results, train models, and tune the hyperparameters until you build a model that produces the results you need.

Fig: Machine Learning Development Lifecycle. [Source]

Storing these experimental data helps with comparability and reproducibility. The iterative model building process will be in vain if the experimental models’ parameters aren’t comparable. Reproducibility is also essential if there is a need to reproduce previous results. Given the dynamic and stochastic nature of ML experiments, achieving reproducibility is complicated.

Storing the metadata helps in retraining the same model and getting the same results. With so much experimental data flowing through the pipeline, it’s essential to keep the metadata of each experimental model separate from the input data. Hence the need for a metadata store, i.e., a database that holds metadata.
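To make the idea of "a database with metadata" concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The table layout, the function names (`create_store`, `log_run`, `get_run`), and the example values are all assumptions made for illustration; real metadata stores track far more than this.

```python
import json
import sqlite3


def create_store(path=":memory:"):
    """Open a SQLite database and create one table for run metadata."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS runs ("
        "run_id TEXT PRIMARY KEY, params TEXT, metrics TEXT)"
    )
    return conn


def log_run(conn, run_id, params, metrics):
    """Store one experiment's parameters and metrics as JSON text."""
    conn.execute(
        "INSERT INTO runs (run_id, params, metrics) VALUES (?, ?, ?)",
        (run_id, json.dumps(params, sort_keys=True), json.dumps(metrics, sort_keys=True)),
    )
    conn.commit()


def get_run(conn, run_id):
    """Return (params, metrics) for a run, or None if it was never logged."""
    row = conn.execute(
        "SELECT params, metrics FROM runs WHERE run_id = ?", (run_id,)
    ).fetchone()
    if row is None:
        return None
    return json.loads(row[0]), json.loads(row[1])


# Illustrative values only; they do not come from a real experiment.
store = create_store()
log_run(store, "run-001", {"learning_rate": 0.01, "max_depth": 5}, {"accuracy": 0.91})
params, metrics = get_run(store, "run-001")
```

Keeping the metadata in its own table, keyed by a run identifier, is what keeps it separate from the input data while still letting two runs be compared later.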

Now that we have discussed why storing metadata is important, let’s look at the different types of metadata.

Data used for model training and evaluation play a dominant role in comparability and reproducibility. Other than data, you can store the following:

The goal of experimentation is usually to settle on a model that fits our business needs. Until the experiment ends, it’s hard to pin down which model to proceed with. So it is useful, and saves a lot of time, if all the experimental models are reproducible. Note that the focus is on making models reproducible rather than retrievable: to retrieve a model, one has to store every model, which takes up too much space. This is avoidable, as the following metadata helps one reproduce a model when needed.

The raw data has already been accounted for and saved for retrieval. But this raw data is not always fed directly to the model for training. In most cases, the crucial information that the model needs, i.e., the features, is picked from the raw data and becomes the model’s input.

Since we aim for reproducibility, we need to guarantee consistency in the way the selected features are processed, so the feature preprocessing steps need to be saved. Some examples of preprocessing steps are feature augmentation, handling missing values, and transforming the data into the format that the model requires.
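One way to save preprocessing steps is to record them as plain data (a step name plus its arguments) and replay them by name. The sketch below assumes two toy steps, `fill_missing` and `scale`, and a hypothetical `STEP_REGISTRY`; none of these names come from a specific library.

```python
import json


def fill_missing(values, fill_value):
    """Replace None entries with a fixed value."""
    return [fill_value if v is None else v for v in values]


def scale(values, factor):
    """Multiply every entry by a constant factor."""
    return [v * factor for v in values]


# Hypothetical registry mapping recorded step names to functions.
STEP_REGISTRY = {"fill_missing": fill_missing, "scale": scale}


def apply_steps(values, steps):
    """Apply the recorded steps in order, looking each one up by name."""
    for step in steps:
        values = STEP_REGISTRY[step["name"]](values, **step["args"])
    return values


# The recipe is plain data, so it can be saved alongside the run's metadata.
steps = [
    {"name": "fill_missing", "args": {"fill_value": 0.0}},
    {"name": "scale", "args": {"factor": 0.5}},
]
saved = json.dumps(steps)

raw_feature = [2.0, None, 4.0]
first_result = apply_steps(raw_feature, steps)
replayed_result = apply_steps(raw_feature, json.loads(saved))
```

Because the saved recipe round-trips through JSON, replaying it on the same raw feature gives the same processed values, which is the consistency the paragraph above asks for.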

To recreate the model, store the type of model used, such as AlexNet, YOLOv4, Random Forest, or SVM, along with the framework, such as PyTorch, TensorFlow, or scikit-learn, and the versions of both. This ensures there is no ambiguity about which model to select when reproducing it.

The ML model usually has a loss or cost function. To create a robust and efficient model, we aim to minimize the loss function. The weights and biases at which the loss is minimized are the model’s learned parameters, while the settings that steer training toward them, such as the learning rate, batch size, or tree depth, are the hyperparameters. Storing the hyperparameters makes it possible to reproduce the efficient model created earlier, saves the processing time otherwise spent searching for the right values, and speeds up the model selection process.
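The model type, framework, version, and hyperparameters can be bundled into a single record. The sketch below is one possible shape for such a record; the `ModelRecord` class, its field names, and the example values are assumptions for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical record of what is needed to rebuild a model."""
    model_type: str
    framework: str
    framework_version: str
    hyperparameters: dict = field(default_factory=dict)

    def to_json(self):
        """Serialize with sorted keys so equal records give equal text."""
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self):
        """Short content hash that identifies this exact configuration."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]


# Illustrative values only.
record_a = ModelRecord("RandomForest", "scikit-learn", "1.4.2",
                       {"n_estimators": 100, "max_depth": 5})
record_b = ModelRecord("RandomForest", "scikit-learn", "1.4.2",
                       {"max_depth": 5, "n_estimators": 100})
record_c = ModelRecord("RandomForest", "scikit-learn", "1.4.2",
                       {"n_estimators": 200, "max_depth": 5})
```

Two records with the same content share a fingerprint regardless of key order, while changing a single hyperparameter produces a different one, which makes experimental models easy to tell apart.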

The results from the model evaluation are important in understanding how well you have built your model. They help in figuring out:

Storing these data helps in performing model evaluation at any given point in time.

Model context is information about the environment of an ML experiment that may or may not affect the experiment’s output but could be a factor of change in it.

Model context includes:
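As an illustration of capturing part of an experiment’s environment, the sketch below collects a few details with Python’s standard library. The chosen fields and the `capture_context` function are assumptions made for this example, not a definitive list of what model context contains.

```python
import platform


def capture_context(random_seed):
    """Collect a few environment details that could influence a run."""
    return {
        "python_version": platform.python_version(),
        "python_implementation": platform.python_implementation(),
        "operating_system": platform.system(),
        "machine": platform.machine(),
        # The seed is passed in by the caller; it is recorded, not set, here.
        "random_seed": random_seed,
    }


context = capture_context(random_seed=42)
```

Saving such a dictionary with every run makes it possible to check later whether two diverging results came from different environments.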

Now, we know about metadata, the need for it, and what comprises a metadata store. Let’s look at some of the use cases of metadata stores and how you can use them in your ML workflow:

Storing metadata without any management is like keeping thousands of books unorganized. We store this data to speed up model building; without management, the data is harder to retrieve and reproducibility is compromised. Metadata management ensures data governance.

Here is a comprehensive list of why metadata management is necessary:

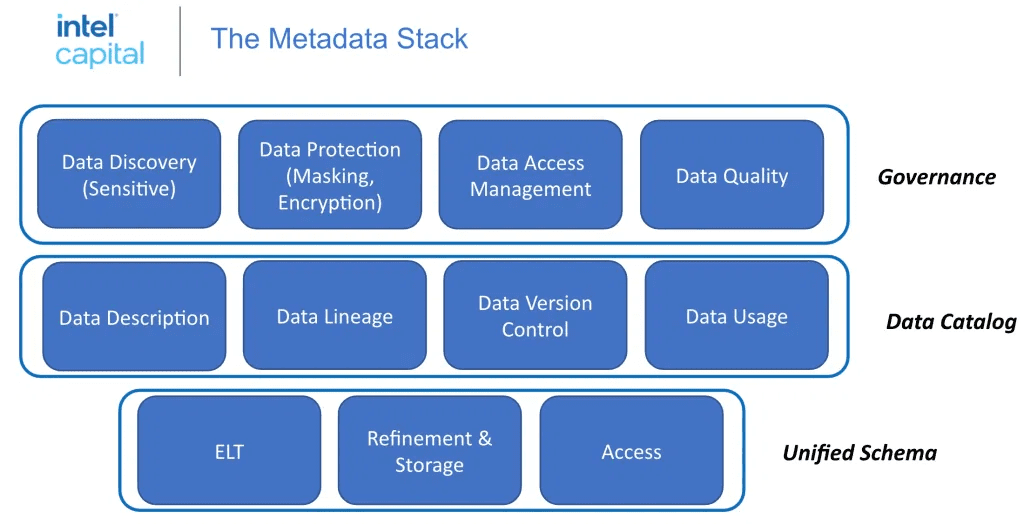

A podcast on Rise of Meta management by Assaf Araki and Ben Lorica beautifully explains the three building blocks of metadata management systems.

Fig: Metadata Stack. [Source]

The metadata needs to be collected from all systems. The three components in this layer do this job:

Since it takes advantage of the processing capability already built into a data storage infrastructure, ELT reduces the time data spends in transit and boosts efficiency.

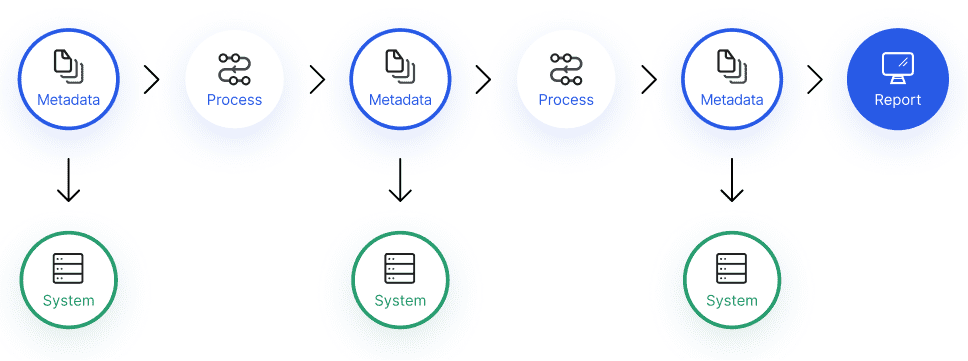

Now that we have collected the data in the previous layer, the data needs to be categorized into a catalog to make it informative and reliable. Its four components help in achieving this task:

Fig: Data Lineage process [Source]

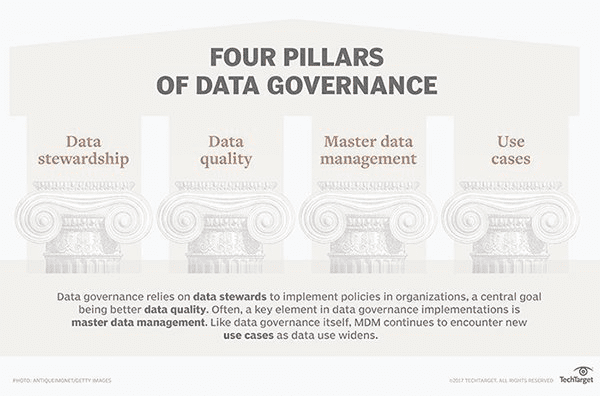

As the name suggests, this layer collectively works to govern the data and ensure that the data is consistent and secure. It is the process organizations use to manage, utilize and protect data in enterprise systems.

Fig: Pillars of Data Governance. [Source]


Coming to the components that ensure data governance:

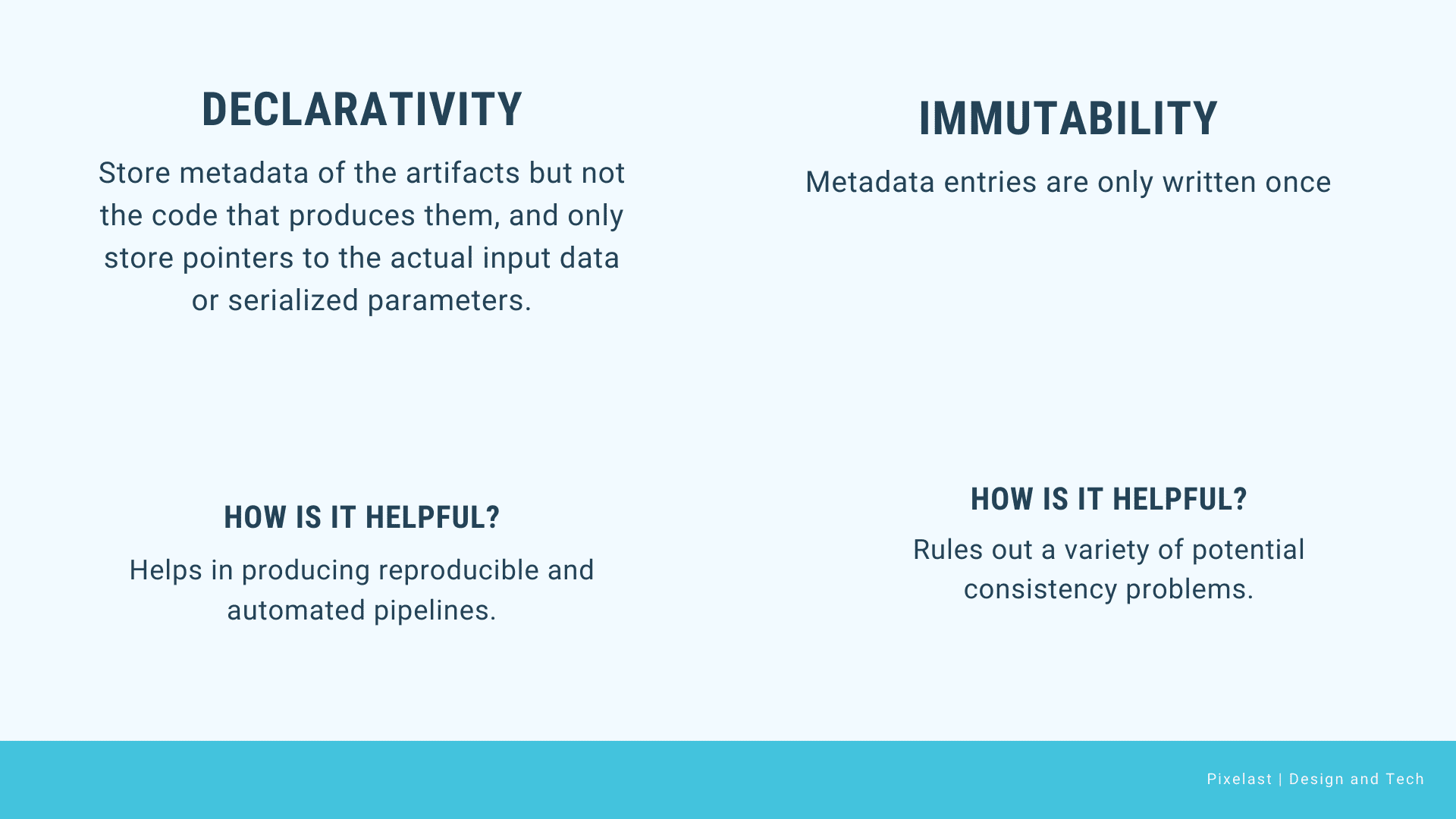

Declarative metadata management is a lightweight system that tracks the lineage of the artifacts produced during your ML workflow. It automatically extracts metadata such as hyperparameters of models, schemas of datasets, and architecture of deep neural networks.
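To illustrate what automatic extraction of hyperparameters can look like, the sketch below reads them straight off a model object instead of asking the user to log them by hand. `ToyClassifier` and `extract_hyperparameters` are hypothetical names; the approach relies on the convention that constructor arguments are stored as attributes of the same name, which is similar in spirit to how scikit-learn estimators expose `get_params()`.

```python
import inspect


class ToyClassifier:
    """Stand-in for a framework model; not a real library class."""

    def __init__(self, learning_rate=0.1, n_layers=2, activation="relu"):
        self.learning_rate = learning_rate
        self.n_layers = n_layers
        self.activation = activation


def extract_hyperparameters(model):
    """Read constructor argument names and look up their values on the model."""
    signature = inspect.signature(type(model).__init__)
    names = [name for name in signature.parameters if name != "self"]
    return {name: getattr(model, name) for name in names}


model = ToyClassifier(learning_rate=0.01, n_layers=4)
extracted = extract_hyperparameters(model)
```

The user only builds the model; the metadata, including the defaults they never typed, is gathered from the object itself.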

A declarative metadata system can be used to enable a variety of functionalities, such as:

Here, the experimental metadata and the ML pipelines, which define complex feature transformation chains, are extracted automatically, which eases metadata tracking. This is achieved with a schema designed to store lineage information and ML-specific attributes. The figure below shows the important principles of declarative metadata management.

Fig: Important principles of Declarative Metadata Management. Source: Graphical representation of principles discussed in DMM – Image created by the author

The system employs a three-layered architecture to apply the principles discussed above.

The high-level clients in layer 3 enable automated metadata extraction from the internal data structures of popular ML frameworks.

Let’s take examples from some of these frameworks to get a clearer understanding.

Now that we have a brief understanding of declarative metadata management, let’s see how these properties improve your ML workflow:

To take declarative metadata management a step beyond automatic metadata extraction, one could enable meta-learning, which would recommend features, algorithms, or hyperparameter settings for new datasets. This requires implementing automated computation of meta-features for the stored datasets, along with similarity queries that use these meta-features to find the datasets most similar to new data.
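The two ingredients named above, meta-feature computation and a similarity query, can be sketched in a few lines. The three meta-features used here (row count, column count, mean of column means), the function names, and the toy datasets are all assumptions chosen to keep the example small; a practical system would use richer, normalized meta-features.

```python
import math
import statistics


def meta_features(rows):
    """Summarize a numeric dataset (list of equal-length rows) as a small vector."""
    n_rows = len(rows)
    n_cols = len(rows[0])
    column_means = [statistics.fmean(column) for column in zip(*rows)]
    return [float(n_rows), float(n_cols), statistics.fmean(column_means)]


def most_similar(new_features, catalog):
    """Return the name of the catalog entry closest in Euclidean distance."""
    return min(catalog, key=lambda name: math.dist(new_features, catalog[name]))


# Toy datasets standing in for datasets already held in the metadata store.
small_dataset = [[1.0, 2.0], [3.0, 4.0]]
wide_dataset = [[10.0, 20.0, 30.0, 40.0]] * 3

catalog = {
    "small_tabular": meta_features(small_dataset),
    "wide_tabular": meta_features(wide_dataset),
}

new_dataset = [[2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
best_match = most_similar(meta_features(new_dataset), catalog)
```

Once the closest stored dataset is known, the hyperparameters that worked well for it become natural starting points for the new one.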

Before going into more detail about metadata stores, let’s look at important features that would help you select the right metadata store.

Metadata needs to be stored for comparability and reproducibility of the experimental ML models. The common metadata stored are the hyperparameters, feature preprocessing steps, model information, and the context of the model. The data needs to be managed as well.

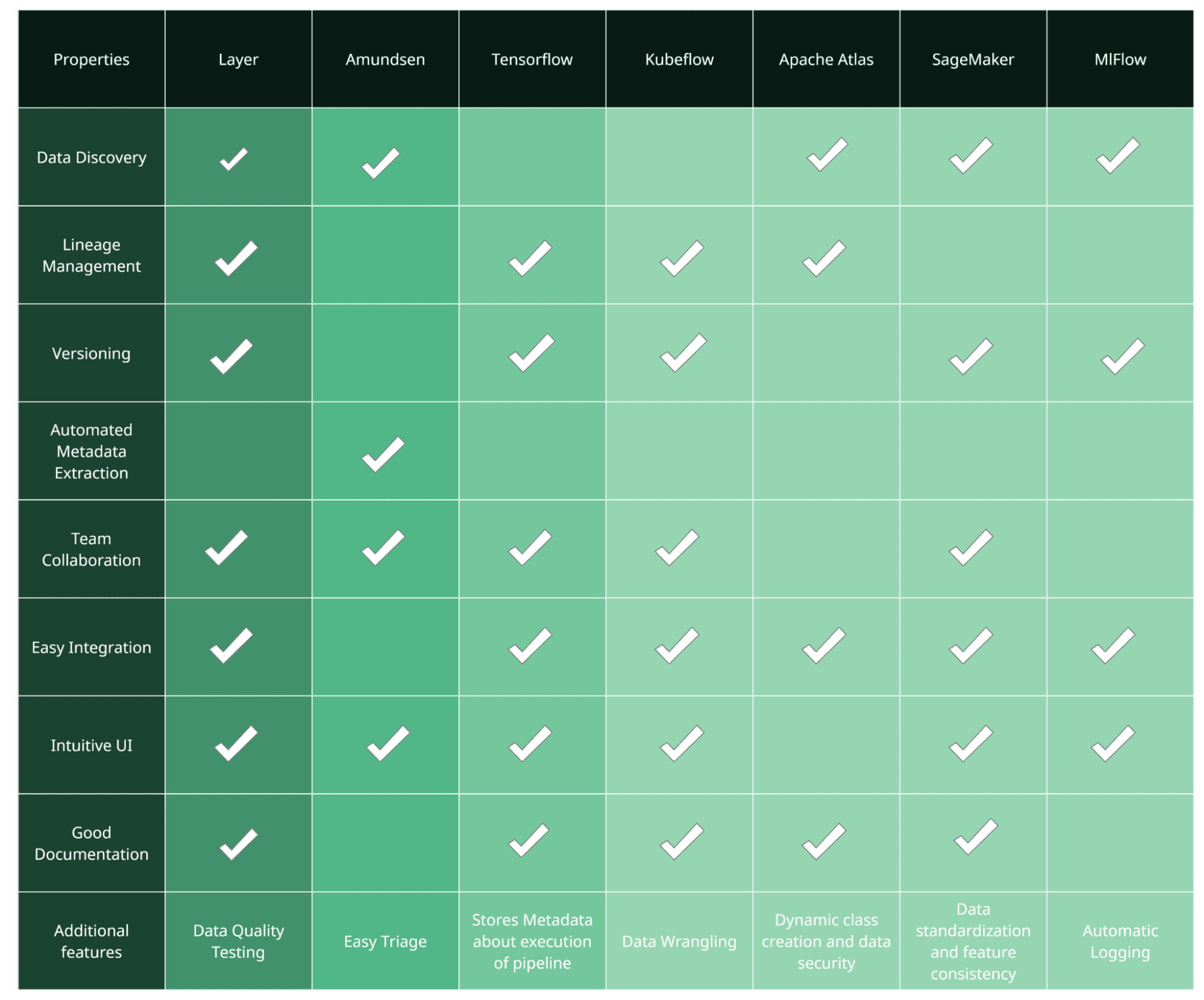

Previously, we also looked into the qualities one might consider for choosing the right metadata store. With all of these in mind, let’s have a look at some of the widely used metadata stores.

Layer provides a central repository for datasets and features to be systematically built, monitored, and evaluated. Layer differs from other metadata stores in being a declarative tool, which enables it to provide automated entity extraction and automated pipelines. In addition, it provides data management, monitoring, and search tools.

Some of its features are:

Layer provides a powerful search for easy discovery of data while adhering to authorization and governance rules. There are two central repositories, one for data and the other for models. The data catalog manages and versions the data and features.

The model catalog keeps all the ML models organized and versioned in a centralized storage space, making it easy to recall a model used in experimentation.

With datasets, schemas changing, files being deleted, and pipelines breaking, auto versioning helps create reproducible ML pipelines. This automatic tracking of lineage between entities streamlines your ML workflow and helps in reproducing the experiments.
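The general idea behind automatic versioning can be sketched with a content hash: the version identifier is derived from the data itself, so any change produces a new version without anyone naming it. This is a generic illustration and not how Layer implements it; `dataset_version` and `VersionLog` are hypothetical names.

```python
import hashlib
import json


def dataset_version(rows):
    """Derive a version id from the dataset's content."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


class VersionLog:
    """Append-only log that records a new version only when content changes."""

    def __init__(self):
        self.versions = []

    def commit(self, rows):
        version = dataset_version(rows)
        if not self.versions or self.versions[-1] != version:
            self.versions.append(version)
        return version


log = VersionLog()
v1 = log.commit([{"age": 31, "label": 1}])
v1_again = log.commit([{"age": 31, "label": 1}])  # unchanged, so no new version
v2 = log.commit([{"age": 31, "label": 0}])        # a label changed, so new version
```

A pipeline that records the version id of each input it consumed can later tell exactly which state of the data an experiment ran against.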

The feature store is unique to Layer. The features are grouped into `featuresets`, which makes them more accessible. These `featuresets` can be dynamically created, deleted, passed as values, or stored. You simply build the features, and Layer lets you serve them online or offline.

Layer ensures good quality data by executing automated tests. It assists you in creating responsive or automated pipelines with Declarative Metadata Management (discussed earlier) to automatically build, test and deploy the data and ML models. This ensures not only quality testing of data but also continuous delivery and deployment.

Layer tracks and manages the data rigorously. Lineage is tracked automatically between versioned and immutable entities (data, models, features, etc.), so the experiments can be reproduced at any given point. Layer also gives a better understanding of previous experiments.

Most of the properties of the Layer Data Catalog work hand in hand with automation. Datasets, featuresets, and ML models are first-class entities, which makes it easy to manage their lifecycle at scale. Layer also has infrastructure-agnostic pipelines that support scaling resources.

Along with empowering the data teams with its features during experimentation, it can also be used to monitor the lifecycle of the entities post-production. Its extensive UI supports tracking drift, monitoring changes, version diffing, etc.

Amundsen is a metadata and data discovery engine. Some of its features are:

ML Metadata or MLMD is a library whose sole purpose is to serve as a Metadata Store. It stores and documents the metadata and, when called, retrieves it from the storage backend with the help of APIs.

Some of its features are:

Kubeflow offers not only a metadata store but also a solution for the entire lifecycle of your enterprise ML workflow.

The component we are interested in here is Kubeflow Metadata. Some of its features are:

Apache Atlas’s metadata store provides features that allow organizations to manage and govern the metadata. Some of its features are:

Amazon SageMaker Feature Store is a fully managed repository to store, update, retrieve, and share machine learning features. It keeps track of the metadata of the stored features.

So if you are keen on storing the metadata of features rather than of the whole pipeline, SageMaker Feature Store is a good fit. Here are some of its other features:

The metadata store in MLflow goes by the name MLflow Tracking. It records and queries the code, data, configurations, and results from the experiments.

MLflow logs parameters, code versions, metrics, key-value input parameters, etc., when running machine learning code, and its API and UI later help in visualizing the results. Some of its features are:

One cannot deny the importance of data in the field of machine learning. A metadata store that has all the essential data about the data is undeniably important. According to different business needs, the right metadata store could vary from organization to organization.

I have collected and summarized the seven metadata stores we discussed earlier. I hope this gives you an overview of some well-known metadata stores and nudges you toward finding the right one. This conclusion is based purely on the information found in the documentation of the metadata stores.

Akruti Acharya is a technical content writer and graduate student at the University of Birmingham.
