Photo Credit: James Owen

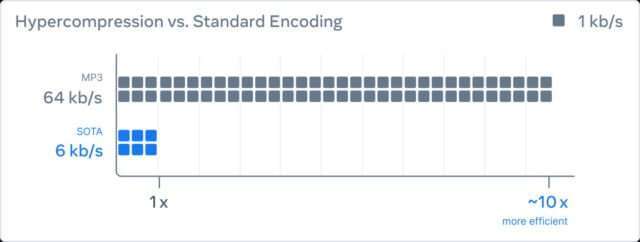

The audio compression method is called ‘EnCodec,’ which Meta debuted on October 25 in a paper titled ‘High Fidelity Neural Audio Compression,’ authored by several Meta researchers. Meta gave a brief summary of the research that went into the compression codec on its blog.

Meta says its method is a three-part system trained to compress audio to a desired target size. The encoder transforms uncompressed data into a lower-frame-rate ‘latent space’ representation. The ‘quantizer’ compresses that representation to the target size while keeping the information needed to rebuild the signal. The compressed signal is then transmitted, and a decoder uses a neural network to turn it back into audio in real time.
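The quantizer step can be illustrated with a small sketch. It assumes a residual vector quantizer (the scheme described in the EnCodec paper), where each stage quantizes the error left over by the previous one. The codebooks here are random toy values rather than learned ones, and the helper names (`make_codebooks`, `rvq_encode`, `rvq_decode`, `bitrate_bps`) are hypothetical.

```python
import math
import random

# Toy residual vector quantizer (RVQ). Codebooks are random, not learned;
# in a real codec they would be trained jointly with the encoder and decoder.

def make_codebooks(n_stages, size, dim, seed=0):
    """Build hypothetical codebooks; later stages use smaller entries to refine residuals."""
    rng = random.Random(seed)
    books = []
    for stage in range(n_stages):
        scale = 1.0 / (2 ** stage)
        book = [[0.0] * dim]  # zero entry: a stage can never make the error worse
        book += [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(size - 1)]
        books.append(book)
    return books

def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)))

def rvq_encode(vec, codebooks):
    """Quantize vec stage by stage; each stage encodes what earlier stages missed."""
    residual = list(vec)
    codes = []
    for book in codebooks:
        idx = nearest(book, residual)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, book[idx])]
    return codes

def rvq_decode(codes, codebooks):
    """Reconstruct the latent vector by summing the selected codebook entries."""
    out = [0.0] * len(codebooks[0][0])
    for idx, book in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, book[idx])]
    return out

def bitrate_bps(frames_per_second, n_codebooks, codebook_size):
    """Bits per second when every frame sends one index per codebook."""
    return frames_per_second * n_codebooks * math.log2(codebook_size)

if __name__ == "__main__":
    books = make_codebooks(n_stages=4, size=16, dim=4)
    latent = [0.3, -1.2, 0.8, 0.05]  # stand-in for one encoder output frame
    for k in range(1, len(books) + 1):
        approx = rvq_decode(rvq_encode(latent, books[:k]), books[:k])
        err = sum((a - b) ** 2 for a, b in zip(latent, approx))
        print(f"{k} codebook(s): squared error {err:.4f}")
```

Using more codebooks lowers the reconstruction error but costs more bits. Assuming, for illustration, 75 frames per second and 1,024-entry codebooks, `bitrate_bps(75, 8, 1024)` works out to 6,000 bits per second, so dropping or adding codebooks is one way a single model could hit different target sizes.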

“To do so, we use discriminators to improve the perceptual quality of the generated samples. This creates a cat-and-mouse game where the discriminator’s job is to differentiate between real samples and reconstructed samples. The compression model attempts to generate samples to fool the discriminators by pushing the reconstructed samples to be more perceptually similar to the original samples.”
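The cat-and-mouse game in the quote can be sketched as two opposing loss functions. This sketch assumes hinge-style adversarial losses averaged over several discriminators, a common choice for GAN-trained audio models; the function names and example scores are hypothetical, and a real codec would combine these with other loss terms such as reconstruction error.

```python
# Each list holds one score per discriminator (higher = "looks real").

def discriminator_loss(scores_real, scores_fake):
    """Hinge loss: penalize scores on real audio below +1 and on reconstructions above -1."""
    k = len(scores_real)
    return sum(max(0.0, 1 - r) + max(0.0, 1 + f)
               for r, f in zip(scores_real, scores_fake)) / k

def generator_loss(scores_fake):
    """The compression model is penalized until discriminators score its output as real."""
    return sum(max(0.0, 1 - f) for f in scores_fake) / len(scores_fake)

if __name__ == "__main__":
    real = [1.5, 0.5]    # hypothetical scores for original samples
    fake = [-2.0, 0.0]   # hypothetical scores for reconstructed samples
    print(discriminator_loss(real, fake))  # discriminator wants this near 0
    print(generator_loss(fake))            # compression model wants this near 0
```

The two objectives pull in opposite directions: lowering `generator_loss` requires reconstructions that the discriminators score as real, which raises `discriminator_loss`.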

Meta researchers claim they are the first group to apply a neural network for audio compression to 48 kHz stereo audio, just a hair above CD’s 44.1 kHz sampling rate. Meta seems to be aiming the technology at delivering voice calls over poor network connections with smaller files, but there are metaverse applications too.
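Some back-of-the-envelope arithmetic puts the 48 kHz figure in context. This sketch assumes uncompressed 16-bit PCM for both formats (CD audio is 16-bit; 16-bit at 48 kHz is an assumption here) and a hypothetical 6 kbps compressed target; it is not a measurement of EnCodec itself.

```python
def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Uncompressed PCM bitrate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000

cd = pcm_bitrate_kbps(44_100, 16, 2)   # CD audio: 1411.2 kbps
hi = pcm_bitrate_kbps(48_000, 16, 2)   # 48 kHz stereo at an assumed 16 bits: 1536.0 kbps
target_kbps = 6                        # hypothetical compressed target

print(f"48 kHz vs CD sample rate: {48_000 / 44_100:.3f}x")
print(f"raw 48 kHz stereo: {hi} kbps vs CD: {cd} kbps")
print(f"compression ratio at {target_kbps} kbps: {hi / target_kbps:.0f}:1")
```

The sample-rate increase over CD is under 9 percent; the harder part is squeezing roughly 1.5 Mbps of raw stereo audio into a few kilobits per second.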

Researchers say EnCodec could eventually deliver “rich metaverse experiences without requiring major bandwidth improvements.” The technology is still at the research stage, but Meta appears focused on new ways to deliver high-quality audio across a network, no matter the conditions.