Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

An “earable” that uses sonar to read facial expressions was among the projects that caught our eyes over these past few weeks. So did ProcTHOR, a framework from the Allen Institute for AI (AI2) that procedurally generates environments that can be used to train real-world robots. Among the other highlights, Meta created an AI system that can predict a protein’s structure given a single amino acid sequence. And researchers at MIT developed new hardware that they claim offers faster computation for AI with less energy.

The “earable,” which was developed by a team at Cornell, looks something like a pair of bulky headphones. Speakers send acoustic signals to the sides of a wearer’s face, while a microphone picks up the barely detectable echoes created by the nose, lips, eyes, and other facial features. These “echo profiles” enable the earable to capture movements like eyebrows raising and eyes darting, which an AI algorithm translates into complete facial expressions.

Image Credits: Cornell

The earable has a few limitations. It only lasts three hours on battery and has to offload processing to a smartphone, and the echo-translating AI algorithm must train on 32 minutes of facial data before it can begin recognizing expressions. But the researchers make the case that it’s a much sleeker experience than the recorders traditionally used in animations for movies, TV, and video games. For example, for the mystery game L.A. Noire, Rockstar Games built a rig with 32 cameras trained on each actor’s face.

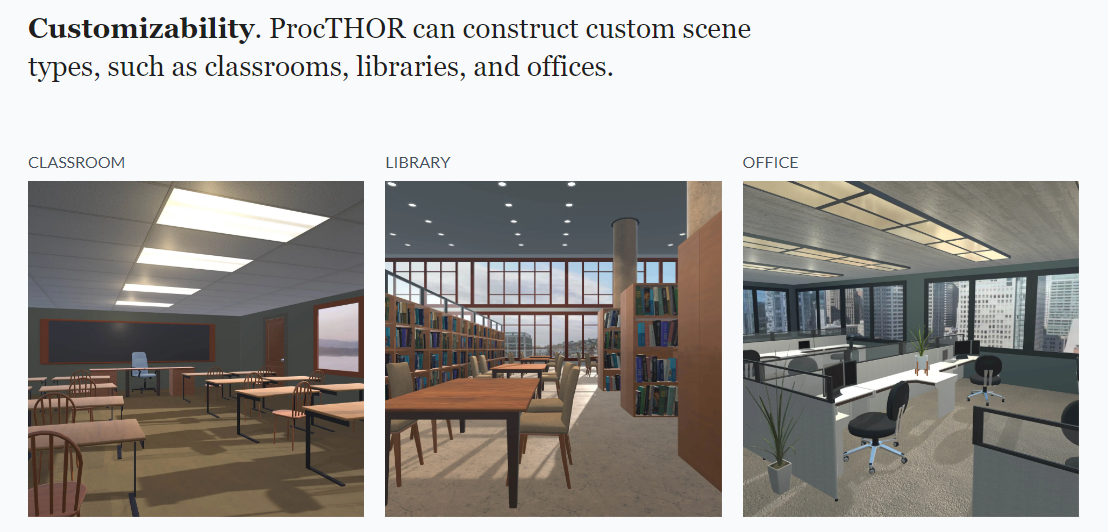

Perhaps someday, Cornell’s earable will be used to create animations for humanoid robots. But those robots will have to learn how to navigate a room first. Fortunately, AI2’s ProcTHOR takes a step (no pun intended) in this direction, creating thousands of custom scenes including classrooms, libraries, and offices in which simulated robots must complete tasks, like picking up objects and moving around furniture.

The idea behind the scenes, which have simulated lighting and contain a subset of a massive array of surface materials (e.g., wood, tile, etc.) and household objects, is to expose the simulated robots to as much variety as possible. It’s a well-established theory in AI that performance in simulated environments can improve the performance of real-world systems; autonomous car companies like Alphabet’s Waymo simulate entire neighborhoods to fine-tune how their real-world cars behave.
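To make the idea of procedural generation concrete, here is a minimal sketch of how a seeded generator might sample varied scenes. It is not ProcTHOR's actual API: the `generate_scene` function, the object list, and the lighting range are all hypothetical, and only the room types and the two example materials come from the description above.

```python
import random

# Room types and materials named in the article; the object list is made up.
ROOM_TYPES = ["classroom", "library", "office"]
MATERIALS = ["wood", "tile"]
OBJECTS = ["chair", "table", "lamp", "bookshelf", "mug"]  # hypothetical


def generate_scene(seed, n_objects=3):
    """Sample one scene description; the same seed always gives the same scene."""
    rng = random.Random(seed)  # private generator, so scenes are reproducible
    return {
        "room_type": rng.choice(ROOM_TYPES),
        "floor_material": rng.choice(MATERIALS),
        # Hypothetical lighting range, just to vary the simulated lighting.
        "light_intensity": round(rng.uniform(0.3, 1.0), 2),
        # Draw a subset of the available objects without repeats.
        "objects": rng.sample(OBJECTS, n_objects),
    }


# One scene per seed: a thousand seeds give a thousand training environments.
scenes = [generate_scene(seed) for seed in range(1000)]
```

Because each scene is derived from a seed, a training run can be reproduced exactly, and adding more environments is just a matter of widening the seed range.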

Image Credits: Allen Institute for Artificial Intelligence

As for ProcTHOR, AI2 claims in a paper that scaling the number of training environments consistently improves performance. That bodes well for robots bound for homes, workplaces, and elsewhere.

Of course, training these types of systems requires a lot of compute power. But that might not be the case forever. Researchers at MIT say they’ve created an “analog” processor that can be used to create superfast networks of “neurons” and “synapses,” which in turn can be used to perform tasks like recognizing images, translating languages, and more.

The researchers’ processor uses “protonic programmable resistors” arranged in an array to “learn” skills. Increasing and decreasing the electrical conductance of the resistors mimics the strengthening and weakening of synapses between neurons in the brain, a part of the learning process.

The conductance is controlled by an electrolyte that governs the movement of protons. When more protons are pushed into a channel in the resistor, the conductance increases. When protons are removed, the conductance decreases.
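As a rough illustration of why an array of programmable resistors can act like a layer of synapses, the toy model below treats each resistor as an adjustable conductance. It is not the MIT team's design: the `ResistorArray` class, the step size, and the conductance limits are all assumptions. It relies only on Ohm's law (current equals conductance times voltage) and on the fact that currents meeting on a shared output line add up.

```python
class ResistorArray:
    """Toy grid of programmable resistors; all numeric values are hypothetical."""

    def __init__(self, rows, cols, g_min=0.0, g_max=1.0, step=0.1):
        self.g_min, self.g_max, self.step = g_min, g_max, step
        start = (g_min + g_max) / 2.0
        # conductance[r][c] links input line r to output line c.
        self.conductance = [[start] * cols for _ in range(rows)]

    def pulse(self, row, col, protons_in):
        """Push protons in (conductance rises) or pull them out (it falls)."""
        delta = self.step if protons_in else -self.step
        updated = self.conductance[row][col] + delta
        # Keep the value inside the assumed physical limits.
        self.conductance[row][col] = min(self.g_max, max(self.g_min, updated))

    def output_currents(self, voltages):
        """Ohm's law per resistor (I = G * V), summed along each output line."""
        cols = len(self.conductance[0])
        return [
            sum(v * g_row[c] for v, g_row in zip(voltages, self.conductance))
            for c in range(cols)
        ]


array = ResistorArray(rows=2, cols=2)
array.pulse(0, 0, protons_in=True)          # strengthen one "synapse"
currents = array.output_currents([1.0, 1.0])
```

In this picture the weighted sum a neural network layer needs falls out of the physics of the circuit, with `pulse` standing in for the learning updates that strengthen or weaken individual connections.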

Processor on a computer circuit board

An inorganic material, phosphosilicate glass, makes the MIT team’s processor extremely fast because it contains nanometer-sized pores whose surfaces provide the perfect paths for proton diffusion. As an added benefit, the glass can run at room temperature, and it isn’t damaged by the protons as they move along the pores.

“Once you have an analog processor, you will no longer be training networks everyone else is working on,” lead author and MIT postdoc Murat Onen was quoted as saying in a press release. “You will be training networks with unprecedented complexities that no one else can afford to, and therefore vastly outperform them all. In other words, this is not a faster car, this is a spacecraft.”

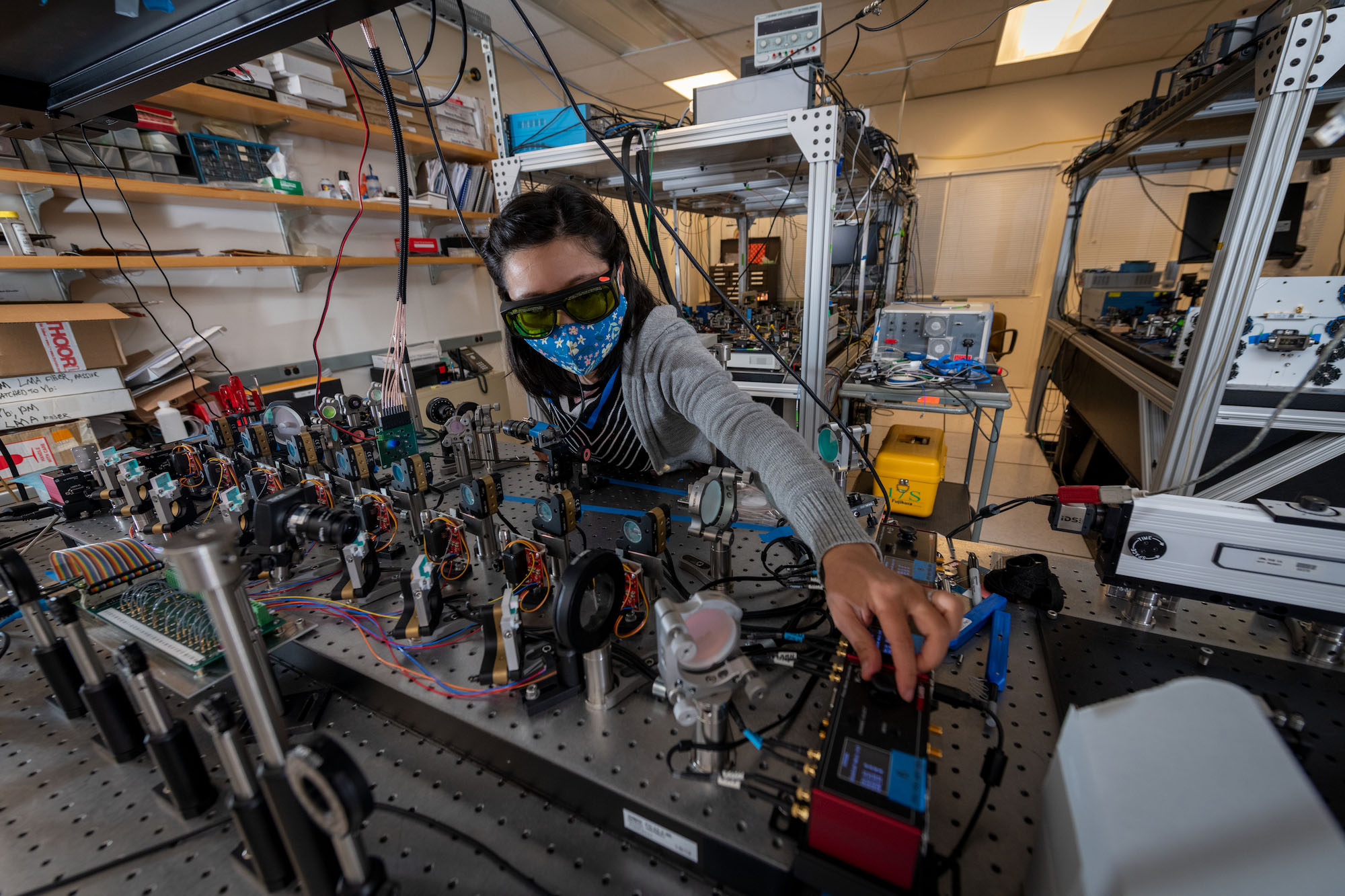

Speaking of acceleration, machine learning is now being put to use managing particle accelerators, at least in experimental form. At Lawrence Berkeley National Lab, two teams have shown that ML-based simulation of the full machine and beam gives them highly precise predictions, as much as 10 times better than ordinary statistical analysis.

Image Credits: Thor Swift/Berkeley Lab

“If you can predict the beam properties with an accuracy that surpasses their fluctuations, you can then use the prediction to increase the performance of the accelerator,” said the lab’s Daniele Filippetto. It’s no small feat to simulate all the physics and equipment involved, but surprisingly the various teams’ early efforts to do so yielded promising results.

And over at Oak Ridge National Lab, an AI-powered platform is letting researchers do Hyperspectral Computed Tomography using neutron scattering, finding optimal… maybe we should just let them explain.

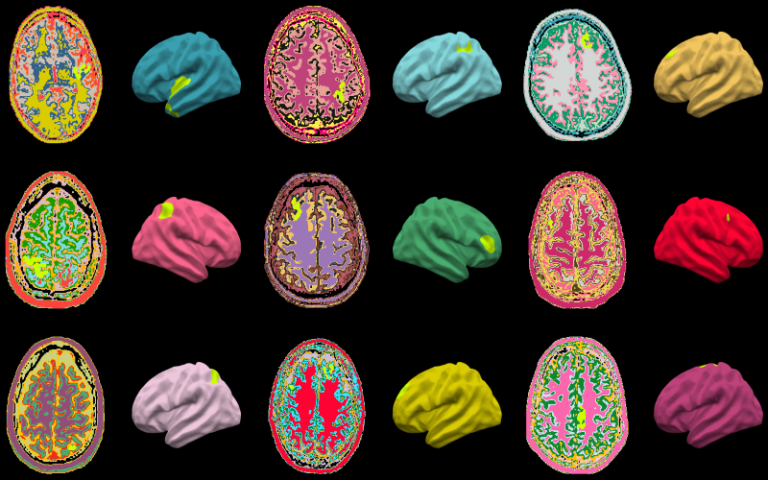

In the medical world, there’s a new application of machine learning-based image analysis in the field of neurology, where researchers at University College London have trained a model to detect early signs of epilepsy-causing brain lesions.

MRIs of brains used to train the UCL algorithm.

One frequent cause of drug-resistant epilepsy is what is known as a focal cortical dysplasia (FCD), a region of the brain that has developed abnormally but for whatever reason doesn’t appear obviously abnormal in MRI. Detecting it early can be extremely helpful, so the UCL team trained an MRI inspection model called Multicentre Epilepsy Lesion Detection (MELD) on thousands of examples of healthy and FCD-affected brain regions.

The model was able to detect two-thirds of the FCDs it was shown, which is actually quite good as the signs are very subtle. In fact, it found 178 cases where doctors were unable to locate an FCD but it could. Naturally the final say goes to the specialists, but a computer hinting that something might be wrong can sometimes be all it takes for them to look closer and reach a confident diagnosis.

“We put an emphasis on creating an AI algorithm that was interpretable and could help doctors make decisions. Showing doctors how the MELD algorithm made its predictions was an essential part of that process,” said UCL’s Mathilde Ripart.