A brain-computer interface deciphers commands intended for the vocal tract

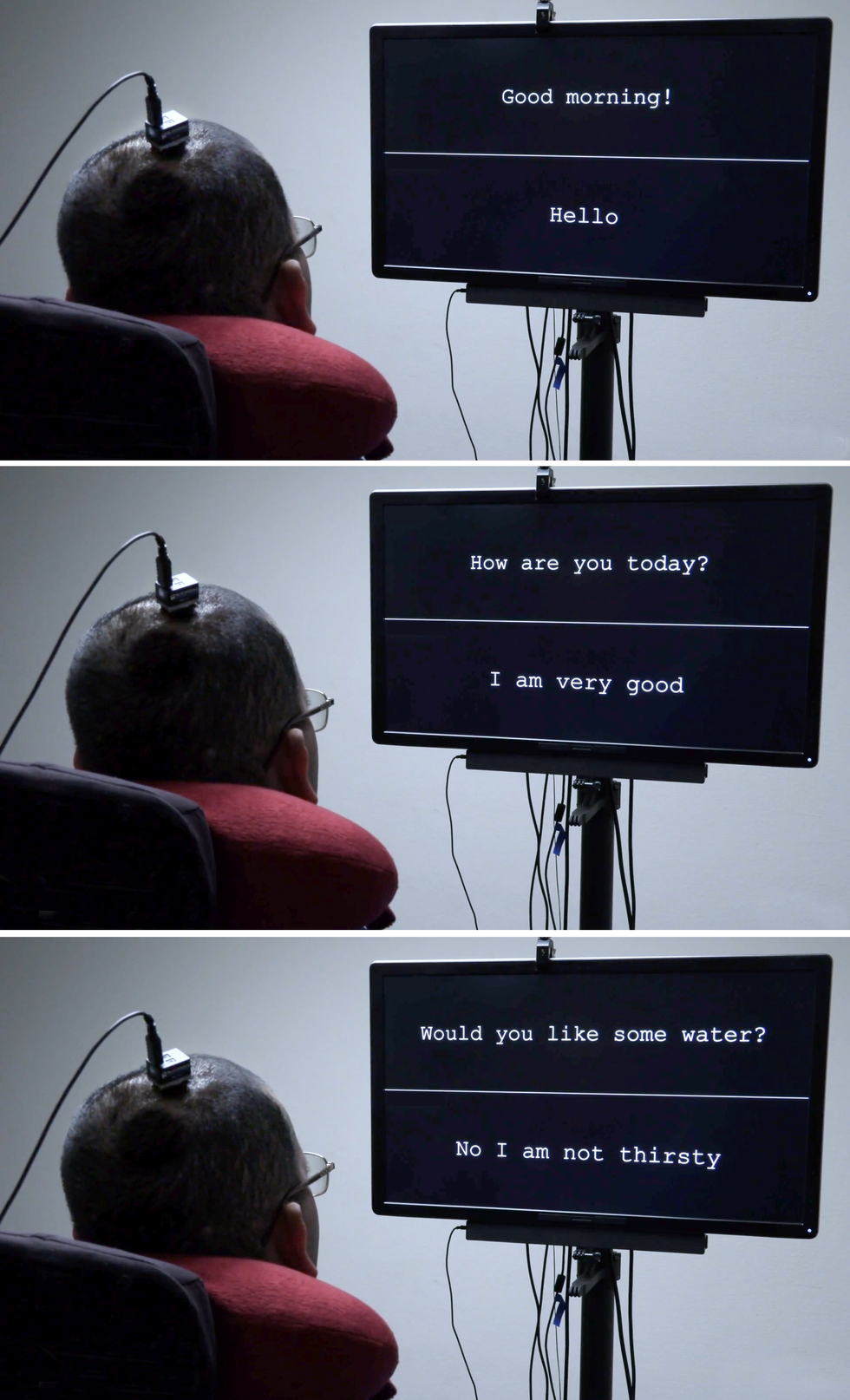

A paralyzed man who hasn’t spoken in 15 years uses a brain-computer interface that decodes his intended speech, one word at a time.

A computer screen shows the question “Would you like some water?” Underneath, three dots blink, followed by words that appear, one at a time: “No I am not thirsty.”

It was brain activity that made those words materialize—the brain of a man who has not spoken for more than 15 years, ever since a stroke damaged the connection between his brain and the rest of his body, leaving him mostly paralyzed. He has used many other technologies to communicate; most recently, he used a pointer attached to his baseball cap to tap out words on a touchscreen, a method that was effective but slow. He volunteered for my research group’s clinical trial at the University of California, San Francisco in hopes of pioneering a faster method. So far, he has used the brain-to-text system only during research sessions, but he wants to help develop the technology into something that people like himself could use in their everyday lives.

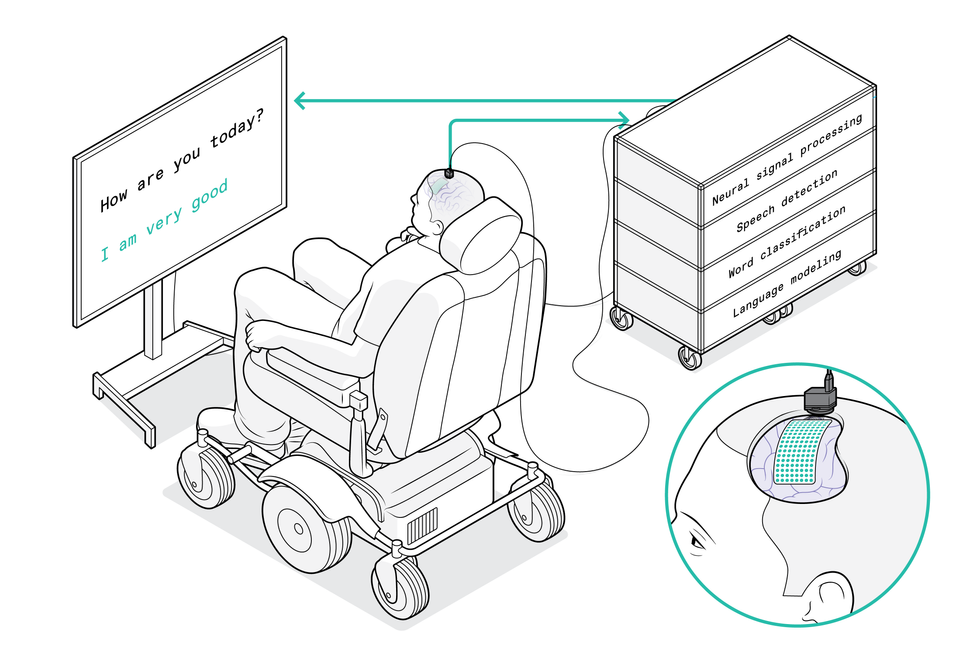

In our pilot study, we draped a thin, flexible electrode array over the surface of the volunteer’s brain. The electrodes recorded neural signals and sent them to a speech decoder, which translated the signals into the words the man intended to say. It was the first time a paralyzed person who couldn’t speak had used neurotechnology to broadcast whole words—not just letters—from the brain.

That trial was the culmination of more than a decade of research on the underlying brain mechanisms that govern speech, and we’re enormously proud of what we’ve accomplished so far. But we’re just getting started. My lab at UCSF is working with colleagues around the world to make this technology safe, stable, and reliable enough for everyday use at home. We’re also working to improve the system’s performance so it will be worth the effort.

Neuroprosthetics have come a long way in the past two decades. Prosthetic implants for hearing have advanced the furthest, with designs that interface with the cochlear nerve of the inner ear or directly into the auditory brain stem. There’s also considerable research on retinal and brain implants for vision, as well as efforts to give people with prosthetic hands a sense of touch. All of these sensory prosthetics take information from the outside world and convert it into electrical signals that feed into the brain’s processing centers.

The opposite kind of neuroprosthetic records the electrical activity of the brain and converts it into signals that control something in the outside world, such as a robotic arm, a video-game controller, or a cursor on a computer screen. That last control modality has been used by groups such as the BrainGate consortium to enable paralyzed people to type words—sometimes one letter at a time, sometimes using an autocomplete function to speed up the process.

For that typing-by-brain function, an implant is typically placed in the motor cortex, the part of the brain that controls movement. Then the user imagines certain physical actions to control a cursor that moves over a virtual keyboard. Another approach, pioneered by some of my collaborators in a 2021 paper, had one user imagine that he was holding a pen to paper and was writing letters, creating signals in the motor cortex that were translated into text. That approach set a new record for speed, enabling the volunteer to write about 18 words per minute.

In my lab’s research, we’ve taken a more ambitious approach. Instead of decoding a user’s intent to move a cursor or a pen, we decode the intent to control the vocal tract, comprising dozens of muscles governing the larynx (commonly called the voice box), the tongue, and the lips.

I began working in this area more than 10 years ago. As a neurosurgeon, I would often see patients with severe injuries that left them unable to speak. To my surprise, in many cases the locations of brain injuries didn’t match up with the syndromes I learned about in medical school, and I realized that we still have a lot to learn about how language is processed in the brain. I decided to study the underlying neurobiology of language and, if possible, to develop a brain-machine interface (BMI) to restore communication for people who have lost it. In addition to my neurosurgical background, my team has expertise in linguistics, electrical engineering, computer science, bioengineering, and medicine. Our ongoing clinical trial is testing both hardware and software to explore the limits of our BMI and determine what kind of speech we can restore to people.

Speech is one of the behaviors that sets humans apart. Plenty of other species vocalize, but only humans combine a set of sounds in myriad different ways to represent the world around them. It’s also an extraordinarily complicated motor act—some experts believe it’s the most complex motor action that people perform. Speaking is a product of modulated air flow through the vocal tract; with every utterance we shape the breath by creating audible vibrations in our laryngeal vocal folds and changing the shape of the lips, jaw, and tongue.

Many of the muscles of the vocal tract are quite unlike the joint-based muscles such as those in the arms and legs, which can move in only a few prescribed ways. For example, the muscle that controls the lips is a sphincter, while the muscles that make up the tongue are governed more by hydraulics—the tongue is largely composed of a fixed volume of muscular tissue, so moving one part of the tongue changes its shape elsewhere. The physics governing the movements of such muscles is totally different from that of the biceps or hamstrings.

Because there are so many muscles involved and they each have so many degrees of freedom, there’s essentially an infinite number of possible configurations. But when people speak, it turns out they use a relatively small set of core movements (which differ somewhat in different languages). For example, when English speakers make the “d” sound, they put their tongues behind their teeth; when they make the “k” sound, the backs of their tongues go up to touch the ceiling of the back of the mouth. Few people are conscious of the precise, complex, and coordinated muscle actions required to say the simplest word.

My research group focuses on the parts of the brain’s motor cortex that send movement commands to the muscles of the face, throat, mouth, and tongue. Those brain regions are multitaskers: They manage muscle movements that produce speech and also the movements of those same muscles for swallowing, smiling, and kissing.

Studying the neural activity of those regions in a useful way requires both spatial resolution on the scale of millimeters and temporal resolution on the scale of milliseconds. Historically, noninvasive imaging systems have been able to provide one or the other, but not both. When we started this research, we found remarkably little data on how brain activity patterns were associated with even the simplest components of speech: phonemes and syllables.

Here we owe a debt of gratitude to our volunteers. At the UCSF epilepsy center, patients preparing for surgery typically have electrodes surgically placed over the surfaces of their brains for several days so we can map the regions involved when they have seizures. During those few days of wired-up downtime, many patients volunteer for neurological research experiments that make use of the electrode recordings from their brains. My group asked patients to let us study their patterns of neural activity while they spoke words.

The hardware involved is called electrocorticography (ECoG). The electrodes in an ECoG system don’t penetrate the brain but lie on the surface of it. Our arrays can contain several hundred electrode sensors, each of which records from thousands of neurons. So far, we’ve used an array with 256 channels. Our goal in those early studies was to discover the patterns of cortical activity when people speak simple syllables. We asked volunteers to say specific sounds and words while we recorded their neural patterns and tracked the movements of their tongues and mouths. Sometimes we did so by having them wear colored face paint and using a computer-vision system to extract the kinematic gestures; other times we used an ultrasound machine positioned under the patients’ jaws to image their moving tongues.

We used these systems to match neural patterns to movements of the vocal tract. At first we had a lot of questions about the neural code. One possibility was that neural activity encoded directions for particular muscles, and the brain essentially turned these muscles on and off as if pressing keys on a keyboard. Another idea was that the code determined the velocity of the muscle contractions. Yet another was that neural activity corresponded with coordinated patterns of muscle contractions used to produce a certain sound. (For example, to make the “aaah” sound, both the tongue and the jaw need to drop.) What we discovered was that there is a map of representations that controls different parts of the vocal tract, and that together the different brain areas combine in a coordinated manner to give rise to fluent speech.

Our work depends on the advances in artificial intelligence over the past decade. We can feed the data we collected about both neural activity and the kinematics of speech into a neural network, then let the machine-learning algorithm find patterns in the associations between the two data sets. It was possible to make connections between neural activity and produced speech, and to use this model to produce computer-generated speech or text. But this technique couldn’t train an algorithm for paralyzed people because we’d lack half of the data: We’d have the neural patterns, but nothing about the corresponding muscle movements.

The smarter way to use machine learning, we realized, was to break the problem into two steps. First, the decoder translates signals from the brain into intended movements of muscles in the vocal tract; then it translates those intended movements into synthesized speech or text.

We call this a biomimetic approach because it copies biology; in the human body, neural activity is directly responsible for the vocal tract’s movements and is only indirectly responsible for the sounds produced. A big advantage of this approach comes in the training of the decoder for that second step of translating muscle movements into sounds. Because those relationships between vocal tract movements and sound are fairly universal, we were able to train the decoder on large data sets derived from people who weren’t paralyzed.
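The two-step structure can be illustrated with a toy sketch. It is not the lab's decoder: it swaps the neural networks described in the article for plain least-squares linear maps, runs on synthetic data, and uses hypothetical feature counts and names (`N_KINEMATIC`, `N_ACOUSTIC`, `fit_linear`, `decode`). How neural and movement pairs would be obtained for the first stage is not addressed here. The point is only that the second stage can be fit on a separate, larger pool of data and then chained after the first.

```python
import numpy as np

rng = np.random.default_rng(0)

N_CHANNELS = 256   # the article mentions a 256-channel array
N_KINEMATIC = 12   # hypothetical number of vocal-tract movement features
N_ACOUSTIC = 8     # hypothetical number of sound features


def fit_linear(X, Y):
    """Return least-squares weights W such that X @ W approximates Y."""
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return W


# Synthetic "true" mappings, used only to generate toy training data.
true_stage1 = rng.normal(size=(N_CHANNELS, N_KINEMATIC))
true_stage2 = rng.normal(size=(N_KINEMATIC, N_ACOUSTIC))

# Stage 1 data: neural activity paired with intended movements.
neural = rng.normal(size=(500, N_CHANNELS))
movements = neural @ true_stage1

# Stage 2 data: movements paired with sounds, drawn from a separate,
# larger pool (standing in for recordings of other speakers).
other_movements = rng.normal(size=(2000, N_KINEMATIC))
other_sounds = other_movements @ true_stage2

W1 = fit_linear(neural, movements)             # brain -> movements
W2 = fit_linear(other_movements, other_sounds)  # movements -> sound


def decode(neural_frames):
    """Chain the two stages: neural signals -> movements -> sound features."""
    intended = neural_frames @ W1
    return intended @ W2


new_neural = rng.normal(size=(5, N_CHANNELS))
predicted = decode(new_neural)
expected = new_neural @ true_stage1 @ true_stage2
```

Because the toy data is noiseless and linear, `predicted` matches `expected` to numerical precision; real neural recordings are noisy and nonlinear, which is why the article's approach relies on neural networks instead.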

The next big challenge was to bring the technology to the people who could really benefit from it.

The National Institutes of Health (NIH) is funding our pilot trial, which began in 2021. We already have two paralyzed volunteers with implanted ECoG arrays, and we hope to enroll more in the coming years. The primary goal is to improve their communication, and we’re measuring performance in terms of words per minute. An average adult typing on a full keyboard can type 40 words per minute, with the fastest typists reaching speeds of more than 80 words per minute.

We think that tapping into the speech system can provide even better results. Human speech is much faster than typing: An English speaker can easily say 150 words in a minute. We’d like to enable paralyzed people to communicate at a rate of 100 words per minute. We have a lot of work to do to reach that goal, but we think our approach makes it a feasible target.

The implant procedure is routine. First the surgeon removes a small portion of the skull; next, the flexible ECoG array is gently placed across the surface of the cortex. Then a small port is fixed to the skull bone and exits through a separate opening in the scalp. We currently need that port, which attaches to external wires to transmit data from the electrodes, but we hope to make the system wireless in the future.

We’ve considered using penetrating microelectrodes, because they can record from smaller neural populations and may therefore provide more detail about neural activity. But the current hardware isn’t as robust and safe as ECoG for clinical applications, especially over many years.

Another consideration is that penetrating electrodes typically require daily recalibration to turn the neural signals into clear commands, and research on neural devices has shown that speed of setup and performance reliability are key to getting people to use the technology. That’s why we’ve prioritized stability in creating a “plug and play” system for long-term use. We conducted a study looking at the variability of a volunteer’s neural signals over time and found that the decoder performed better if it used data patterns across multiple sessions and multiple days. In machine-learning terms, we say that the decoder’s “weights” carried over, creating consolidated neural signals.

Because our paralyzed volunteers can’t speak while we watch their brain patterns, we asked our first volunteer to try two different approaches. He started with a list of 50 words that are handy for daily life, such as “hungry,” “thirsty,” “please,” “help,” and “computer.” During 48 sessions over several months, we sometimes asked him to just imagine saying each of the words on the list, and sometimes asked him to overtly try to say them. We found that attempts to speak generated clearer brain signals and were sufficient to train the decoding algorithm. Then the volunteer could use those words from the list to generate sentences of his own choosing, such as “No I am not thirsty.”
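Decoding from a fixed word list can be pictured as a classification problem: learn one signal pattern per word during training sessions, then pick the closest pattern for each new attempt. The sketch below is a hypothetical illustration on synthetic data, not the study's algorithm (the article does not describe the classifier). It uses a nearest-template rule, a nine-word subset of the vocabulary, and made-up names such as `simulate_attempt` and `decode_word`.

```python
import numpy as np

rng = np.random.default_rng(1)

# A small subset of the 50-word list, for illustration only.
VOCAB = ["no", "I", "am", "not", "thirsty", "hungry", "please", "help", "computer"]
N_CHANNELS = 256

# Synthetic stand-in for the brain activity each attempted word evokes.
true_patterns = {word: rng.normal(size=N_CHANNELS) for word in VOCAB}


def simulate_attempt(word, noise=0.3):
    """Return a noisy synthetic recording of one attempt to say `word`."""
    return true_patterns[word] + noise * rng.normal(size=N_CHANNELS)


# "Training": average ten attempts per word into a template.
templates = {
    word: np.mean([simulate_attempt(word) for _ in range(10)], axis=0)
    for word in VOCAB
}


def decode_word(signal):
    """Pick the vocabulary word whose template is closest to the signal."""
    return min(VOCAB, key=lambda w: np.linalg.norm(signal - templates[w]))


intended = ["no", "I", "am", "not", "thirsty"]
decoded = [decode_word(simulate_attempt(w)) for w in intended]
sentence = " ".join(decoded)
```

With patterns this well separated the toy decoder recovers every word; real signals overlap far more, which is one reason expanding the vocabulary is hard.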

We’re now pushing to expand to a broader vocabulary. To make that work, we need to continue to improve the current algorithms and interfaces, but I am confident those improvements will happen in the coming months and years. Now that the proof of principle has been established, the goal is optimization. We can focus on making our system faster, more accurate, and, most important, safer and more reliable. Things should move quickly now.

Probably the biggest breakthroughs will come if we can get a better understanding of the brain systems we’re trying to decode, and how paralysis alters their activity. We’ve come to realize that the neural patterns of a paralyzed person who can’t send commands to the muscles of their vocal tract are very different from those of an epilepsy patient who can. We’re attempting an ambitious feat of BMI engineering while there is still lots to learn about the underlying neuroscience. We believe it will all come together to give our patients their voices back.

Edward Chang, the chair of neurological surgery at University of California, San Francisco, is developing brain-computer interface technology for people who have lost the ability to speak. His lab works on decoding brain signals associated with commands to the vocal tract, a project that requires not only today’s best neurotechnology hardware, but also powerful machine learning models.

Frequency combs are key to optical chips that could cut Internet power consumption

Charles Q. Choi is a science reporter who contributes regularly to IEEE Spectrum. He has written for Scientific American, The New York Times, Wired, and Science, among others.

This is an illustration of a microring resonator with an integrated heater element, which is key to generating the frequency comb. The red graph represents the light pulse circulating inside the microring resonator.

A record-breaking optical chip can transmit 1.8 petabits (1.8 million gigabits) per second, roughly twice the traffic carried each second by the entire world's Internet, a new study finds.

Previous research transmitted up to 10.66 petabits per second over fiber optics. However, these experiments relied on bulky electronics. A compact microchip-based strategy could enable mass production and result in smaller footprints, lower costs, and lower energy consumption. Until now, the fastest single optical microchip supported data rates of 661 terabits—661,000 gigabits—per second.

The new chip uses the light from a single infrared laser to create a rainbow spectrum of many colors. All the resulting frequencies of light are a fixed frequency distance from one another, a bit like the teeth of a comb, which is why the device is called a frequency comb. Each frequency can be isolated and have properties such as its amplitude modulated to carry data. The frequencies can then be collected together and transmitted simultaneously over fiber optics.
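The "teeth" of a comb can be written as f_n = f_0 + n·Δf. The short sketch below generates a few such lines and converts them to wavelengths. The 100-gigahertz spacing is an assumption chosen purely for illustration and is not a figure from the study; the starting point of 1,610 nanometers is the long-wavelength edge of the band reported for the chip, and the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def comb_frequencies_hz(start_hz, spacing_hz, count):
    """Return `count` comb lines spaced evenly in frequency."""
    return [start_hz + n * spacing_hz for n in range(count)]


def wavelength_nm(freq_hz):
    """Convert an optical frequency to its vacuum wavelength in nanometers."""
    return C / freq_hz * 1e9


start_hz = C / 1610e-9   # about 186 THz
spacing_hz = 100e9       # ASSUMED spacing, not taken from the study

lines = comb_frequencies_hz(start_hz, spacing_hz, 5)
freq_gaps = [b - a for a, b in zip(lines, lines[1:])]

wavelengths = [wavelength_nm(f) for f in lines]
wavelength_gaps = [a - b for a, b in zip(wavelengths, wavelengths[1:])]
```

The lines are evenly spaced in frequency, but the corresponding wavelength gaps shrink slightly toward shorter wavelengths, which is why the comb is described in terms of frequency rather than color.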

“Several groups worldwide have shown that frequency combs can be used for data transmission instead of using individual lasers,” says study senior author Leif Katsuo Oxenløwe, an optical communications researcher at the Technical University of Denmark.

The new microchip emits light roughly 1,530 to 1,610 nanometers in wavelength. These fall into the C and L bands of telecommunications frequencies, two of five wavelength bands where optical fibers experience minimal signal loss.

In experiments, the scientists achieved 1.84 petabits per second over a 7.9-kilometer-long optical fiber using 223 wavelength channels. “This is the first time it is investigated just how much data a single frequency comb can carry,” Oxenløwe says.

Using conventional equipment, this data rate would require about 1,000 lasers, the researchers say. The new microchip could therefore help significantly reduce Internet power consumption. “You could save a thousand lasers out of your energy budget,” Oxenløwe says.

In addition, a computational model the scientists developed to examine the potential of frequency combs suggests that a single chip could achieve a data rate of up to 100 petabits per second if given a cable with thousands of fibers. “You can already today buy cables with thousands of fibers in them to transport large quantities of data around in data centers, so scaling to such numbers is actually realistic,” Oxenløwe says.
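The figures quoted in this article can be put side by side with a little arithmetic. The sketch below uses only numbers stated here; the per-channel value is a simple average over the 223 wavelength channels and says nothing about how the data was actually distributed within the fiber. Variable names are illustrative.

```python
PETA, TERA, GIGA = 10**15, 10**12, 10**9

record_bps = 1.84 * PETA          # measured rate over the 7.9-km fiber
previous_chip_bps = 661 * TERA    # earlier single-chip record
projected_bps = 100 * PETA        # model's estimate with thousands of fibers
wavelength_channels = 223

record_in_gigabits = record_bps / GIGA                            # about 1.84 million
avg_per_channel_tbps = record_bps / wavelength_channels / TERA    # about 8.25
gain_over_previous_chip = record_bps / previous_chip_bps          # about 2.8x
projected_headroom = projected_bps / record_bps                   # about 54x
```

In other words, the new result is nearly three times the previous single-chip record, and the modeled ceiling is roughly 54 times higher still.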

The researchers say they accomplish this staggering data rate by dividing one frequency comb into many copies and optically amplifying their signals. “The power and potential of frequency combs is thus far greater than I think most comb enthusiasts even dared to dream of,” Oxenløwe says.

Future research can integrate components such as the laser, data modulators, and amplifiers onto the optical chip, Oxenløwe says.

The scientists detailed their findings 20 October in the journal Nature Photonics.

Leslie Russell is the senior awards presentation manager for IEEE Awards Activities.

Each year IEEE pays tribute to technical professionals whose outstanding contributions have made a lasting impact on technology and the engineering profession for humanity. The IEEE Awards program seeks nominations annually for IEEE's top awards—Medals, Recognitions, and Technical Field Awards—that are given on behalf of the IEEE Board of Directors.

You don’t have to be an IEEE member to receive, nominate, or endorse someone for an award.

Nominations for 2024 Medals and Recognitions will be open from 1 December 2022 to 15 June 2023. All nominations must be submitted through the IEEE Awards online portal set up for Medals and Recognitions.

These awards are presented at the annual IEEE Honors Ceremony. The 2023 IEEE Vision, Innovation, and Challenges Summit and Honors Ceremony will be held on 5 May at the Hilton Atlanta. Planning for the 2023 event is currently underway, and more information will be announced in the coming months.

The IEEE Awards Board has an ongoing initiative to increase diversity among the selection committees and candidates, including their technical discipline, geography, and gender. You can help by nominating a colleague for one of the following awards.

IEEE Medal of Honor

For an exceptional contribution or an extraordinary career in the IEEE fields of interest.

SPONSOR: IEEE Foundation

IEEE Frances E. Allen Medal

For innovative work in computing leading to lasting impact on other aspects of engineering, science, technology, or society.

SPONSOR: IBM

IEEE Alexander Graham Bell Medal

For exceptional contributions to communications and networking sciences and engineering.

SPONSOR: Nokia Bell Labs

IEEE Mildred Dresselhaus Medal

For outstanding technical contributions in science and engineering of great impact to IEEE fields of interest.

SPONSOR: Google, LLC

IEEE Edison Medal

For a career of meritorious achievement in electrical science, electrical engineering, or the electrical arts.

SPONSOR: Samsung Electronics Co., Ltd.

IEEE Medal for Environmental and Safety Technologies

For outstanding accomplishments in the application of technology in the fields of interest of IEEE that improve the environment and/or public safety.

SPONSOR: Toyota Motor Corp.

IEEE Founders Medal

For outstanding contributions in the leadership, planning, and administration of affairs of great value to the electrical and electronics engineering profession.

SPONSOR: The IEEE Richard and Mary Jo Stanley Memorial Fund of the IEEE Foundation

IEEE Richard W. Hamming Medal

For exceptional contributions to information sciences, systems, and technology.

SPONSOR: Qualcomm, Inc.

IEEE Medal for Innovations in Healthcare Technology

For exceptional contributions to technologies and applications benefitting health care, medicine, and the health sciences.

SPONSOR: IEEE Engineering in Medicine and Biology Society

IEEE Nick Holonyak, Jr. Medal for Semiconductor Optoelectronic Technologies

For outstanding contributions to semiconductor optoelectronic devices and systems including high-efficiency semiconductor devices and electronics.

SPONSOR: Friends of Nick Holonyak, Jr.

IEEE Jack S. Kilby Signal Processing Medal

For outstanding achievements in signal processing.

SPONSOR: Apple

IEEE/RSE James Clerk Maxwell Medal

For groundbreaking contributions that have had an exceptional impact on the development of electronics and electrical engineering or related fields.

SPONSOR: ARM, Ltd.

IEEE James H. Mulligan, Jr. Education Medal

For a career of outstanding contributions to education in the fields of interest of IEEE.

SPONSORS: MathWorks, Lockheed Martin Corp., and the IEEE Life Members Fund

IEEE Jun-ichi Nishizawa Medal

For outstanding contributions to material and device science and technology, including practical application.

SPONSOR: The Jun-ichi Nishizawa Medal Fund

IEEE Robert N. Noyce Medal

For exceptional contributions to the microelectronics industry.

SPONSOR: Intel

IEEE Dennis J. Picard Medal for Radar Technologies and Applications

For outstanding accomplishments in advancing the fields of radar technologies and their applications.

SPONSOR: Raytheon Technologies

IEEE Medal in Power Engineering

For outstanding contributions to the technology associated with the generation, transmission, distribution, application, and utilization of electric power for the betterment of society.

SPONSORS: IEEE Industry Applications, IEEE Industrial Electronics, IEEE Power Electronics, and IEEE Power & Energy societies

IEEE Simon Ramo Medal

For exceptional achievement in systems engineering and systems science.

SPONSOR: Northrop Grumman Corp.

IEEE John von Neumann Medal

For outstanding achievements in computer-related science and technology.

SPONSOR: IBM

IEEE Corporate Innovation Award

For an outstanding innovation by an organization in an IEEE field of interest.

SPONSOR: IEEE

IEEE Honorary Membership

To individuals not members of the IEEE, who have rendered meritorious service to humanity in IEEE's designated fields of interest.

SPONSOR: IEEE

IEEE Theodore W. Hissey Outstanding Young Professional Award

Awarded to a young professional for contributions to the technical community and IEEE fields of interest.

SPONSORS: IEEE Young Professionals and the IEEE Photonics and IEEE Power & Energy societies

IEEE Richard M. Emberson Award

For distinguished service advancing the technical objectives of the IEEE.

SPONSOR: IEEE Technical Activities Board

IEEE Haraden Pratt Award

For outstanding volunteer service to the IEEE.

SPONSOR: IEEE Foundation

If you have questions, email [email protected] or call +1 732 562 3844.

Intensive clinical collaboration is fueling growth of NYU Tandon’s biomedical engineering program

Dexter Johnson is a contributing editor at IEEE Spectrum, with a focus on nanotechnology.

This optical tomography device can be used to recognize and track breast cancer without the negative effects of previous imaging technology. It shines near-infrared light into breast tissue and measures the attenuation of that light as it propagates through the affected tissue.

This is a sponsored article brought to you by NYU’s Tandon School of Engineering.

When Andreas H. Hielscher, the chair of the biomedical engineering (BME) department at NYU’s Tandon School of Engineering, arrived at his new position, he saw raw potential. NYU Tandon had undergone a meteoric rise in its U.S. News & World Report graduate ranking in recent years, skyrocketing 47 spots since 2009. At the same time, the NYU Grossman School of Medicine had shot from the thirties to the #2 spot in the country for research. The two scientific powerhouses, sitting on opposite banks of the East River, offered Hielscher a unique opportunity: to work at the intersection of engineering and healthcare research, with the unmet clinical needs and clinician feedback from NYU’s world-renowned medical program directly informing new areas of development, exploration, and testing.

“There is now an understanding that technology coming from a biomedical engineering department can play a big role for a top-tier medical school,” said Hielscher. “At some point, everybody needs to have a BME department.”

In the early days of biomedical engineering departments nationwide, there was some resistance even to the notion of biomedical engineering: either you were an electrical engineer or a mechanical engineer. “That’s no longer the case,” said Hielscher. “The combining of the biology and medical aspects with the engineering aspects has been proven to be the best approach.”

Dr. Andreas Hielscher, NYU Tandon Biomedical Engineering Department Chair and head of the Clinical Biophotonics Laboratory, speaks with IEEE Spectrum about his work leveraging optical tomography for early detection and treatment monitoring for breast cancer.

The proof of this can be seen by the trend that an undergraduate biomedical degree has become one of the most desired engineering degrees, according to Hielscher. He also noted that the current Dean of NYU’s Tandon School of Engineering, Jelena Kovačević, has a biomedical engineering background, having just received the 2022 IEEE Engineering in Medicine and Biology Society career achievement award for her pioneering research related to signal processing applications for biomedical imaging.

Mary Cowman, a pioneer in joint and cartilage regeneration, began laying the foundations for NYU Tandon’s biomedical engineering department in the 2010s. Since her retirement in 2020, Hielscher has continued to grow the department through innovative collaborations with the medical school and medical center, including the recently announced Translational Healthcare Initiative, on which Hielscher worked closely with Daniel Sodickson, the co-director of the medical school’s Tech4Health.

Andreas Hielscher joined NYU Tandon in 2020 as Professor and Chair of the Department of Biomedical Engineering.

NYU Tandon

“The fundamental idea of the Initiative is to have one physician from Langone Medical School, and one engineer at least—you could have multiple—and have them address some unmet clinical needs, some particular problem,” explained Hielscher. “In many cases they have already worked together, or researched this issue. What this initiative is about is to give these groups funding to do some experimentation to either prove that it won’t work, or demonstrate that it can and prioritize it.”

With this funding of further experimentation, it becomes possible to develop the technology to a point where you could begin to bring investors in, according to Hielscher. “This mitigates the risk of the technology and helps attract potential investors,” added Hielscher. “At that point, perhaps a medical device company comes in, or some angel investor, and then you can get to the next level of investment for moving the technology forward.”

Hielscher himself has been leading research on developing new technologies within the Clinical Biophotonics Laboratory. One of the latest areas of research has been investigating the application of optical technologies to breast cancer diagnosis.

Cross sections of a breast with a tumor during a breath hold, taken with a dynamic optical tomographic breast imaging system developed by Dr. Hielscher. As a patient holds their breath, the blood concentration increases by up to 10 percent (seen in red). Dr. Hielscher’s team found that analyzing the increase and decrease in blood concentrations inside a tumor could help them determine which patients would respond to chemotherapy.

A.H. Hielscher, Clinical Biophotonics Laboratory

Hielscher and his colleagues have built a system that shines light through both breasts at the same time. By measuring how much light is reflected back, it’s possible to generate maps of locations with high levels of oxygen and total hemoglobin, which may indicate tumors.

“We look at where there’s blood in the breast,” explained Hielscher. “Because breast tumors recruit new blood vessels, or, once they grow, they generate their own vascular network requiring more oxygen, wherever there is a tumor you will see an increase in total blood volume, and you will see more oxygenated blood.”

Initially, this diagnostic tool was targeted for early detection, since mammograms can only detect calcification in lower density breast tissue of women over a certain age. But it soon became clear in collaboration with clinical partners that it was also highly effective in monitoring treatment.

This realization came in part because of a recent change in cancer treatment that has moved towards what is known as neoadjuvant chemotherapy, in which chemotherapy drugs are administered before surgical extraction of the tumor. One of the drawbacks of this approach is that only around 60 percent of patients respond favorably to the chemotherapy, resulting in a large percentage of patients suffering through a grueling six-month-long chemotherapy treatment with minimal-to-no impact on the tumor.

With the optical technique, Hielscher and his colleagues have found that if they can detect a noticeable decrease of blood in targeted areas after two weeks, it’s very likely that the patient will respond to the chemotherapy. On the other hand, if they see that the amount of blood in that area stays the same, then there’s a very high likelihood that the patient will not respond to the therapy.

This same fundamental technique can also be applied to what is known as peripheral artery disease (PAD), which affects many patients with diabetes and involves the narrowing or blockage of the vessels that carry blood from the heart to the legs. An Israel-based company called VOTIS has licensed the technology for diagnosing and treating PAD.

Example of a frequency-domain image of a finger joint (proximal interphalangeal joint of index finger) affected by lupus arthritis.

A.H. Hielscher, Clinical Biophotonics Laboratory

While Hielscher’s work is in biophotonics, he recognized that the department has also quickly been developing a reputation in other emerging areas, including wearables, synthetic biology, and neurorehabilitation and stroke prediction.

Hielscher highlighted the recent work of Rose Faghih, working in smart wearables and data for mental health, Jef Boeke, a synthetic biology pioneer, and S. Farokh Atashzar, doing work in neurorehabilitation and stroke prediction. Atashzar’s work was highlighted last year in the pages of IEEE Spectrum.

“Rose Faghih is leveraging all kinds of sensors to make inferences about the mental state of patients, to determine if someone is depressed or schizophrenic, and then possibly have a feedback loop where you actually also treat them,” said Hielscher. “Jef Boeke is involved in what I term ‘wet engineering,’ and is currently involved in efforts to take cancer cells outside of the body to find a way to attack them, or reprogram them.”

As NYU Tandon’s BME department goes forward, Hielscher’s aim is for the department to become a trusted source for the medical school, and for that partnership to enable key technologies to go from an unmet clinical need or an idea in a lab to a patient’s bedside within three to five years.

“What I really would like,” Hielscher concluded, “is that if somebody in the medical school has a problem, the first thing they would say is, ‘Oh, I’ll call the engineering school. I bet there’s somebody there that can help me.’ We can work together to benefit patients, and we’re starting this already.”